Matt Stern

Robo-advising: Learning Investors' Risk Preferences via Portfolio Choices

Nov 16, 2019

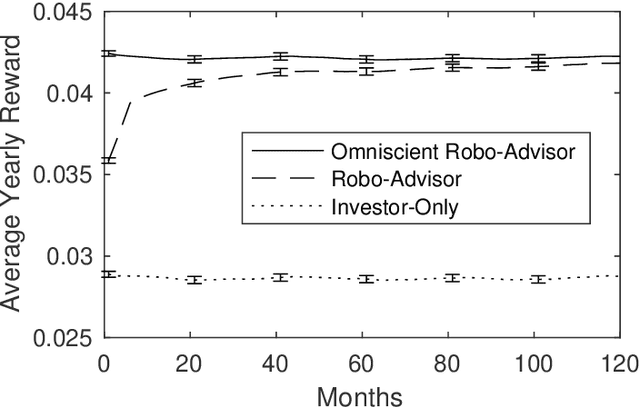

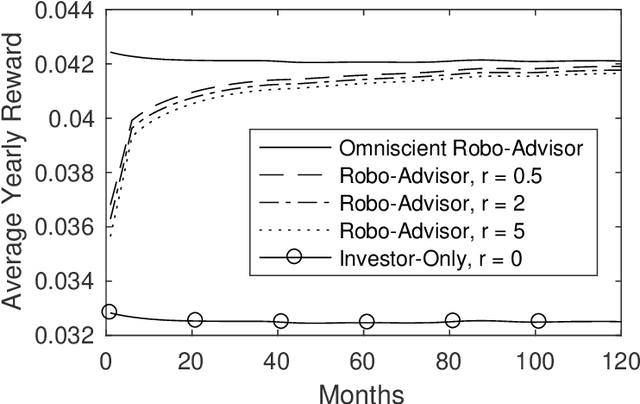

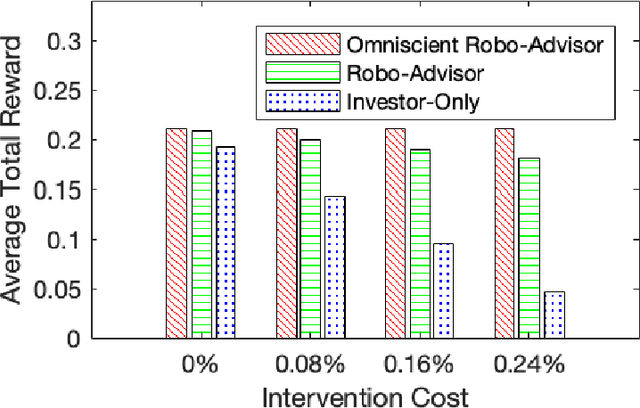

Abstract: We introduce a reinforcement learning framework for retail robo-advising. The robo-advisor does not know the investor's risk preference, but learns it over time by observing her portfolio choices in different market environments. We develop an exploration-exploitation algorithm which trades off costly solicitations of portfolio choices by the investor with autonomous trading decisions based on stale estimates of the investor's risk aversion. We show that the algorithm's value function converges to the optimal value function of an omniscient robo-advisor over a number of periods that is polynomial in the state and action space. By correcting for the investor's mistakes, the robo-advisor may outperform a stand-alone investor, regardless of the investor's opportunity cost for making portfolio decisions.
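
As a rough illustration of how risk aversion could be inferred from observed portfolio choices, the sketch below assumes a textbook mean-variance investor holding a single risky asset, for whom the chosen weight is w = mu / (gamma * sigma^2). This model, the function names, and the simple averaging step are assumptions made for illustration; the abstract does not specify the investor model, and this is not the paper's algorithm.

```python
def optimal_weight(mu, sigma, gamma):
    """Risky-asset weight of a mean-variance investor (assumed model).

    mu: expected excess return, sigma: volatility, gamma: risk aversion.
    """
    return mu / (gamma * sigma ** 2)


def implied_risk_aversion(mu, sigma, weight):
    """Invert the mean-variance rule to recover gamma from one observed choice."""
    return mu / (weight * sigma ** 2)


def estimate_risk_aversion(observations):
    """Average the implied gamma over (mu, sigma, weight) observations.

    Each observation is one portfolio choice made in one market environment.
    """
    estimates = [implied_risk_aversion(mu, sigma, w) for mu, sigma, w in observations]
    return sum(estimates) / len(estimates)


if __name__ == "__main__":
    true_gamma = 4.0  # hypothetical investor, unknown to the advisor
    markets = [(0.05, 0.20), (0.08, 0.25), (0.03, 0.15)]  # hypothetical (mu, sigma) pairs
    observed = [(mu, s, optimal_weight(mu, s, true_gamma)) for mu, s in markets]
    print(estimate_risk_aversion(observed))
```

With noise-free choices every observation implies the same gamma, so a single solicitation would be enough. The trade-off described in the abstract matters when choices are costly to solicit and the investor makes mistakes, which this sketch does not model.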

Risk-Sensitive Cooperative Games for Human-Machine Systems

May 26, 2017

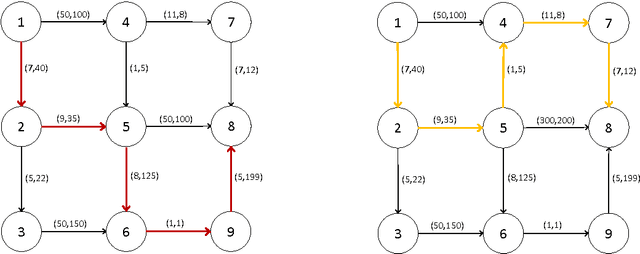

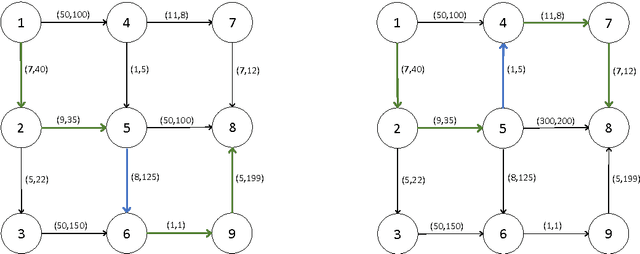

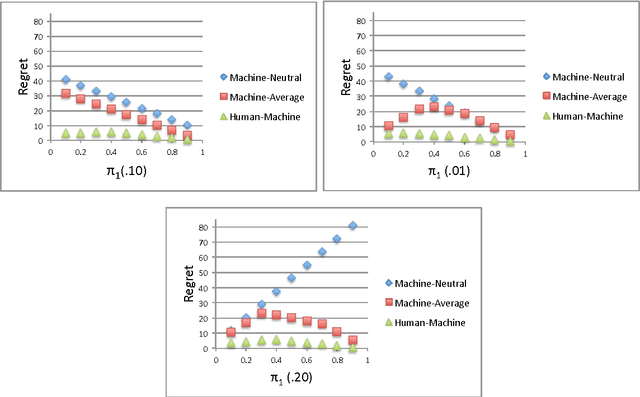

Abstract: Autonomous systems can substantially enhance a human's efficiency and effectiveness in complex environments. Machines, however, are often unable to observe the preferences of the humans that they serve. Although the human's and machine's objectives are aligned, asymmetric information, along with heterogeneous sensitivities to risk by the human and machine, makes their joint optimization process a game with strategic interactions. We propose a framework based on risk-sensitive dynamic games; the human seeks to optimize her risk-sensitive criterion according to her true preferences, while the machine seeks to adaptively learn the human's preferences and at the same time provide a good service to the human. We develop a class of performance measures for the proposed framework based on the concept of regret. We then evaluate their dependence on the risk-sensitivity and the degree of uncertainty. We present applications of our framework to self-driving taxis and robo-financial advising.
