Matias Alvo

Neural Inventory Control in Networks via Hindsight Differentiable Policy Optimization

Jun 20, 2023

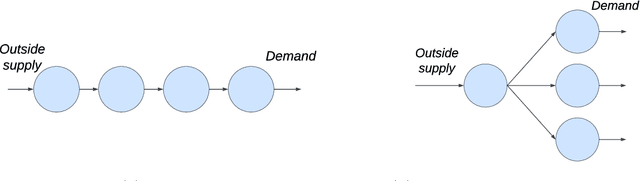

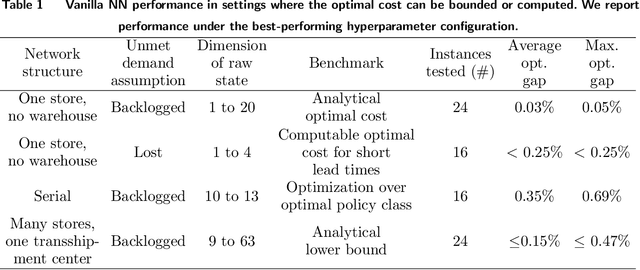

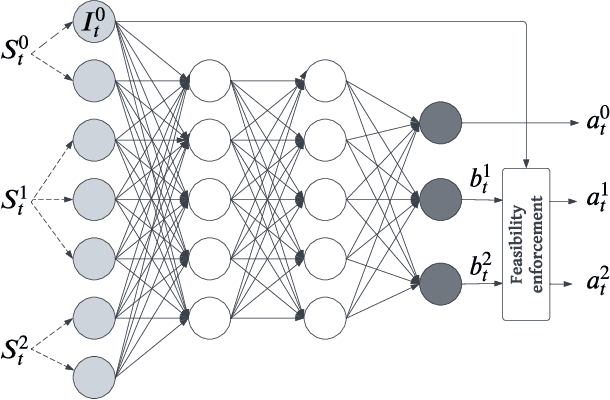

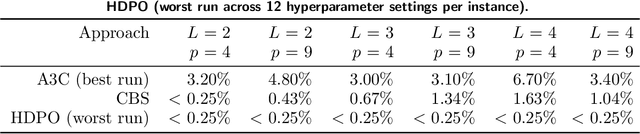

Abstract: Inventory management offers unique opportunities for reliably evaluating and applying deep reinforcement learning (DRL). Rather than evaluate DRL algorithms by comparing against one another or against human experts, we can compare to the optimum itself in several problem classes with hidden structure. Our DRL methods consistently recover near-optimal policies in such settings, despite being applied with up to 600-dimensional raw state vectors. In other settings, they can vastly outperform problem-specific heuristics. To reliably apply DRL, we leverage two insights. First, one can directly optimize the hindsight performance of any policy using stochastic gradient descent. This uses (i) an ability to backtest any policy's performance on a subsample of historical demand observations, and (ii) the differentiability of the total cost incurred on any subsample with respect to policy parameters. Second, we propose a natural neural network architecture to address problems with weak (or aggregate) coupling constraints between locations in an inventory network. This architecture employs weight duplication for "sibling" locations in the network, and state summarization. We justify this architecture through an asymptotic guarantee, and empirically affirm its value in handling large-scale problems.
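The first insight can be illustrated on a toy problem. The sketch below is not the authors' method or architecture: it assumes a single location, zero lead time, backlogged demand, and a one-parameter base-stock policy in place of a neural network, so that each period's cost reduces to a newsvendor cost. The function names, cost parameters `h` and `p`, and the synthetic uniform demand history are all hypothetical. It backtests the policy on random subsamples of the history, differentiates the hindsight cost with respect to the policy parameter (by hand here, where an autodiff library would normally be used), and applies stochastic gradient descent. Because the optimum for this toy problem is known in closed form, the learned parameter can be compared against it directly.

```python
import random


def hindsight_cost_and_grad(S, demands, h=1.0, p=9.0):
    """Backtest base-stock level S on a demand sample.

    Returns the average holding-plus-backlog cost and its (sub)gradient
    with respect to S. h and p are hypothetical per-unit costs.
    """
    cost, grad = 0.0, 0.0
    for d in demands:
        if S >= d:
            cost += h * (S - d)   # leftover stock is charged holding cost
            grad += h
        else:
            cost += p * (d - S)   # unmet demand is charged backlog cost
            grad -= p
    n = len(demands)
    return cost / n, grad / n


def fit_base_stock(history, steps=2000, batch=32, lr=0.5, seed=0):
    """SGD on the hindsight cost, using random subsamples of the history."""
    rng = random.Random(seed)
    S = 0.0
    for t in range(steps):
        sample = rng.sample(history, batch)
        _, g = hindsight_cost_and_grad(S, sample)
        S -= lr / (1 + t) ** 0.5 * g   # decaying step size
    return S


# Synthetic "historical" demand (an assumption made for illustration only).
data_rng = random.Random(1)
history = [data_rng.uniform(0.0, 100.0) for _ in range(5000)]

S_hat = fit_base_stock(history)

# Known optimum for this toy problem: the p / (p + h) = 0.9 demand quantile.
S_star = sorted(history)[int(0.9 * len(history))]

cost_hat, _ = hindsight_cost_and_grad(S_hat, history)
cost_star, _ = hindsight_cost_and_grad(S_star, history)
print(f"learned S = {S_hat:.2f}, optimal S = {S_star:.2f}")
print(f"learned cost = {cost_hat:.3f}, optimal cost = {cost_star:.3f}")
```

In a multi-period network setting the same loop would apply, but the cost would be accumulated along a simulated trajectory and the gradient obtained by backpropagating through that simulation.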
