Mathilde Raynal

On the Conflict of Robustness and Learning in Collaborative Machine Learning

Feb 21, 2024

Abstract: Collaborative Machine Learning (CML) allows participants to jointly train a machine learning model while keeping their training data private. In scenarios where privacy is a strong requirement, such as health-related applications, safety is also a primary concern. This means that privacy-preserving CML processes must produce models that output correct and reliable decisions even in the presence of potentially untrusted participants. In response to this issue, researchers propose to use robust aggregators that rely on metrics which help filter out malicious contributions that could compromise the training process. In this work, we formalize the landscape of robust aggregators in the literature. Our formalization allows us to show that existing robust aggregators cannot fulfill their goal: either they use distance-based metrics that cannot accurately identify targeted malicious updates, or they propose methods whose success is in direct conflict with the ability of CML participants to learn from others and therefore cannot eliminate the risk of manipulation without preventing learning.

Can Decentralized Learning be more robust than Federated Learning?

Mar 07, 2023

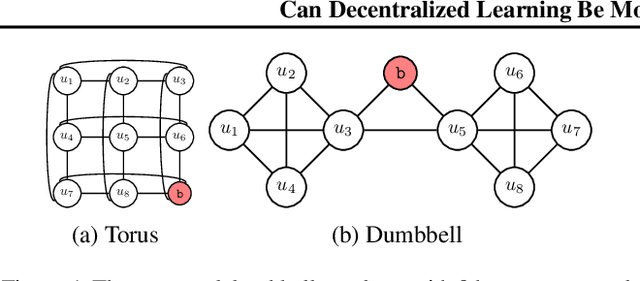

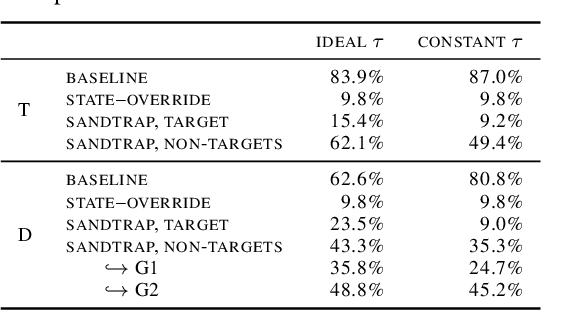

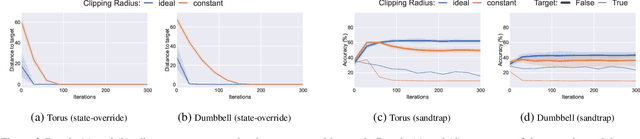

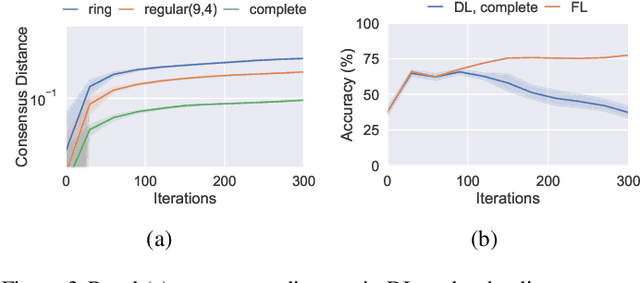

Abstract: Decentralized Learning (DL) is a peer-to-peer learning approach that allows a group of users to jointly train a machine learning model. To ensure correctness, DL should be robust, i.e., Byzantine users must not be able to tamper with the result of the collaboration. In this paper, we introduce two new attacks against DL where a Byzantine user can make the network converge to an arbitrary model of their choice, and exclude an arbitrary user from the learning process. We demonstrate our attacks' efficiency against Self-Centered Clipping, the state-of-the-art robust DL protocol. Finally, we show that the capabilities decentralization grants to Byzantine users result in decentralized learning always providing less robustness than federated learning.

On the Privacy of Decentralized Machine Learning

May 17, 2022

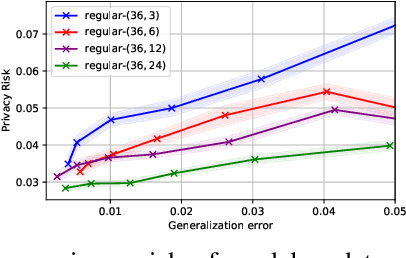

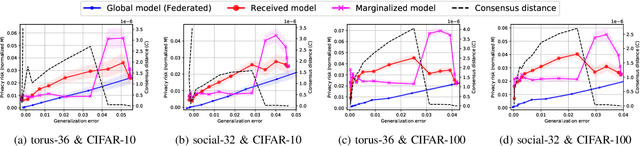

Abstract: In this work, we carry out the first in-depth privacy analysis of Decentralized Learning, a collaborative machine learning framework aimed at circumventing the main limitations of federated learning. We identify the decentralized learning properties that affect users' privacy and we introduce a suite of novel attacks for both passive and active decentralized adversaries. We demonstrate that, contrary to what is claimed by decentralized learning proposers, decentralized learning does not offer any security advantages over more practical approaches such as federated learning. Rather, it tends to degrade users' privacy by increasing the attack surface and enabling any user in the system to perform powerful privacy attacks such as gradient inversion, and even gain full control over honest users' local model. We also reveal that, given the state of the art in protections, privacy-preserving configurations of decentralized learning require abandoning any possible advantage over the federated setup, completely defeating the objective of the decentralized approach.
