Masato Kiyama

A simple DNN regression for the chemical composition in essential oil

Dec 17, 2024

Abstract: Although experimental designs and methodological surveys for mono-molecular activity/property have been extensively investigated, those for chemical composition have received little attention, with the exception of a few prior studies. In this study, we configured three simple DNN regressors to predict an essential oil property based on chemical composition. Despite showing overfitting due to the small size of the dataset, all models were trained effectively in this study.

Extension of Convolutional Neural Network along Temporal and Vertical Directions for Precipitation Downscaling

Dec 13, 2021

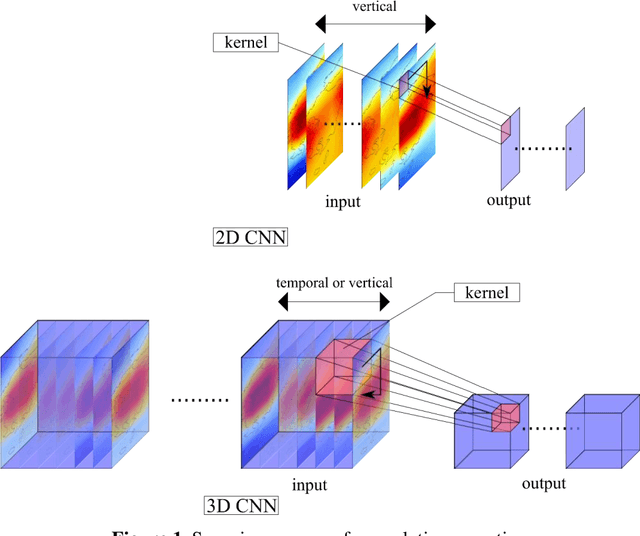

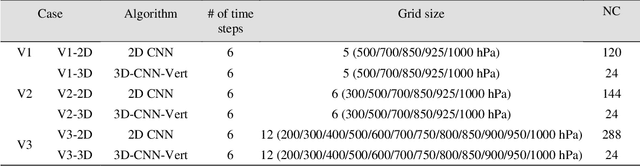

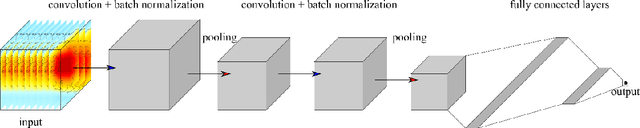

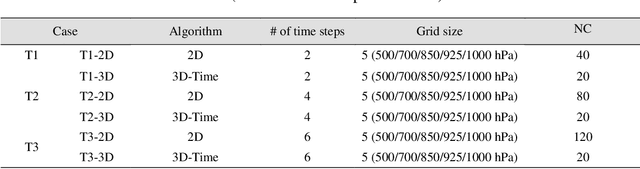

Abstract: Deep learning has been utilized for the statistical downscaling of climate data. Specifically, a two-dimensional (2D) convolutional neural network (CNN) has been successfully applied to precipitation estimation. This study implements a three-dimensional (3D) CNN to estimate watershed-scale daily precipitation from 3D atmospheric data and compares the results with those for a 2D CNN. The 2D CNN is extended along the time direction (3D-CNN-Time) and the vertical direction (3D-CNN-Vert). The precipitation estimates of these extended CNNs are compared with those of the 2D CNN in terms of the root-mean-square error (RMSE), Nash-Sutcliffe efficiency (NSE), and 99th percentile RMSE. It is found that both 3D-CNN-Time and 3D-CNN-Vert improve the model accuracy for precipitation estimation compared to the 2D CNN. 3D-CNN-Vert provided the best estimates during the training and test periods in terms of RMSE and NSE.
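The evaluation metrics named in the abstract above can be sketched in a few lines of plain Python. RMSE and NSE follow their standard definitions. The abstract does not define "99th percentile RMSE", so the function `rmse_above_percentile` below is an assumption: it computes RMSE only over samples whose observed value is at or above the q-th percentile (nearest-rank) of the observations. All function names are hypothetical.

```python
import math

def rmse(obs, sim):
    """Root-mean-square error between observed and simulated values."""
    return math.sqrt(sum((s - o) ** 2 for o, s in zip(obs, sim)) / len(obs))

def nse(obs, sim):
    """Nash-Sutcliffe efficiency: 1 is a perfect fit, 0 matches the observed mean."""
    mean_obs = sum(obs) / len(obs)
    residual = sum((s - o) ** 2 for o, s in zip(obs, sim))
    variance = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - residual / variance

def rmse_above_percentile(obs, sim, q=99.0):
    """RMSE restricted to samples whose observation is >= the q-th percentile.

    Assumption: this is one plausible reading of "99th percentile RMSE";
    the abstract does not state the exact definition. The percentile uses
    the nearest-rank method.
    """
    rank = max(1, math.ceil(q / 100.0 * len(obs)))
    threshold = sorted(obs)[rank - 1]
    pairs = [(o, s) for o, s in zip(obs, sim) if o >= threshold]
    return rmse([o for o, _ in pairs], [s for _, s in pairs])
```

A simulation that always returns the observed mean scores an NSE of 0, which is why NSE is often read as skill relative to that baseline.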

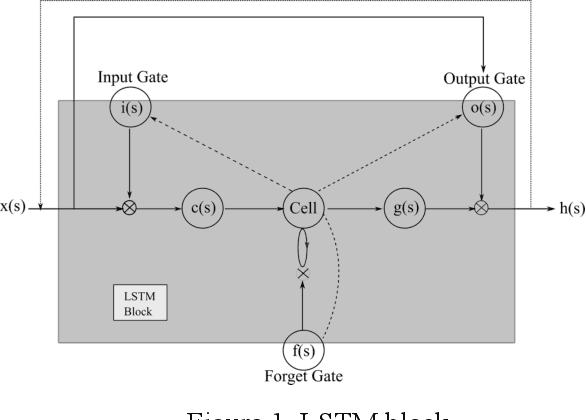

Use of 1D-CNN for input data size reduction of LSTM in Hourly Rainfall-Runoff modeling

Nov 07, 2021

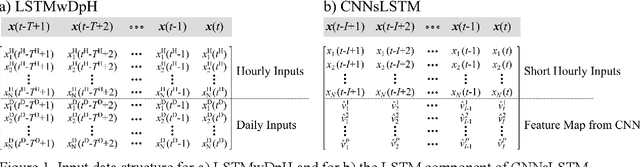

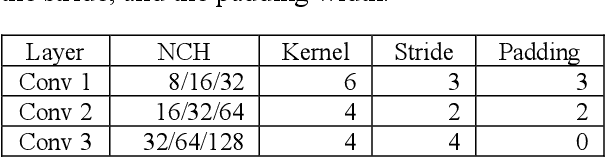

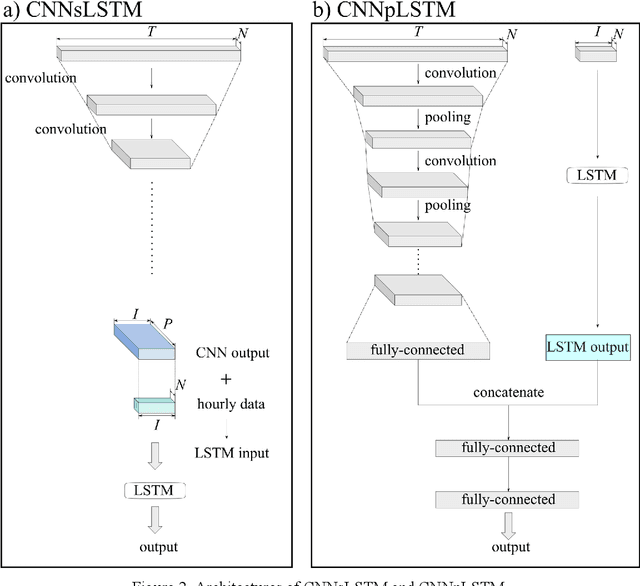

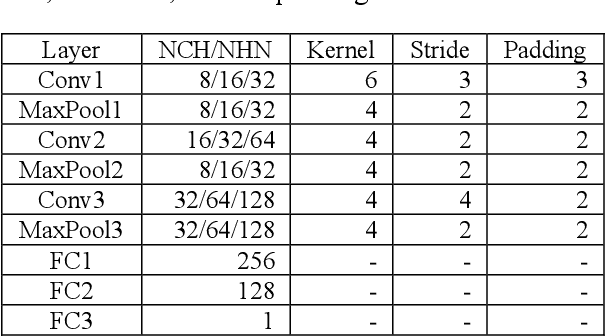

Abstract: An architecture consisting of a serial coupling of the one-dimensional convolutional neural network (1D-CNN) and the long short-term memory (LSTM) network, which is referred to as CNNsLSTM, was proposed for hourly-scale rainfall-runoff modeling in this study. In CNNsLSTM, the CNN component receives the hourly meteorological time series data for a long duration, and then the LSTM component receives the extracted features from 1D-CNN and the hourly meteorological time series data for a short duration. As a case study, CNNsLSTM was implemented for hourly rainfall-runoff modeling at the Ishikari River watershed, Japan. The meteorological dataset, consisting of precipitation, air temperature, evapotranspiration, and long- and short-wave radiation, was utilized as input, and the river flow was used as the target data. To evaluate the performance of the proposed CNNsLSTM, results of CNNsLSTM were compared with those of 1D-CNN, LSTM only with hourly inputs (LSTMwHour), a parallel architecture of 1D-CNN and LSTM (CNNpLSTM), and the LSTM architecture which uses both daily and hourly input data (LSTMwDpH). CNNsLSTM showed clear improvements in estimation accuracy compared to the three conventional architectures (1D-CNN, LSTMwHour, and CNNpLSTM) and the recently proposed LSTMwDpH. In comparison to observed flows, the median NSE values for the test period are 0.455-0.469 for 1D-CNN (based on NCHF=8, 16, and 32, the numbers of the channels of the feature map of the first layer of CNN), 0.639-0.656 for CNNpLSTM (based on NCHF=8, 16, and 32), 0.745 for LSTMwHour, 0.831 for LSTMwDpH, and 0.865-0.873 for CNNsLSTM (based on NCHF=8, 16, and 32). Furthermore, the proposed CNNsLSTM reduces the median RMSE of 1D-CNN by 50.2%-51.4%, CNNpLSTM by 37.4%-40.8%, LSTMwHour by 27.3%-29.5%, and LSTMwDpH by 10.6%-13.4%.
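The two-window input scheme described in the abstract above (a long hourly window for the CNN component, a short hourly window for the LSTM component) can be illustrated with a small data-preparation sketch. It covers only the slicing of inputs, not the networks themselves. The function name `make_sample`, the window lengths, and the choice that both windows end at the target hour are assumptions for illustration; the abstract does not specify them.

```python
def make_sample(series, t, long_len, short_len):
    """Slice one training sample from an hourly feature series.

    series    : list of per-hour feature vectors (e.g. precipitation, temperature)
    t         : index of the target hour
    long_len  : hours fed to the CNN component (hypothetical value)
    short_len : hours fed to the LSTM component (hypothetical value)

    Assumption: both windows end at hour t, so the short window is the
    most recent part of the long window.
    """
    if short_len > long_len:
        raise ValueError("short window must not exceed long window")
    if t + 1 < long_len or t >= len(series):
        raise ValueError("not enough history for the long window")
    long_window = series[t + 1 - long_len : t + 1]
    short_window = series[t + 1 - short_len : t + 1]
    return long_window, short_window


# Usage: ten hours of a single feature, 6-hour long window, 2-hour short window.
hourly = [[float(h)] for h in range(10)]
cnn_input, lstm_input = make_sample(hourly, t=9, long_len=6, short_len=2)
```

In a serial coupling, the features the CNN extracts from `cnn_input` would be combined with `lstm_input` before entering the LSTM, so the LSTM processes far fewer time steps than the long window contains.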

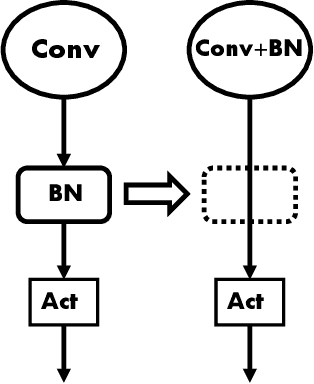

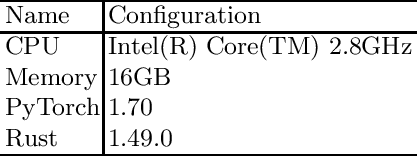

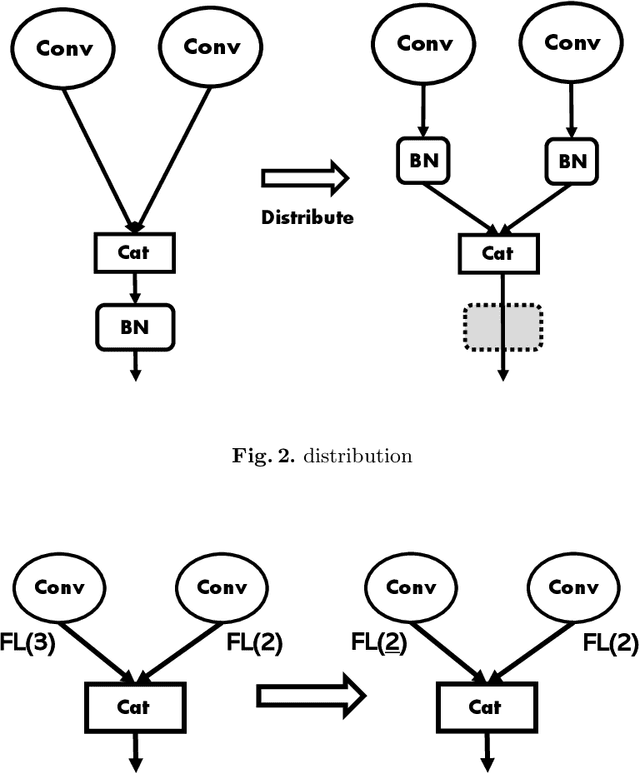

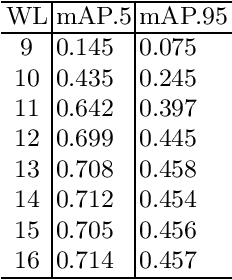

Development of Quantized DNN Library for Exact Hardware Emulation

Jun 15, 2021

Abstract: Quantization is used to speed up execution and save power when running deep neural networks (DNNs) on edge devices like AI chips. To investigate the effect of quantization, we need to perform inference after quantizing the weights of a DNN from 32-bit floating-point precision to some bit width, and then converting them back to 32-bit floating-point precision. This is because the DNN library can only handle floating-point numbers. However, this emulation is not exact. Exact precision is needed to detect overflow in MAC operations or to verify the operation on edge devices. We have developed PyParch, a DNN library that executes quantized DNNs (QNNs) with exactly the same behavior as hardware. In this paper, we describe a new proposal and implementation of PyParch. As a result of the evaluation, the accuracy of QNNs with arbitrary bit widths can be estimated for large and complex DNNs such as YOLOv5, and overflow can be detected. We evaluated the overhead of the emulation time and found that it was 5.6 times slower for QNN and 42 times slower for QNN with overflow detection compared to the normal DNN execution time.
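The contrast drawn in the abstract above, between quantize-then-convert-back emulation in floating point and exact integer execution with overflow detection, can be sketched generically as follows. This is not PyParch's API or implementation; all names are hypothetical, and the symmetric signed quantization scheme and the two's-complement wrap-around on overflow are assumptions chosen for illustration.

```python
def quantize(values, scale, bits):
    """Map floats to signed integers of the given bit width (symmetric, clamped).

    Note: Python's round() rounds halves to the nearest even integer;
    real hardware may use a different rounding mode.
    """
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return [max(qmin, min(qmax, round(v / scale))) for v in values]

def dequantize(qvalues, scale):
    """Convert quantized integers back to floats (the float-emulation path)."""
    return [q * scale for q in qvalues]

def mac_with_overflow_check(qweights, qinputs, acc_bits):
    """Integer multiply-accumulate with a fixed-width signed accumulator.

    Returns (accumulator, overflowed). On overflow the accumulator wraps
    around as two's complement, which is an assumed hardware behavior.
    """
    lo, hi = -(2 ** (acc_bits - 1)), 2 ** (acc_bits - 1) - 1
    acc, overflowed = 0, False
    for w, x in zip(qweights, qinputs):
        acc += w * x
        if not lo <= acc <= hi:
            overflowed = True
            acc = (acc - lo) % (2 ** acc_bits) + lo  # wrap into [lo, hi]
    return acc, overflowed


# Float emulation: quantize to 4 bits, then convert back to floats.
q = quantize([1.0, -5.0, 10.0], scale=0.5, bits=4)
approx = dequantize(q, scale=0.5)

# Exact integer path: an 8-bit accumulator overflows, a 16-bit one does not.
narrow = mac_with_overflow_check([7, 7, 7], [7, 7, 7], acc_bits=8)
wide = mac_with_overflow_check([7, 7, 7], [7, 7, 7], acc_bits=16)
```

The float path accumulates in floating point and never sees the accumulator limit, so it cannot report the overflow that the integer path flags for the 8-bit accumulator.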

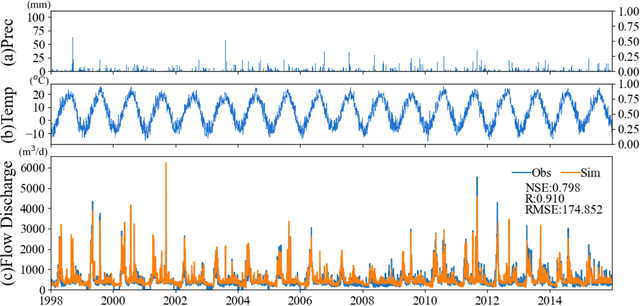

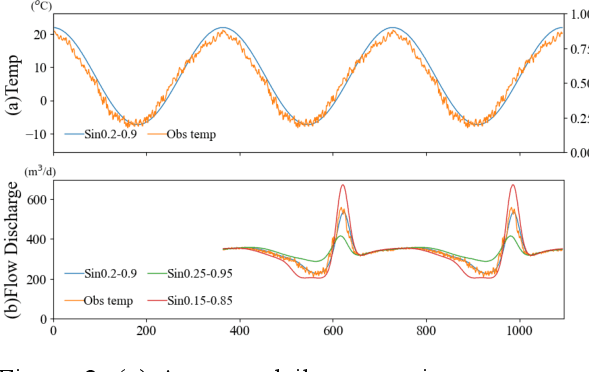

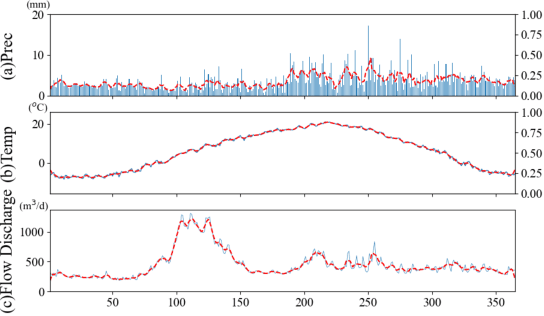

Capabilities of Deep Learning Models on Learning Physical Relationships: Case of Rainfall-Runoff Modeling with LSTM

Jun 15, 2021

Abstract: This study investigates the relationships which deep learning methods can identify between the input and output data. As a case study, rainfall-runoff modeling in a snow-dominated watershed by means of a long short-term memory (LSTM) network is selected. Daily precipitation and mean air temperature were used as model input to estimate daily flow discharge. After model training and verification, two experimental simulations were conducted with hypothetical inputs instead of observed meteorological data to clarify the response of the trained model to the inputs. The first numerical experiment showed that even without input precipitation, the trained model generated flow discharge, particularly winter low flow and high flow during the snow-melting period. The effects of warmer and colder conditions on the flow discharge were also replicated by the trained model without precipitation. Additionally, the model reflected only 17-39% of the total precipitation mass during the snow accumulation period in the total annual flow discharge, revealing a strong lack of water mass conservation. The results of this study indicated that deep learning methods may not properly learn the explicit physical relationships between input and target variables, although they are still capable of maintaining strong goodness-of-fit results.
