Masahiko Saito

Event Classification with Multi-step Machine Learning

Jun 04, 2021

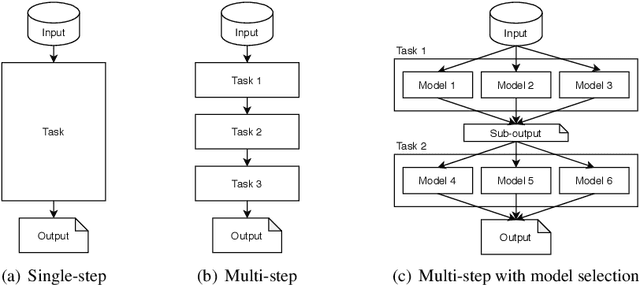

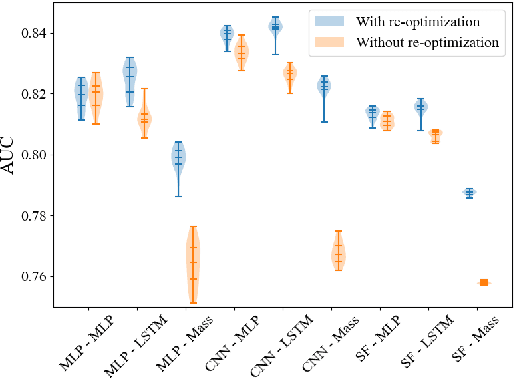

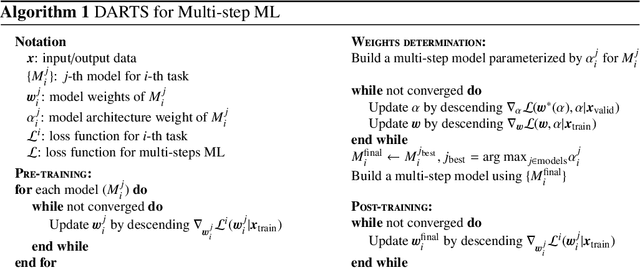

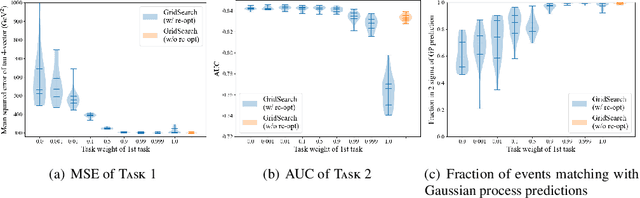

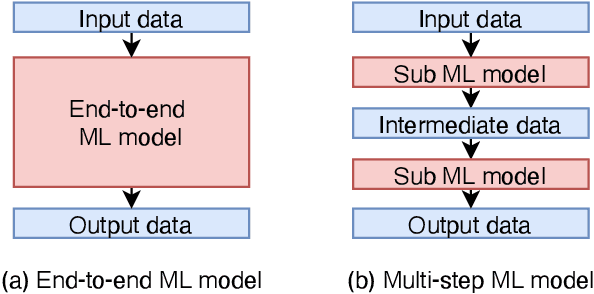

Abstract:The usefulness and value of Multi-step Machine Learning (ML), where a task is organized into connected sub-tasks with known intermediate inference goals, as opposed to a single large model learned end-to-end without intermediate sub-tasks, is presented. Pre-optimized ML models are connected and better performance is obtained by re-optimizing the connected one. The selection of an ML model from several small ML model candidates for each sub-task has been performed by using the idea based on Neural Architecture Search (NAS). In this paper, Differentiable Architecture Search (DARTS) and Single Path One-Shot NAS (SPOS-NAS) are tested, where the construction of loss functions is improved to keep all ML models smoothly learning. Using DARTS and SPOS-NAS as an optimization and selection as well as the connections for multi-step machine learning systems, we find that (1) such a system can quickly and successfully select highly performant model combinations, and (2) the selected models are consistent with baseline algorithms, such as grid search, and their outputs are well controlled.

An Improvement of Object Detection Performance using Multi-step Machine Learnings

Jan 19, 2021

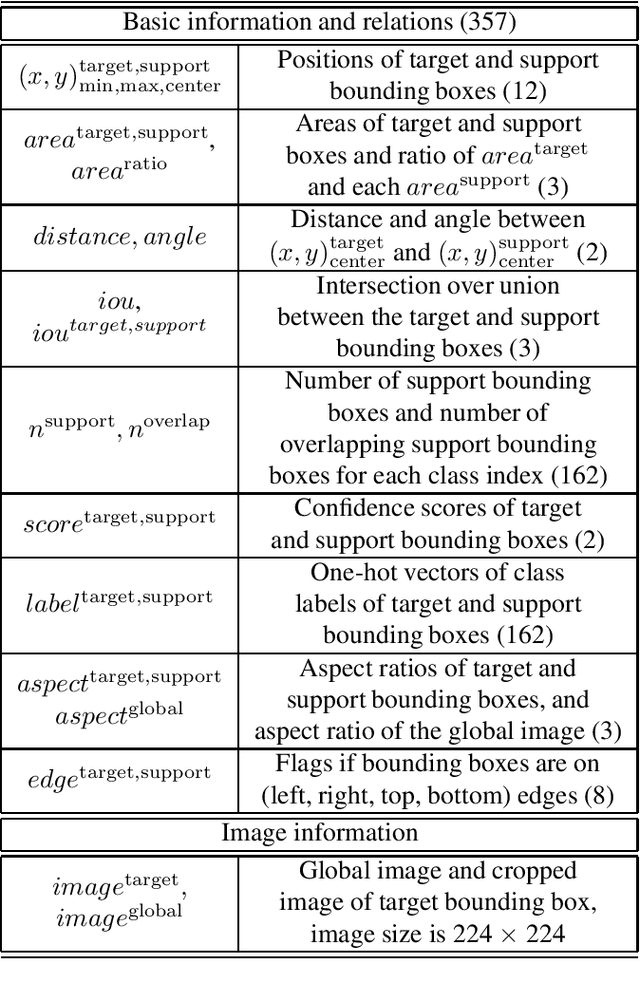

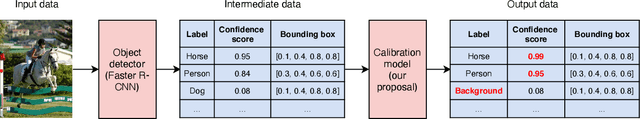

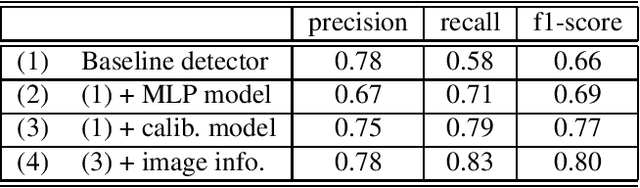

Abstract:Connecting multiple machine learning models into a pipeline is effective for handling complex problems. By breaking down the problem into steps, each tackled by a specific component model of the pipeline, the overall solution can be made accurate and explainable. This paper describes an enhancement of object detection based on this multi-step concept, where a post-processing step called the calibration model is introduced. The calibration model consists of a convolutional neural network, and utilizes rich contextual information based on the domain knowledge of the input. Improvements of object detection performance by 0.8-1.9 in average precision metric over existing object detectors have been observed using the new model.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge