Maryam Farajzadeh Dehkordi

Efficient and Sustainable Task Offloading in UAV-Assisted MEC Systems via Meta Deep Reinforcement Learning

Apr 01, 2025

Abstract: Integrated into existing Mobile Edge Computing (MEC) systems, Unmanned Aerial Vehicles (UAVs) serve as a cornerstone in meeting the stringent requirements of future Internet of Things (IoT) networks. The current endeavor studies an MEC system, in which a computationally-empowered UAV, wirelessly linked to a cloud server, is destined for task offloading in uplink transmission of IoT devices. The performance of this system is studied by formulating a resource allocation problem, which aims to maximize the long-term computed task efficiency, while ensuring the stability of task buffers at the IoT devices, UAV and cloud. The problem jointly optimizes the uplink transmit power of IoT devices and their offloading decisions, the trajectory of the UAV and computing power at all transceivers. Regarding the non-convex and stochastic nature of the problem, we devise a multi-step solution approach. Initially, by invoking the fractional programming and Lyapunov theory, we transform the long-term optimization problem into an equivalent per-time-slot form. Subsequently, we recast the reformulated problem as a Markov Decision Process (MDP), which reflects the network dynamics. The MDP model, eventually, serves for training a Meta Twin Delayed Deep Deterministic Policy Gradient (MTD3) agent, in charge of adaptive resource allocation with respect to the MEC system variations derived from the mobility of the UAV and IoT devices. Simulations reveal the dominance of our proposed resource allocation approach over its Deep Reinforcement Learning (DRL)-powered counterparts, increasing computed task efficiency and reducing task buffer lengths.

Joint Long-Term Processed Task and Communication Delay Optimization in UAV-Assisted MEC Systems Using DQN

Sep 24, 2024

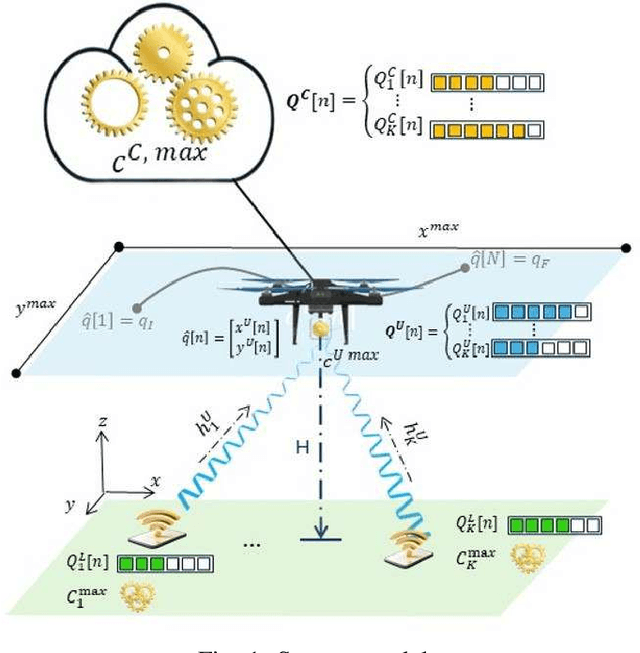

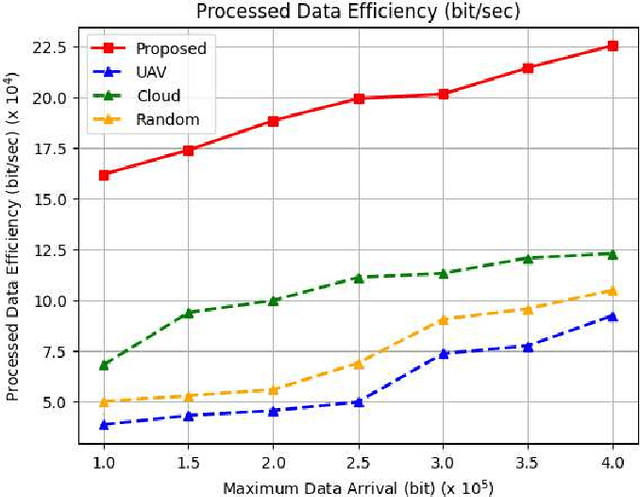

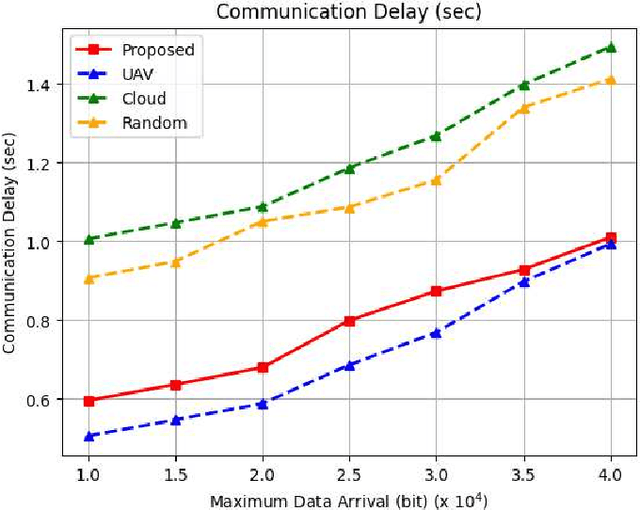

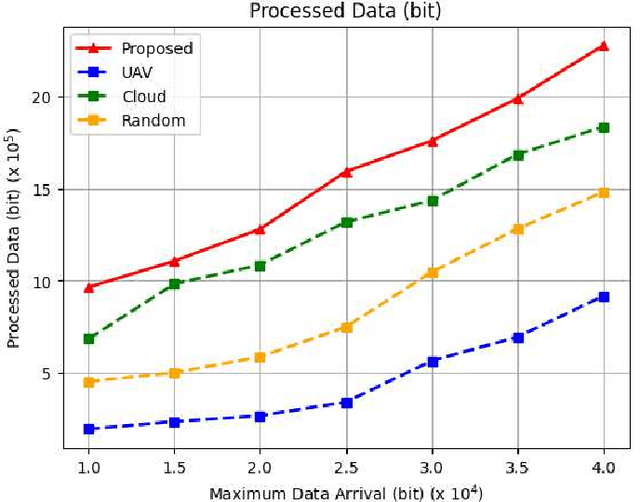

Abstract: Mobile Edge Computing (MEC) assisted by Unmanned Aerial Vehicle (UAV) has been widely investigated as a promising system for future Internet-of-Things (IoT) networks. In this context, delay-sensitive tasks of IoT devices may either be processed locally or offloaded for further processing to a UAV or to the cloud. This paper, by attributing task queues to each IoT device, the UAV, and the cloud, proposes a real-time resource allocation framework in a UAV-aided MEC system. Specifically, aimed at characterizing a long-term trade-off between the time-averaged aggregate processed data (PD) and the time-averaged aggregate communication delay (CD), a resource allocation optimization problem is formulated. This problem optimizes communication and computation resources as well as the UAV motion trajectory, while guaranteeing queue stability. To address this long-term time-averaged problem, a Lyapunov optimization framework is initially leveraged to obtain an equivalent short-term optimization problem. Subsequently, we reformulate the short-term problem in a Markov Decision Process (MDP) form, where a Deep Q Network (DQN) model is trained to optimize its variables. Extensive simulations demonstrate that the proposed resource allocation scheme improves the system performance by up to 36% compared to baseline models.
