Martin Schellenberger

Fully Convolutional Networks for Chip-wise Defect Detection Employing Photoluminescence Images

Oct 06, 2019

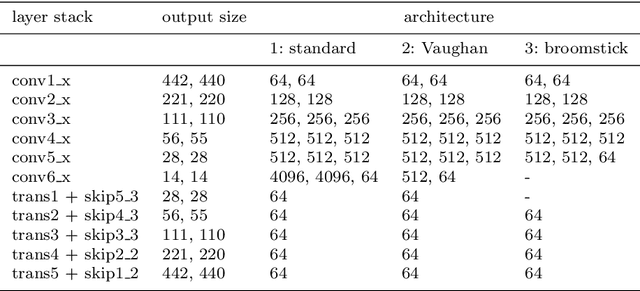

Abstract: Efficient quality control is indispensable in the manufacturing of light-emitting diodes (LEDs). Because defective LED chips may be traced back to different causes, time- and cost-intensive electrical and optical contact measurements are employed. Fast photoluminescence measurements, on the other hand, are commonly used to detect wafer separation damage but also hold the potential to enable efficient detection of all kinds of defective LED chips. On a photoluminescence image, every pixel corresponds to an LED chip's brightness after photoexcitation, revealing performance information. However, due to unevenly distributed brightness values and varying defect patterns, photoluminescence images are not yet employed for comprehensive defect detection. In this work, we show that fully convolutional networks, trained on a small dataset of photoluminescence images, can be used for chip-wise defect detection. Pixel-wise labels allow us to classify every chip as defective or not. Because the labels are measurement-based, they are easy to procure, and our experiments show that existing discrepancies between training images and labels do not hinder network training. Using weighted loss calculation, we were able to compensate for our highly imbalanced classes. Due to the consistent use of skip connections and residual shortcuts, our network is able to predict a variety of structures, from extensive defect clusters down to single defective LED chips.
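The weighted loss calculation mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state which weighting scheme or loss function the authors used, so the inverse-frequency weights and the binary cross-entropy below are assumptions chosen for illustration, and the function names `inverse_frequency_weights` and `weighted_bce` are hypothetical. Each pixel stands for one LED chip, with label 1 for defective and 0 for functional.

```python
import math


def inverse_frequency_weights(labels):
    """Return (w_ok, w_defect) so that both classes carry equal total weight.

    Assumption: inverse class frequency; the abstract does not name a scheme.
    """
    flat = [y for row in labels for y in row]
    n = len(flat)
    n_defect = sum(flat)
    n_ok = n - n_defect
    if n_defect == 0 or n_ok == 0:
        # Only one class present: weighting cannot rebalance anything.
        return 1.0, 1.0
    return n / (2.0 * n_ok), n / (2.0 * n_defect)


def weighted_bce(probs, labels, w_ok, w_defect, eps=1e-7):
    """Weighted binary cross-entropy averaged over all pixels (chips).

    probs[i][j] is the predicted probability that chip (i, j) is defective.
    """
    total = 0.0
    weight_sum = 0.0
    for p_row, y_row in zip(probs, labels):
        for p, y in zip(p_row, y_row):
            p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
            if y == 1:
                total += -w_defect * math.log(p)
                weight_sum += w_defect
            else:
                total += -w_ok * math.log(1.0 - p)
                weight_sum += w_ok
    return total / weight_sum


# Toy label map: one defective chip among eight.
labels = [[0, 0, 0, 0],
          [0, 0, 0, 1]]
w_ok, w_defect = inverse_frequency_weights(labels)

# A model that predicts "probably fine" everywhere misses the defect.
probs = [[0.1] * 4, [0.1] * 4]
loss_weighted = weighted_bce(probs, labels, w_ok, w_defect)
loss_unweighted = weighted_bce(probs, labels, 1.0, 1.0)
```

With these weights the single defective chip contributes as much to the loss as the seven functional chips combined, so a network that ignores the rare class is penalized more heavily than under an unweighted loss.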
