Martin Černý

Reducing Optimism Bias in Incomplete Cooperative Games

Feb 02, 2024

Abstract: Cooperative game theory has diverse applications in contemporary artificial intelligence, including domains like interpretable machine learning, resource allocation, and collaborative decision-making. However, specifying a cooperative game entails assigning values to exponentially many coalitions, and obtaining even a single value can be resource-intensive in practice. Yet simply leaving certain coalition values undisclosed introduces ambiguity regarding individual contributions to the collective grand coalition. This ambiguity often leads to players holding overly optimistic expectations, stemming from either inherent biases or strategic considerations, frequently resulting in collective claims exceeding the actual grand coalition value. In this paper, we present a framework aimed at optimizing the sequence for revealing coalition values, with the overarching goal of efficiently closing the gap between players' expectations and achievable outcomes in cooperative games. Our contributions are threefold: (i) we study the individual players' optimistic completions of games with missing coalition values along with the arising gap, and investigate its analytical characteristics that facilitate more efficient optimization; (ii) we develop methods to minimize this gap over classes of games with a known prior by disclosing values of additional coalitions in both offline and online fashion; and (iii) we empirically demonstrate the algorithms' performance in practical scenarios, together with an investigation into the typical order of revealing coalition values.

Using Behavior Objects to Manage Complexity in Virtual Worlds

Nov 09, 2015

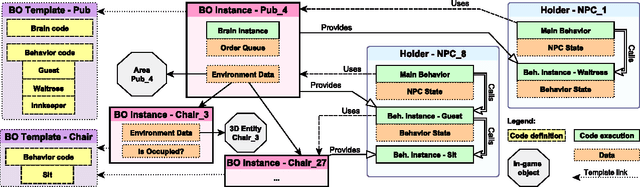

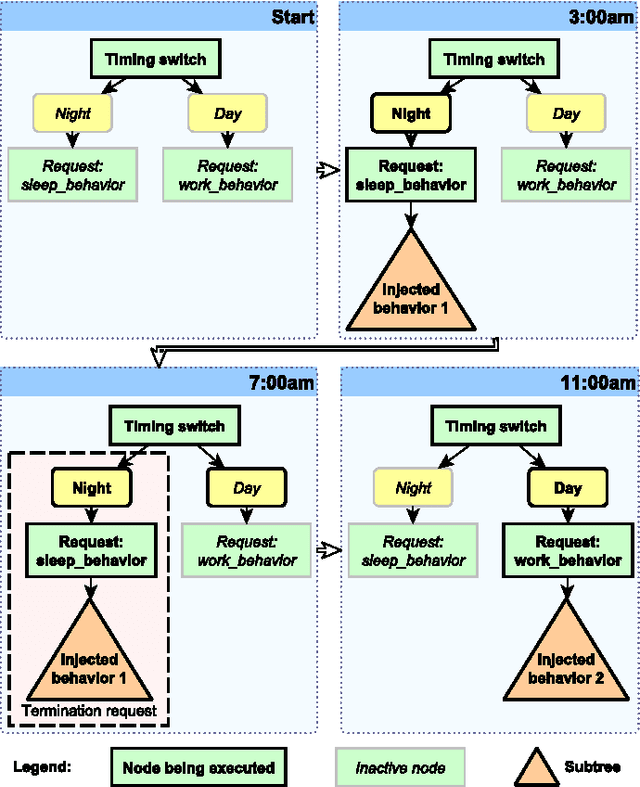

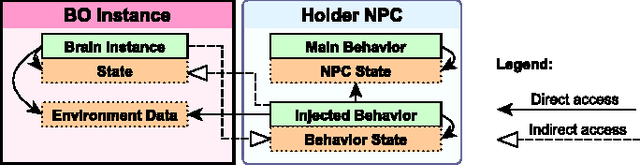

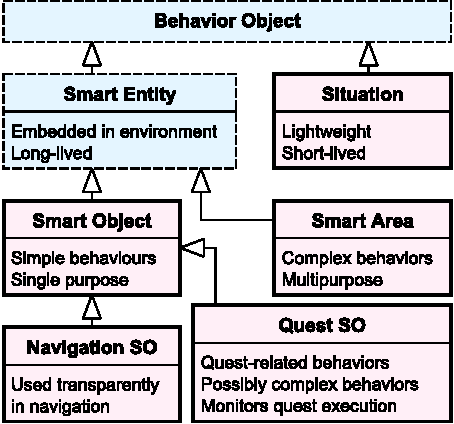

Abstract: The quality of high-level AI of non-player characters (NPCs) in commercial open-world games (OWGs) has been increasing over the past years. However, due to constraints specific to the game industry, this increase has been slow and has been driven by larger budgets rather than by the adoption of new, complex AI techniques. Most contemporary AI is still expressed as hard-coded scripts. The complexity and manageability of the script codebase are among the key limiting factors for further AI improvements. In this paper we address this issue. We present behavior objects, a general approach to the development of NPC behaviors for large OWGs. Behavior objects are inspired by object-oriented programming and extend the concept of smart objects. Our approach promotes encapsulation of data and code for multiple related behaviors in one place, hiding internal details and embedding intelligence in the environment. Behavior objects are a natural abstraction of five different techniques that we have implemented to manage AI complexity in an upcoming AAA OWG. We report the details of the implementations in the context of behavior trees and the lessons learned during development. Our work should serve as inspiration for AI architecture designers from both academia and industry.
