Martijn van Otterlo

Regular Decision Processes for Grid Worlds

Nov 09, 2021

Abstract: Markov decision processes are typically used for sequential decision making under uncertainty. For many aspects, however, ranging from constrained or safe specifications to various kinds of temporal (non-Markovian) dependencies in task and reward structures, extensions are needed. To that end, interest has grown in recent years in combinations of reinforcement learning and temporal logic, that is, combinations of flexible behavior learning methods with robust verification and guarantees. In this paper we describe an experimental investigation of the recently introduced regular decision processes, which support non-Markovian reward functions as well as non-Markovian transition functions. In particular, we provide a tool chain for regular decision processes, algorithmic extensions relating to online, incremental learning, an empirical evaluation of model-free and model-based solution algorithms, and applications in regular, but non-Markovian, grid worlds.

Personalization of Health Interventions using Cluster-Based Reinforcement Learning

Apr 10, 2018

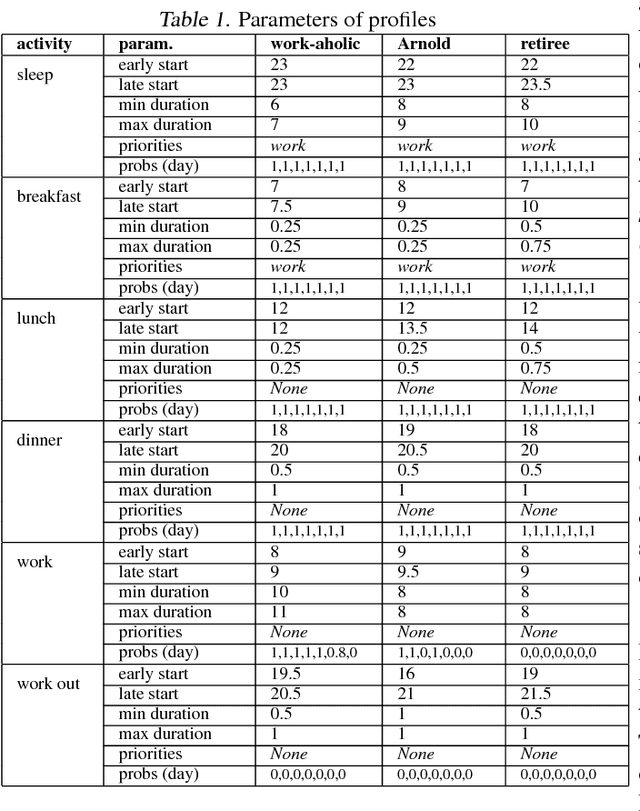

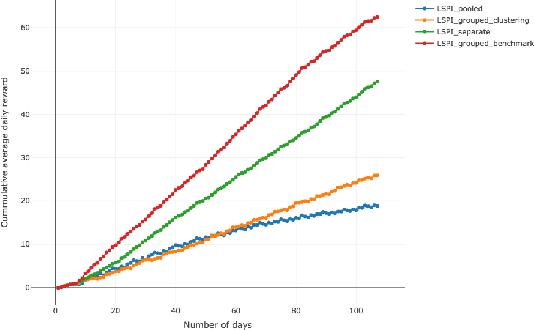

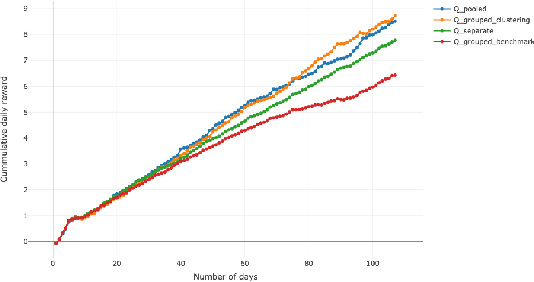

Abstract: Research has shown that personalization of health interventions can contribute to improved effectiveness. Reinforcement learning algorithms can be used to perform such tailoring using data that is collected about users. Learning is, however, very fragile for health interventions, as only limited time is available to learn from the user before disengagement takes place or before the opportunity to intervene passes. In this paper, we present a cluster-based reinforcement learning approach which learns across groups of users. Such an approach can speed up the learning process while still giving a level of personalization. The clustering algorithm uses a distance metric over traces of states and rewards. We apply both online and batch learning to learn policies over the clusters and introduce a publicly available simulator which we have developed to evaluate the approach. The results show that batch learning clearly outperforms online learning. Furthermore, clustering can be beneficial provided that a proper clustering is found.

Gatekeeping Algorithms with Human Ethical Bias: The ethics of algorithms in archives, libraries and society

Jan 05, 2018

Abstract: In the age of algorithms, I focus on the question of how to ensure that algorithms that will take over many of our familiar archival and library tasks will behave according to human ethical norms that have evolved over many years. I start by characterizing physical archives in the context of related institutions such as libraries and museums. In this setting I analyze how ethical principles, in particular about access to information, have been formalized and communicated in the form of ethical codes, or: codes of conduct. After that I describe two main developments: digitalization, in which physical aspects of the world are turned into digital data, and algorithmization, in which intelligent computer programs turn this data into predictions and decisions. Both affect interactions that were once physical but are now digital. In this new setting I survey and analyze the ethical aspects of algorithms and how they shape a vision on the future of archivists and librarians, in the form of algorithmic documentalists, or: codementalists. Finally I outline a general research strategy, called IntERMEeDIUM, to obtain algorithms that obey the human ethical values encoded in codes of ethics.

From Algorithmic Black Boxes to Adaptive White Boxes: Declarative Decision-Theoretic Ethical Programs as Codes of Ethics

Nov 16, 2017

Abstract: Ethics of algorithms is an emerging topic in various disciplines such as social science, law, and philosophy, but also artificial intelligence (AI). The value alignment problem expresses the challenge of (machine) learning values that are, in some way, aligned with human requirements or values. In this paper I argue for looking at how humans have formalized and communicated values, in professional codes of ethics, and for exploring declarative decision-theoretic ethical programs (DDTEP) to formalize codes of ethics. This renders machine ethical reasoning and decision-making, as well as learning, more transparent and hopefully more accountable. The paper includes proof-of-concept examples of known toy dilemmas and gatekeeping domains such as archives and libraries.
