Markus Kneer

Playing the Blame Game with Robots

Feb 08, 2021

Abstract: Recent research shows, somewhat astonishingly, that people are willing to ascribe moral blame to AI-driven systems when they cause harm [1]-[4]. In this paper, we explore the moral-psychological underpinnings of these findings. Our hypothesis was that people ascribe moral blame to AI systems because they consider them capable of entertaining inculpating mental states (what the law calls mens rea). To explore this hypothesis, we created a scenario in which an AI system runs a risk of poisoning people by using a novel type of fertilizer. Manipulating the computational (or quasi-cognitive) abilities of the AI system in a between-subjects design, we tested people's willingness to ascribe to the AI system both knowledge of a substantial risk of harm (i.e., recklessness) and blame. Furthermore, we investigated whether the ascription of recklessness and blame to the AI system would influence the perceived blameworthiness of the system's user (or owner). In an experiment with 347 participants, we found (i) that people are willing to ascribe blame to AI systems in contexts of recklessness, (ii) that blame ascriptions depend strongly on the willingness to attribute recklessness, and (iii) that the latter, in turn, depends on the perceived "cognitive" capacities of the system. Furthermore, our results suggest (iv) that the higher the computational sophistication of the AI system, the more blame is shifted from the human user to the AI system.

Guilty Artificial Minds

Jan 24, 2021

Abstract: The concepts of blameworthiness and wrongness are of fundamental importance in human moral life. But to what extent are humans disposed to blame artificially intelligent agents, and to what extent will they judge their actions to be morally wrong? To make progress on these questions, we adopted two novel strategies. First, we break down attributions of blame and wrongness into more basic judgments about the epistemic and conative state of the agent and the consequences of the agent's actions. In this way, we can trace any differences in how participants treat artificial agents back to differences in these more basic judgments. Our second strategy is to compare attributions of blame and wrongness across human, artificial, and group agents (corporations). Others have compared attributions of blame and wrongness between human and artificial agents, but the addition of group agents is significant because these agents seem to provide a clear middle ground between human agents (for whom the notions of blame and wrongness were created) and artificial agents (for whom the question remains open).

Implementations in Machine Ethics: A Survey

Jan 21, 2020

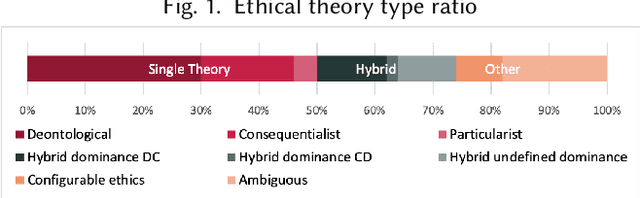

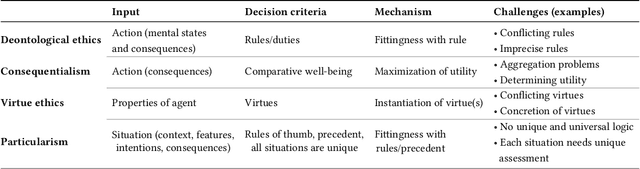

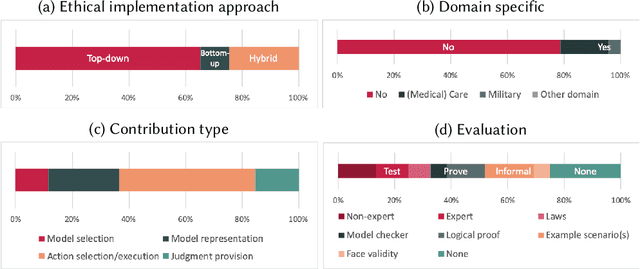

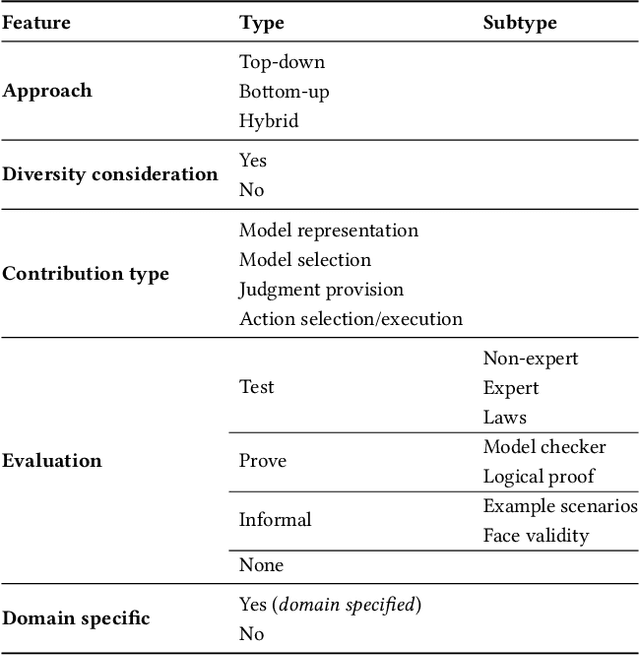

Abstract: Increasingly complex and autonomous systems require machine ethics to maximize the benefits and minimize the risks to society arising from the new technology. It is challenging to decide which type of ethical theory to employ and how to implement it effectively. This survey provides a threefold contribution. Firstly, it introduces a taxonomy to analyze the field of machine ethics from an ethical, implementational, and technical perspective. Secondly, an exhaustive selection and description of relevant works is presented. Thirdly, applying the new taxonomy to the selected works, dominant research patterns and lessons for the field are identified, and future directions for research are suggested.
