Markus Eberts

An End-to-end Model for Entity-level Relation Extraction using Multi-instance Learning

Feb 11, 2021

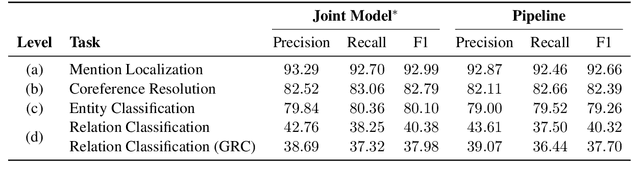

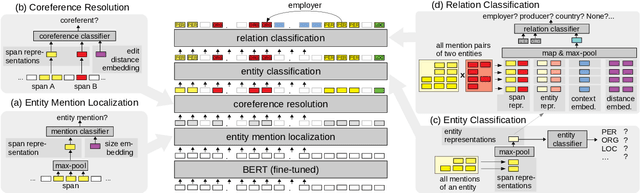

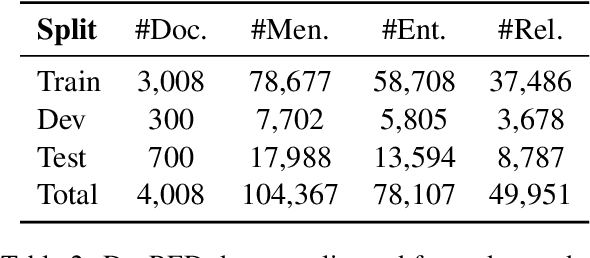

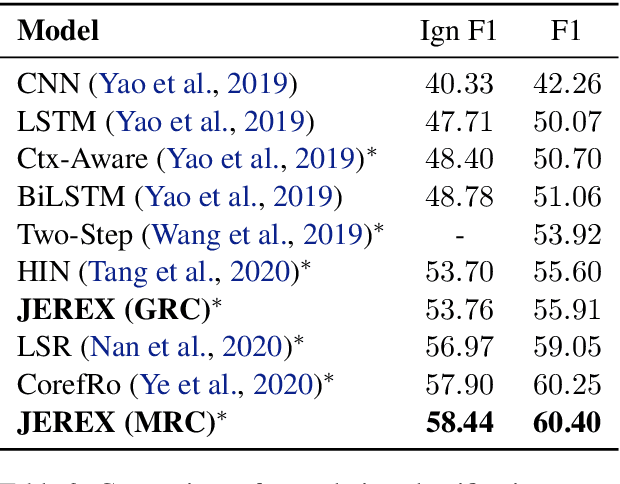

Abstract: We present a joint model for entity-level relation extraction from documents. In contrast to other approaches, which focus on local intra-sentence mention pairs and thus require mention-level annotations, our model operates at the entity level. To do so, we follow a multi-task approach that builds upon coreference resolution and gathers relevant signals via multi-instance learning with multi-level representations combining global entity and local mention information. We achieve state-of-the-art relation extraction results on the DocRED dataset and report the first entity-level end-to-end relation extraction results for future reference. Finally, our experimental results suggest that a joint approach is on par with task-specific learning while being more efficient due to shared parameters and training steps.
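
As a rough illustration of the multi-instance idea in this abstract, the sketch below treats all mention pairs of two entities as a bag and pools their per-relation scores into one entity-level score. The element-wise max pooling, the function names, and the toy scorer are assumptions made for illustration only; the abstract does not specify the pooling operator or the scoring model.

```python
from itertools import product


def entity_pair_scores(mentions_a, mentions_b, score_mention_pair):
    """Pool mention-pair scores into one score per relation type.

    `score_mention_pair` is a hypothetical callable that returns a list of
    scores, one per relation type, for a single mention pair.
    """
    # The "bag": one score vector per (mention of A, mention of B) pair.
    bag = [score_mention_pair(m_a, m_b)
           for m_a, m_b in product(mentions_a, mentions_b)]
    # Assumed aggregation: element-wise max over the bag, so the strongest
    # mention pair decides each relation score at the entity level.
    return [max(column) for column in zip(*bag)]


def toy_scorer(m_a, m_b):
    # Hypothetical stand-in for a learned classifier (two relation types).
    return [len(m_a), len(m_b)]


scores = entity_pair_scores(["Marie Curie", "she"],
                            ["Warsaw", "the city"],
                            toy_scorer)
```

The point of the design is that only entity-level relation labels are needed: no individual mention pair has to be annotated, because the bag as a whole carries the label.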

Span-based Joint Entity and Relation Extraction with Transformer Pre-training

Oct 08, 2019

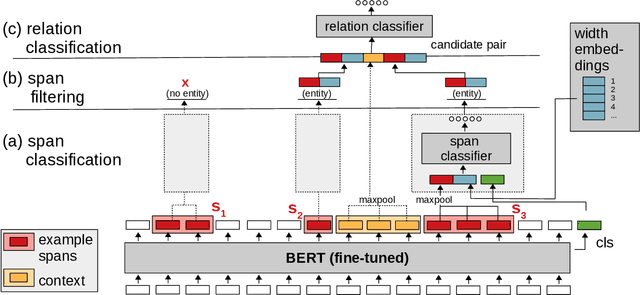

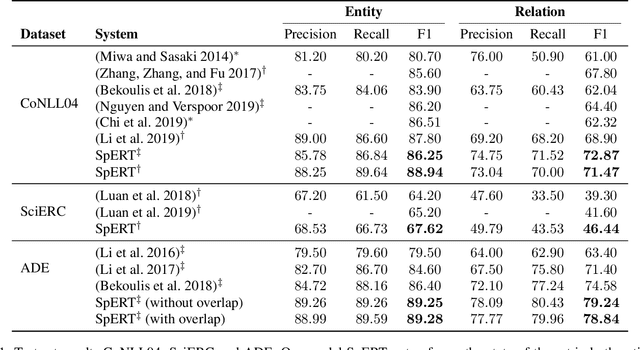

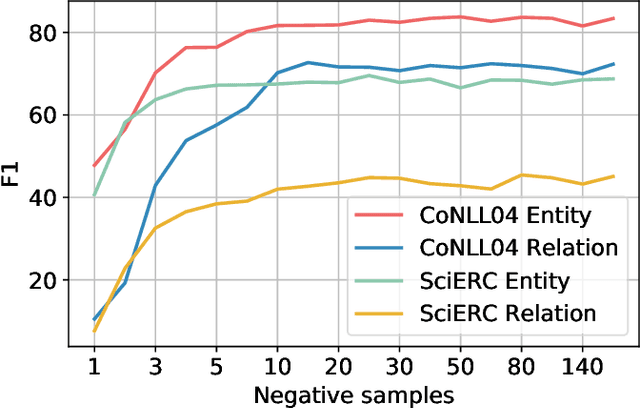

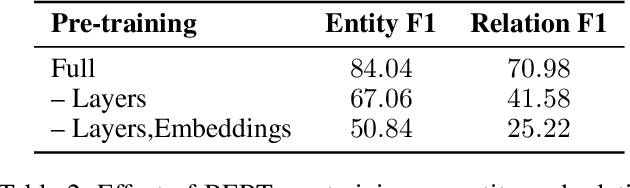

Abstract: We introduce SpERT, an attention model for span-based joint entity and relation extraction. Our approach employs the pre-trained Transformer network BERT as its core. We use BERT embeddings as shared inputs for lightweight reasoning, which features entity recognition and filtering, as well as relation classification with a localized, marker-free context representation. The model is trained on strong within-sentence negative samples, which are efficiently extracted in a single BERT pass. These aspects facilitate a search over all spans in the sentence. In ablation studies, we demonstrate the benefits of pre-training, strong negative sampling, and localized context. Our model outperforms prior work by up to 4.7% F1 score on several datasets for joint entity and relation extraction.
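
The span search and within-sentence negative sampling mentioned in this abstract can be sketched as follows. This is a minimal illustration, not the SpERT implementation: the function names, the `max_len` and `k` parameters, the half-open `(start, end)` span convention, and uniform random sampling are all assumptions.

```python
import random


def enumerate_spans(tokens, max_len):
    """Return every (start, end) token span up to max_len tokens, end exclusive."""
    n = len(tokens)
    return [(start, end)
            for start in range(n)
            for end in range(start + 1, min(start + max_len, n) + 1)]


def sample_negative_spans(tokens, gold_spans, max_len, k, seed=0):
    """Draw up to k spans from the same sentence that are not gold entities."""
    gold = set(gold_spans)
    candidates = [s for s in enumerate_spans(tokens, max_len) if s not in gold]
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    return rng.sample(candidates, min(k, len(candidates)))


tokens = ["Marie", "Curie", "was", "born", "in", "Warsaw"]
gold_spans = [(0, 2), (5, 6)]  # "Marie Curie", "Warsaw"
all_spans = enumerate_spans(tokens, max_len=2)
negatives = sample_negative_spans(tokens, gold_spans, max_len=2, k=4)
```

Because the negatives come from the same sentence as the gold entities, they include partial overlaps such as `(0, 1)` ("Marie"), which are harder to reject than spans drawn from unrelated text.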
