Marko van Eekelen

Open University of the Netherlands, Radboud University

What is understandable in Bayesian network explanations?

Oct 04, 2021

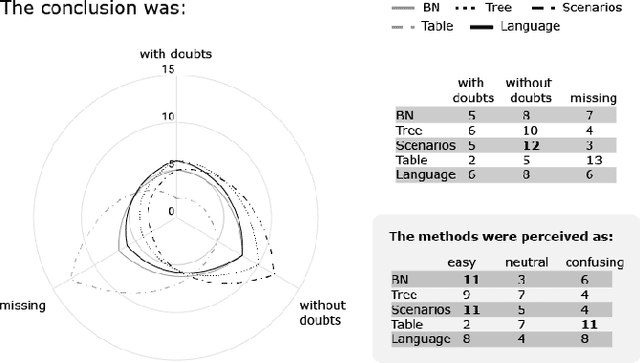

Abstract:Explaining predictions from Bayesian networks, for example to physicians, is non-trivial. Various explanation methods for Bayesian network inference have appeared in literature, focusing on different aspects of the underlying reasoning. While there has been a lot of technical research, there is very little known about how well humans actually understand these explanations. In this paper, we present ongoing research in which four different explanation approaches were compared through a survey by asking a group of human participants to interpret the explanations.

Sim-Env: Decoupling OpenAI Gym Environments from Simulation Models

Feb 22, 2021

Abstract:Reinforcement learning (RL) is one of the most active fields of AI research. Despite the interest demonstrated by the research community in reinforcement learning, the development methodology still lags behind, with a severe lack of standard APIs to foster the development of RL applications. OpenAI Gym is probably the most used environment to develop RL applications and simulations, but most of the abstractions proposed in such a framework are still assuming a semi-structured methodology. This is particularly relevant for agent-based models whose purpose is to analyse adaptive behaviour displayed by self-learning agents in the simulation. In order to bridge this gap, we present a workflow and tools for the decoupled development and maintenance of multi-purpose agent-based models and derived single-purpose reinforcement learning environments, enabling the researcher to swap out environments with ones representing different perspectives or different reward models, all while keeping the underlying domain model intact and separate. The Sim-Env Python library generates OpenAI-Gym-compatible reinforcement learning environments that use existing or purposely created domain models as their simulation back-ends. Its design emphasizes ease-of-use, modularity and code separation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge