Mark Henry

Evaluating Stability of Unreflective Alignment

Aug 27, 2024

Abstract: Many theoretical obstacles to AI alignment are consequences of reflective stability - the problem of designing alignment mechanisms that the AI would not disable if given the option. However, problems stemming from reflective stability are not obviously present in current LLMs, leading to disagreement over whether they will need to be solved to enable safe delegation of cognitive labor. In this paper, we propose Counterfactual Priority Change (CPC) destabilization as a mechanism by which reflective stability problems may arise in future LLMs. We describe two risk factors for CPC-destabilization: 1) CPC-based stepping back and 2) preference instability. We develop preliminary evaluations for each of these risk factors, and apply them to frontier LLMs. Our findings indicate that in current LLMs, increased scale and capability are associated with increases in both CPC-based stepping back and preference instability, suggesting that CPC-destabilization may cause reflective stability problems in future LLMs.

EventScore: An Automated Real-time Early Warning Score for Clinical Events

Feb 14, 2021

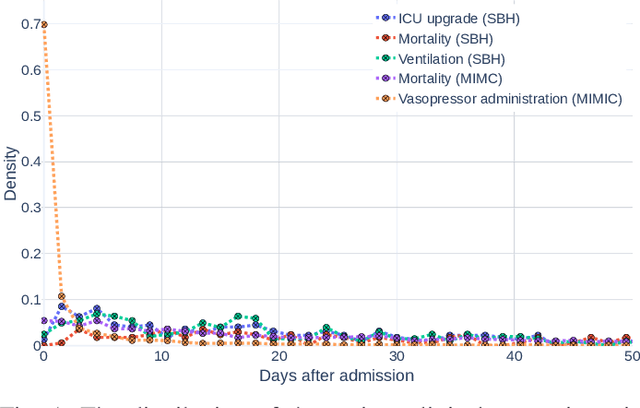

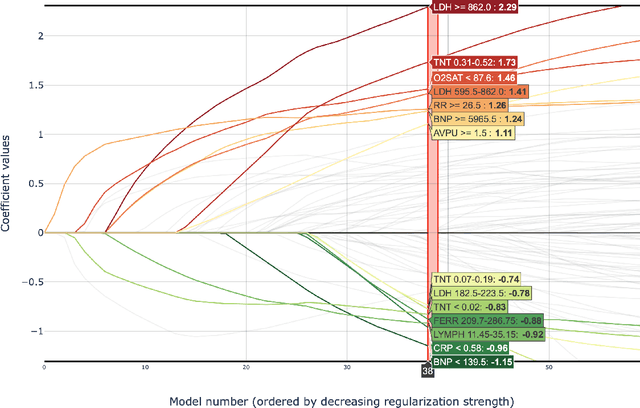

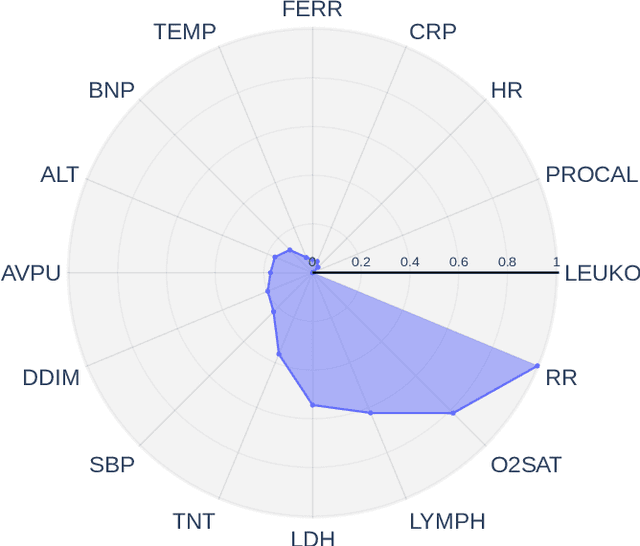

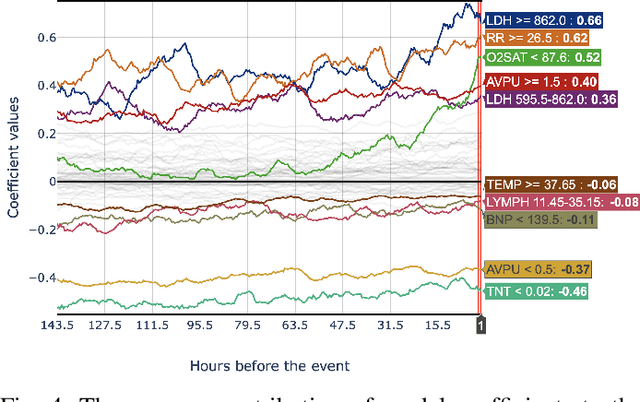

Abstract: Early prediction of patients at risk of clinical deterioration can help physicians intervene and alter their clinical course towards better outcomes. In addition to the accuracy requirement, early warning systems must make the predictions early enough to give physicians enough time to intervene. Interpretability is also one of the challenges when building such systems since being able to justify the reasoning behind model decisions is desirable in clinical practice. In this work, we built an interpretable model for the early prediction of various adverse clinical events indicative of clinical deterioration. The model is evaluated on two datasets and four clinical events. The first dataset is collected in a predominantly COVID-19 positive population at Stony Brook Hospital. The second dataset is the MIMIC III dataset. The model was trained to provide early warning scores for ventilation, ICU transfer, and mortality prediction tasks on the Stony Brook Hospital dataset and to predict mortality and the need for vasopressors on the MIMIC III dataset. Our model first separates each feature into multiple ranges and then uses logistic regression with lasso penalization to select the subset of ranges for each feature. The model training is completely automated and doesn't require expert knowledge like other early warning scores. We compare our model to the Modified Early Warning Score (MEWS) and quick SOFA (qSOFA), commonly used in hospitals. We show that our model outperforms these models in the area under the receiver operating characteristic curve (AUROC) while having a similar or better median detection time on all clinical events, even when using fewer features. Unlike MEWS and qSOFA, our model can be entirely automated without requiring any manually recorded features. We also show that discretization improves model performance by comparing our model to a baseline logistic regression model.
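The discretize-then-lasso idea in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not say how ranges are chosen, how many are used, or which optimizer fits the model, so the quantile cut points, the proximal-gradient solver, the hyperparameter values, and all function names below (`bin_edges`, `one_hot_bins`, `fit_l1_logistic`, `risk`) are assumptions made for illustration.

```python
import math


def bin_edges(values, n_bins):
    """Assumed binning rule: quantile-style cut points giving n_bins ranges."""
    s = sorted(values)
    return [s[(len(s) * k) // n_bins] for k in range(1, n_bins)]


def one_hot_bins(value, edges):
    """Indicator vector marking the range that `value` falls into."""
    idx = sum(value >= e for e in edges)
    return [1.0 if i == idx else 0.0 for i in range(len(edges) + 1)]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def soft_threshold(w, t):
    """Proximal step for the L1 penalty: shrink w toward zero by t."""
    return math.copysign(max(abs(w) - t, 0.0), w)


def fit_l1_logistic(X, y, lam=0.01, lr=0.5, epochs=500):
    """L1-penalized logistic regression via proximal gradient descent.

    The intercept b is left unpenalized; weights pushed to exactly zero
    correspond to ranges the penalty has dropped from the score.
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            grad_b += err
            for j in range(d):
                grad_w[j] += err * xi[j]
        b -= lr * grad_b / n
        w = [soft_threshold(wj - lr * gj / n, lr * lam)
             for wj, gj in zip(w, grad_w)]
    return w, b


def risk(value, edges, w, b):
    """Predicted event probability for a single raw feature value."""
    x = one_hot_bins(value, edges)
    return sigmoid(b + sum(wj * xj for wj, xj in zip(w, x)))


if __name__ == "__main__":
    # Synthetic, made-up data: one vital-sign-like feature, event when >= 120.
    values = list(range(40, 140))
    labels = [1.0 if v >= 120 else 0.0 for v in values]
    edges = bin_edges(values, 5)
    w, b = fit_l1_logistic([one_hot_bins(v, edges) for v in values], labels)
    print("cut points:", edges)
    print("per-range weights:", [round(wj, 3) for wj in w])
```

Because each weight belongs to one range of one feature, the fitted model reads like a conventional point-based score: a patient's risk is the sum of the weights of the ranges their measurements fall into. With several features, the indicator vectors for each feature would simply be concatenated before fitting.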
