Mark Cesar

Unifying Privacy Loss Composition for Data Analytics

Apr 15, 2020

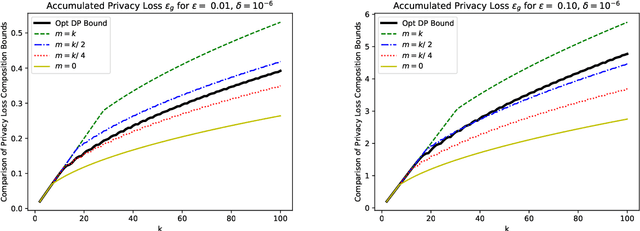

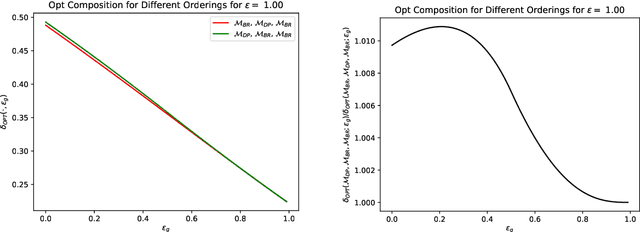

Abstract: Differential privacy (DP) provides rigorous privacy guarantees on individuals' data while still allowing accurate statistics to be computed on the overall, sensitive dataset. To design a private system, one must first design private algorithms that can quantify the privacy loss of each outcome that is released. However, private algorithms that inject noise into the computation are not sufficient on their own to protect individuals' data, because many noisy results ultimately concentrate around the true, non-privatized result. Hence several works have provided precise formulas for how the privacy loss accumulates over multiple interactions with private algorithms. These formulas either provide very general bounds on the privacy loss, at the cost of being overly pessimistic for certain types of private algorithms, or are too narrow in scope to apply to general privacy systems. In this work, we unify existing privacy loss composition bounds for special classes of differentially private algorithms with general DP composition bounds. In particular, we provide strong privacy loss bounds when an analyst may select pure DP, bounded range (e.g. exponential mechanisms), or concentrated DP mechanisms in any order. We also provide optimal privacy loss bounds that apply when an analyst selects pure DP and bounded range mechanisms in a batch, i.e. non-adaptively. Further, when an analyst selects mechanisms within each class adaptively, we show a difference in privacy loss between different, predetermined orderings of pure DP and bounded range mechanisms. Lastly, we compare the composition bounds of Laplace and Gaussian mechanisms based on histogram datasets.
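To make "how the privacy loss accumulates" concrete, the sketch below compares two standard general-purpose bounds from the DP literature for k pure ε-DP mechanisms: basic composition (total loss kε) and the advanced composition theorem of Dwork, Rothblum, and Vadhan. These are not the unified bounds of this paper, only the kind of general bound the abstract describes as potentially pessimistic. The function names and the parameter values are illustrative assumptions.

```python
import math


def basic_composition(eps: float, k: int) -> float:
    """Total epsilon after composing k pure eps-DP mechanisms (basic bound)."""
    return k * eps


def advanced_composition(eps: float, k: int, delta_prime: float) -> float:
    """Total epsilon under advanced composition.

    The composed mechanism is (eps_total, delta_prime)-DP, where
    eps_total = eps * sqrt(2k ln(1/delta_prime)) + k * eps * (e^eps - 1).
    """
    if not 0.0 < delta_prime < 1.0:
        raise ValueError("delta_prime must lie in (0, 1)")
    return (eps * math.sqrt(2 * k * math.log(1 / delta_prime))
            + k * eps * (math.exp(eps) - 1))


if __name__ == "__main__":
    # Illustrative values only (assumed, not taken from the paper).
    eps, k, delta_prime = 0.1, 100, 1e-6
    print("basic:   ", basic_composition(eps, k))
    print("advanced:", advanced_composition(eps, k, delta_prime))
```

For small ε and many queries the advanced bound grows roughly with the square root of k rather than linearly, at the price of a small failure probability δ'. Bounds specialized to a mechanism class can be tighter still.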
