Marisa Klopper

Automatic Tuberculosis and COVID-19 cough classification using deep learning

May 11, 2022

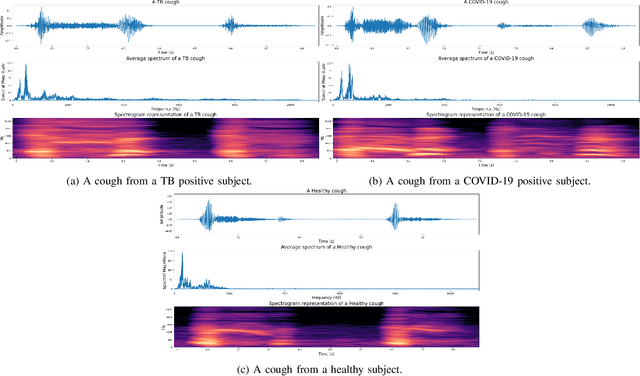

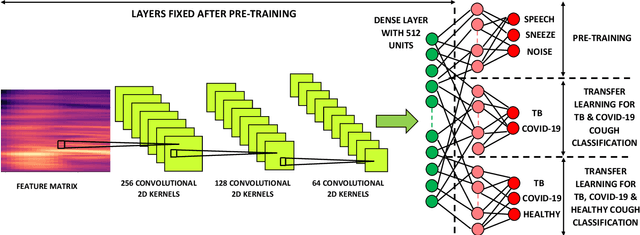

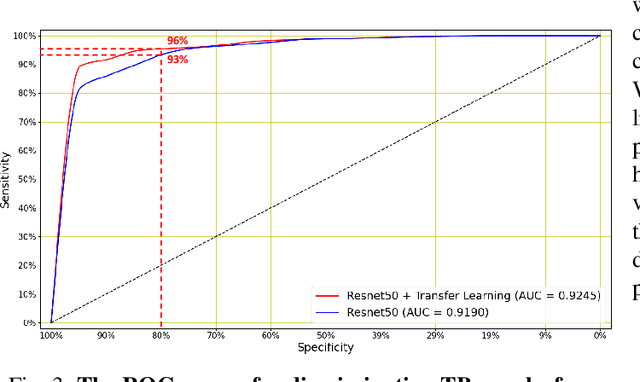

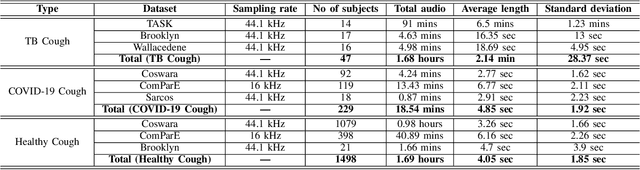

Abstract: We present a deep learning based automatic cough classifier which can discriminate tuberculosis (TB) coughs from COVID-19 coughs and healthy coughs. Both TB and COVID-19 are respiratory diseases, have cough as a predominant symptom and claim thousands of lives each year. The cough audio recordings were collected in both indoor and outdoor settings and were also uploaded using smartphones by subjects around the globe, and thus contain various levels of noise. The cough data include 1.68 hours of TB coughs, 18.54 minutes of COVID-19 coughs and 1.69 hours of healthy coughs from 47 TB patients, 229 COVID-19 patients and 1498 healthy subjects, and were used to train and evaluate a CNN, an LSTM and a Resnet50. These three deep architectures were also pre-trained on 2.14 hours of sneeze, 2.91 hours of speech and 2.79 hours of noise for improved performance. Class imbalance in our dataset was addressed by applying the SMOTE data balancing technique and by using performance metrics such as F1-score and AUC. Our study shows that the highest F1-scores of 0.9259 and 0.8631 were achieved by a pre-trained Resnet50 for the two-class (TB vs COVID-19) and three-class (TB vs COVID-19 vs healthy) cough classification tasks, respectively. The application of deep transfer learning has improved the classifiers' performance and made them more robust, as they generalise better over the cross-validation folds. Their performance exceeds the TB triage test requirements set by the World Health Organisation (WHO). The features producing the best performance contain higher-order MFCCs, suggesting that the differences between TB and COVID-19 coughs are not perceivable by the human ear. This type of cough audio classification is non-contact and cost-effective and can easily be deployed on a smartphone, so it can be an excellent tool for both TB and COVID-19 screening.
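The SMOTE balancing step mentioned in this abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `smote`, its parameters and the toy feature vectors are assumptions made for illustration, and a real pipeline would more likely use an established library. The idea is that each synthetic minority sample is placed at a random point on the line segment between a minority sample and one of its nearest minority-class neighbours.

```python
import random


def smote(minority, n_new, k=5, seed=0):
    """Return n_new synthetic points interpolated between minority samples.

    minority: list of feature vectors (lists of floats), at least two of them.
    k: number of nearest minority neighbours to choose from (hypothetical default).
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        x = rng.choice(minority)
        # Rank the other minority samples by squared Euclidean distance to x.
        neighbours = sorted(
            (p for p in minority if p is not x),
            key=lambda p: sum((a - b) ** 2 for a, b in zip(x, p)),
        )[:k]
        nb = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from x towards nb
        synthetic.append([a + gap * (b - a) for a, b in zip(x, nb)])
    return synthetic


# Toy two-dimensional "features" standing in for the minority (e.g. COVID-19) class.
minority_features = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
extra = smote(minority_features, n_new=6, k=2)
print(len(extra), "synthetic minority samples generated")
```

Oversampling of this kind is normally applied to the training folds only, so that synthetic points never leak into the data used for evaluation.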

Wake-Cough: cough spotting and cougher identification for personalised long-term cough monitoring

Oct 07, 2021

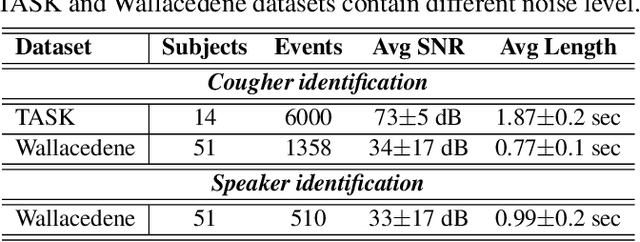

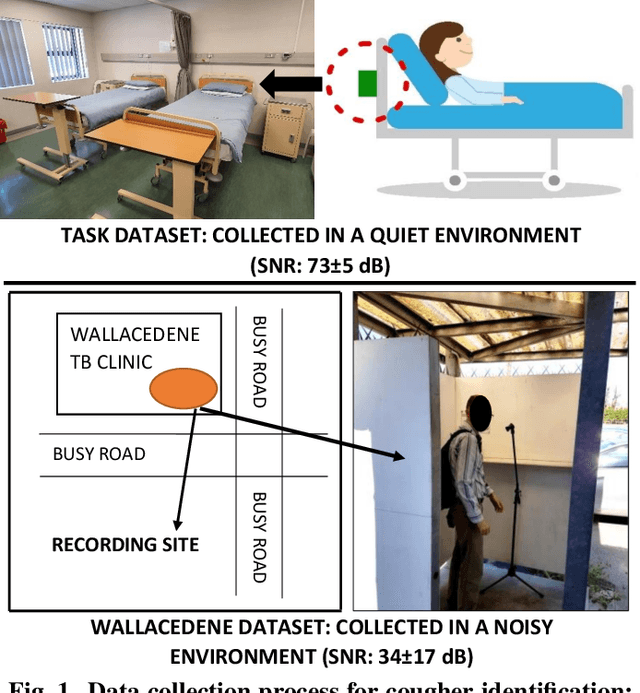

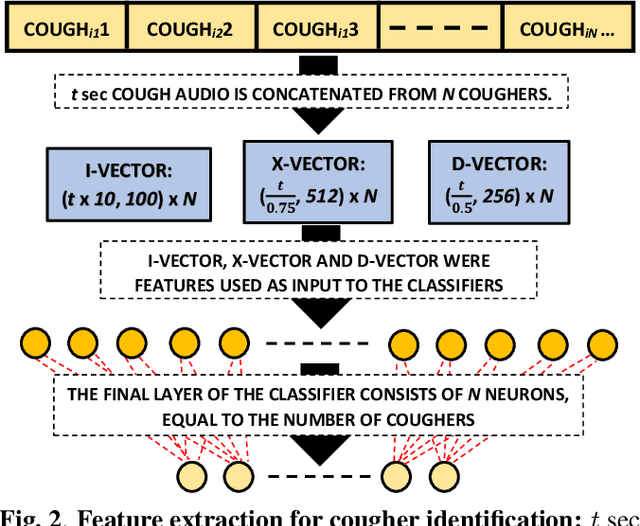

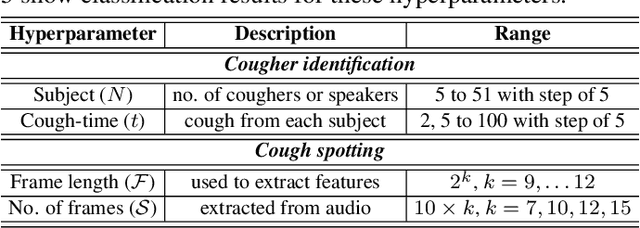

Abstract: We present 'wake-cough', an application of wake-word spotting to coughs using a Resnet50 and of cougher identification using i-vectors, for the purpose of a long-term, personalised cough monitoring system. Coughs, recorded in a quiet (73$\pm$5 dB) and a noisy (34$\pm$17 dB) environment, were used to extract i-vectors, x-vectors and d-vectors, which served as features for the classifiers. The system achieves 90.02% accuracy with an MLP when discriminating between 51 coughers using 2-second cough segments in the noisy environment. When discriminating between 5 and 14 coughers using longer (100-second) segments in the quiet environment, this accuracy rises to 99.78% and 98.39% respectively. Unlike in speech, i-vectors outperform x-vectors and d-vectors in identifying coughers. These coughs were added as an extra class to the Google Speech Commands dataset, and features were extracted by preserving the end-to-end time-domain information in an event. The highest accuracy of 88.58% in spotting coughs among 35 other trigger phrases is achieved using a Resnet50. Wake-cough represents a personalised, non-intrusive cough monitoring system which is power-efficient, since a wake-word detection method can keep a smartphone-based monitoring device mostly dormant. This makes wake-cough extremely attractive in multi-bed ward environments for monitoring patients' long-term recovery from lung ailments such as tuberculosis and COVID-19.

Automatic Cough Classification for Tuberculosis Screening in a Real-World Environment

Mar 23, 2021

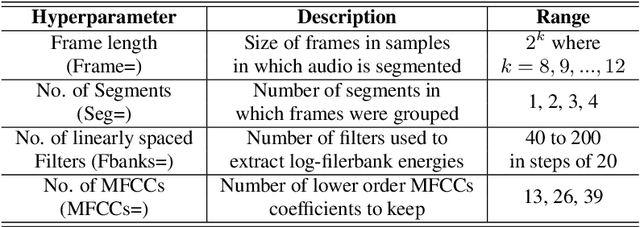

Abstract: We present first results showing that it is possible to automatically discriminate between the coughing sounds produced by patients with tuberculosis (TB) and those produced by patients with other lung ailments in a real-world noisy environment. Our experiments are based on a dataset of cough recordings obtained in a real-world clinic setting from 16 patients confirmed to be suffering from TB and 33 patients suffering from respiratory conditions confirmed as other than TB. We have trained and evaluated several machine learning classifiers, including logistic regression (LR), support vector machines (SVM), k-nearest neighbour (KNN), multilayer perceptrons (MLP) and convolutional neural networks (CNN), inside a nested k-fold cross-validation and find that, although classification is possible in all cases, the best performance is achieved using the LR classifier. In combination with feature selection by sequential forward search (SFS), our best LR system achieves an area under the ROC curve (AUC) of 0.94 using 23 features selected from a set of 78 high-resolution mel-frequency cepstral coefficients (MFCCs). This system achieves a sensitivity of 93% at a specificity of 95% and thus exceeds the 90% sensitivity at 70% specificity considered by the WHO to be the minimal requirement for a community-based TB triage test. We conclude that automatic classification of cough audio is promising as a viable means of low-cost, easily deployable front-line screening for TB, which will greatly benefit developing countries with a heavy TB burden.
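The comparison against the WHO triage specification quoted in this abstract (90% sensitivity at 70% specificity) can be sketched as a small check over classifier scores. The function names, the toy labels and the toy scores below are hypothetical; only the two threshold values come from the abstract. The sketch sweeps every distinct score as a decision threshold and asks whether any operating point meets both requirements at once.

```python
def operating_points(labels, scores):
    """Return (threshold, sensitivity, specificity) for each distinct score.

    labels: 1 for a positive (e.g. TB) subject, 0 for a negative subject.
    A subject is predicted positive when its score is >= the threshold.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = []
    for t in sorted(set(scores)):
        tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= t)
        tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < t)
        points.append((t, tp / n_pos, tn / n_neg))
    return points


def meets_triage_spec(labels, scores, min_sens=0.90, min_spec=0.70):
    """True if some threshold reaches both the sensitivity and specificity floors."""
    return any(
        sens >= min_sens and spec >= min_spec
        for _, sens, spec in operating_points(labels, scores)
    )


# Toy example: three positive and four negative subjects with made-up scores.
toy_labels = [1, 1, 1, 0, 0, 0, 0]
toy_scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2, 0.1]
print(meets_triage_spec(toy_labels, toy_scores))
```

In a nested cross-validation such as the one described above, the threshold would be chosen on the inner folds and the check applied to held-out scores.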

COVID-19 Cough Classification using Machine Learning and Global Smartphone Recordings

Dec 02, 2020

Abstract: We present a machine learning based COVID-19 cough classifier which is able to discriminate COVID-19 positive coughs from both COVID-19 negative and healthy coughs recorded on a smartphone. This type of screening is non-contact and easily applied, and could help reduce workload in testing centers as well as limit transmission by recommending early self-isolation to those who have a cough suggestive of COVID-19. The two datasets used in this study include subjects from all six continents and contain both forced and natural coughs. The publicly available Coswara dataset contains 92 COVID-19 positive and 1079 healthy subjects, while the second, smaller dataset was collected mostly in South Africa and contains 8 COVID-19 positive and 13 COVID-19 negative subjects who have undergone a SARS-CoV laboratory test. Dataset skew was addressed by applying the synthetic minority oversampling technique (SMOTE), and leave-p-out cross-validation was used to train and evaluate the classifiers. Logistic regression (LR), support vector machines (SVM), multilayer perceptrons (MLP), convolutional neural networks (CNN), long short-term memory (LSTM) and a residual-based neural network architecture (Resnet50) were considered as classifiers. Our results show that the Resnet50 classifier was best able to discriminate between the COVID-19 positive and the healthy coughs, with an area under the ROC curve (AUC) of 0.98, while an LSTM classifier was best able to discriminate between the COVID-19 positive and COVID-19 negative coughs, with an AUC of 0.94. The LSTM classifier achieved these results using 13 features selected by sequential forward search (SFS). Since it can be implemented on a smartphone, cough audio classification is cost-effective and easy to apply and deploy, and is therefore potentially a useful and viable means of non-contact COVID-19 screening.
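The AUC figures reported in these abstracts can be read as the probability that a randomly chosen positive cough receives a higher classifier score than a randomly chosen negative one. A minimal sketch of that pairwise definition follows; the function name `roc_auc` and the toy data are assumptions for illustration, and a library routine would normally be used in practice.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores.

    Counts the fraction of (positive, negative) pairs in which the positive
    sample scores higher; ties count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


# Toy example: two positive and two negative subjects with made-up scores.
print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))  # 0.75
```

Because this measure depends only on how scores are ranked and not on class proportions, it is a natural companion to SMOTE when the dataset is as skewed as the ones described here.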
