Marc Kassubeck

N-SfC: Robust and Fast Shape Estimation from Caustic Images

Dec 13, 2021

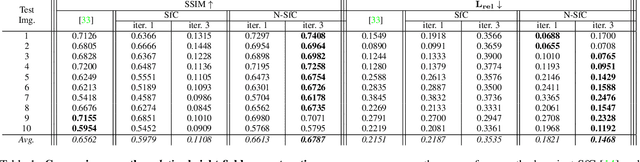

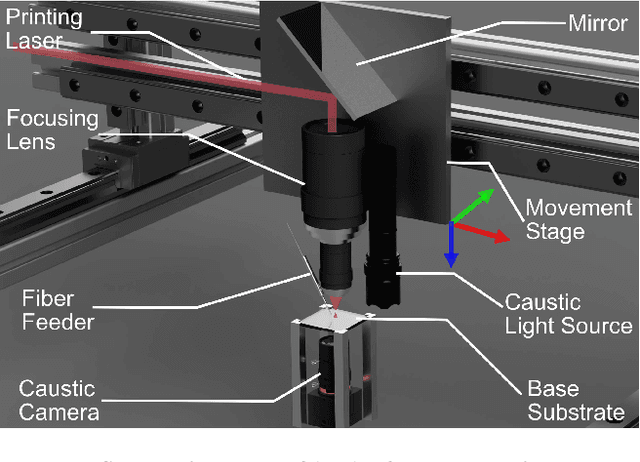

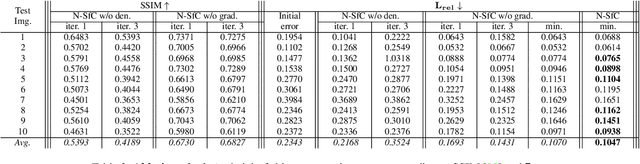

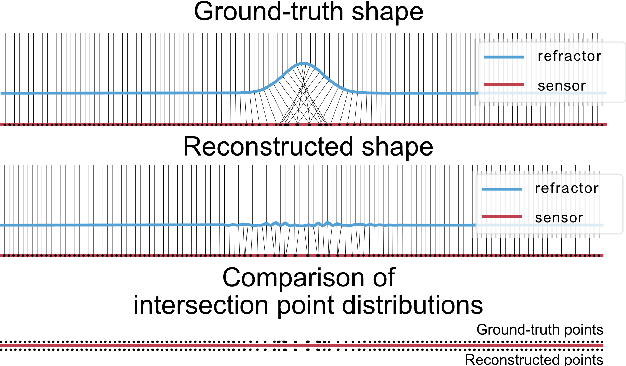

Abstract: This paper deals with the highly challenging problem of reconstructing the shape of a refracting object from a single image of its resulting caustic. Due to the ubiquity of transparent refracting objects in everyday life, reconstruction of their shape entails a multitude of practical applications. The recent Shape from Caustics (SfC) method casts the problem as the inverse of a light propagation simulation for synthesis of the caustic image, which can be solved by a differentiable renderer. However, the inherent complexity of light transport through refracting surfaces currently limits the practicability with respect to reconstruction speed and robustness. To address these issues, we introduce Neural-Shape from Caustics (N-SfC), a learning-based extension that incorporates two components into the reconstruction pipeline: a denoising module, which alleviates the computational cost of the light transport simulation, and an optimization process based on learned gradient descent, which enables better convergence using fewer iterations. Extensive experiments demonstrate the effectiveness of our neural extensions in the scenario of quality control in 3D glass printing, where we significantly outperform the current state-of-the-art in terms of computational speed and final surface error.
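The idea of treating reconstruction as the inverse of a forward simulation can be illustrated with a minimal sketch. This is not the SfC or N-SfC pipeline: the `render` function below is a hypothetical 1D blur standing in for the caustic light transport simulation, finite differences stand in for the gradients a differentiable renderer would provide, and plain gradient descent with a fixed step size stands in for the learned gradient descent described in the abstract. All names are assumptions made for illustration.

```python
def render(heights):
    """Toy forward model (assumption): a 3-tap blur with clamped edges,
    standing in for a light transport simulation."""
    n = len(heights)
    out = []
    for i in range(n):
        left = heights[max(i - 1, 0)]
        right = heights[min(i + 1, n - 1)]
        out.append(0.25 * left + 0.5 * heights[i] + 0.25 * right)
    return out


def loss(heights, target):
    """Squared difference between the rendered and the observed image."""
    return sum((r - t) ** 2 for r, t in zip(render(heights), target))


def numerical_gradient(heights, target, eps=1e-6):
    """Central finite differences; a differentiable renderer would
    supply this gradient directly."""
    grad = []
    for i in range(len(heights)):
        up = list(heights)
        up[i] += eps
        down = list(heights)
        down[i] -= eps
        grad.append((loss(up, target) - loss(down, target)) / (2 * eps))
    return grad


def reconstruct(target, steps=2000, lr=0.5):
    """Recover the heights by gradient descent on the image loss."""
    heights = [0.0] * len(target)
    for _ in range(steps):
        grad = numerical_gradient(heights, target)
        heights = [h - lr * g for h, g in zip(heights, grad)]
    return heights


true_heights = [0.0, 0.2, 0.8, 0.3, 0.0]
target = render(true_heights)      # the "observed" image
estimate = reconstruct(target)     # shape recovered from the image alone
```

Even in this toy setting the fixed-step optimizer needs many iterations to recover the fine detail that the forward model smooths away, which hints at why an optimizer that converges in fewer iterations is attractive.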

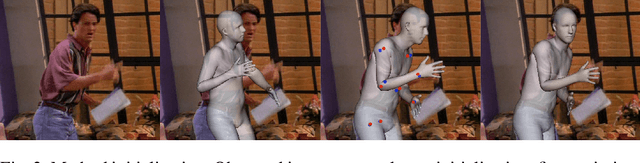

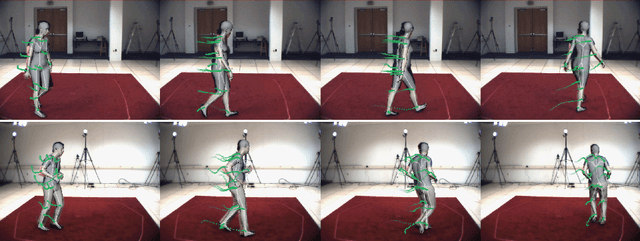

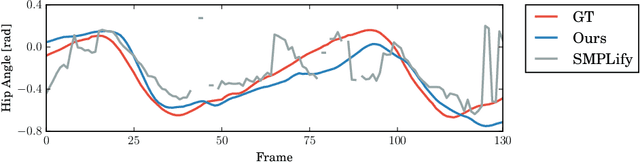

Optical Flow-based 3D Human Motion Estimation from Monocular Video

Mar 21, 2017

Abstract: We present a generative method to estimate 3D human motion and body shape from monocular video. Under the assumption that, starting from an initial pose, optical flow constrains subsequent human motion, we exploit flow to find temporally coherent human poses of a motion sequence. We estimate human motion by minimizing the difference between computed flow fields and the output of an artificial flow renderer. A single initialization step is required to estimate motion over multiple frames. Several regularization functions enhance robustness over time. Our test scenarios demonstrate that optical flow effectively regularizes the under-constrained problem of human shape and motion estimation from monocular video.
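The core step, minimizing the difference between a computed flow field and the output of a flow renderer, can be sketched in a much reduced setting. The example below is an assumption-laden toy, not the paper's method: the "body" is a handful of 2D points, the pose change is a rigid rotation plus translation rather than an articulated 3D model, the hypothetical `rendered_flow` plays the role of the artificial flow renderer, and the regularization terms mentioned in the abstract are omitted.

```python
import math


def rendered_flow(points, theta, tx, ty):
    """Flow predicted for each point when the model rotates by theta
    and translates by (tx, ty). Hypothetical stand-in for a flow renderer."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx - x, s * x + c * y + ty - y) for x, y in points]


def energy_and_gradient(points, observed, theta, tx, ty):
    """Sum of squared flow differences and its analytic gradient."""
    c, s = math.cos(theta), math.sin(theta)
    energy = 0.0
    g_theta = g_tx = g_ty = 0.0
    predicted = rendered_flow(points, theta, tx, ty)
    for (x, y), (fx, fy), (ox, oy) in zip(points, predicted, observed):
        rx, ry = fx - ox, fy - oy          # flow residual for this point
        energy += rx * rx + ry * ry
        # Derivative of the predicted flow with respect to theta
        g_theta += 2.0 * (rx * (-s * x - c * y) + ry * (c * x - s * y))
        g_tx += 2.0 * rx
        g_ty += 2.0 * ry
    return energy, (g_theta, g_tx, g_ty)


def fit_motion(points, observed, steps=300, lr=0.05):
    """Gradient descent from the initial pose (no motion)."""
    theta = tx = ty = 0.0
    for _ in range(steps):
        _, (g_theta, g_tx, g_ty) = energy_and_gradient(points, observed, theta, tx, ty)
        theta -= lr * g_theta
        tx -= lr * g_tx
        ty -= lr * g_ty
    return theta, tx, ty


points = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0), (1.0, 1.0)]
true_motion = (0.1, 0.05, -0.02)                 # theta, tx, ty
observed = rendered_flow(points, *true_motion)   # stands in for computed optical flow
estimate = fit_motion(points, observed)
```

For a longer sequence, the estimate for one frame would serve as the starting pose for the next, which is one way to read the abstract's remark that a single initialization step suffices for multiple frames.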
