Manuel M. Oliveira

Digital Image Forensics vs. Image Composition: An Indirect Arms Race

Jan 13, 2016

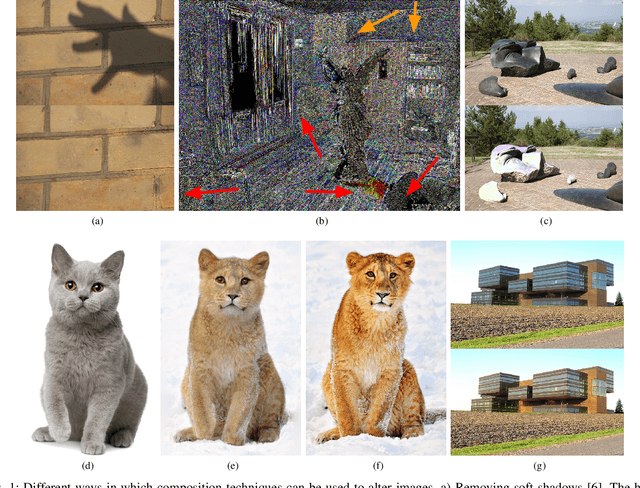

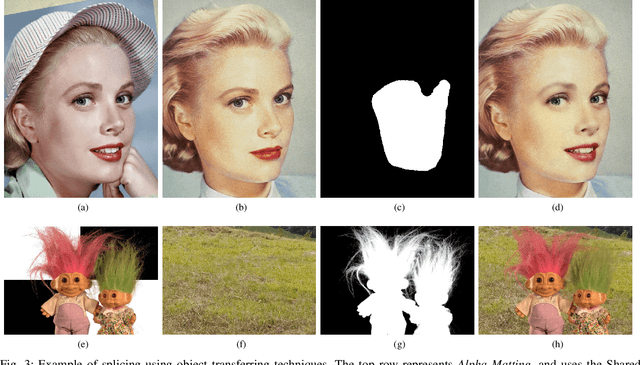

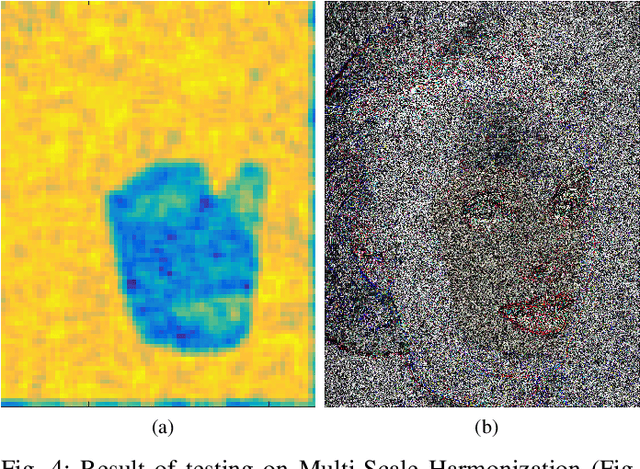

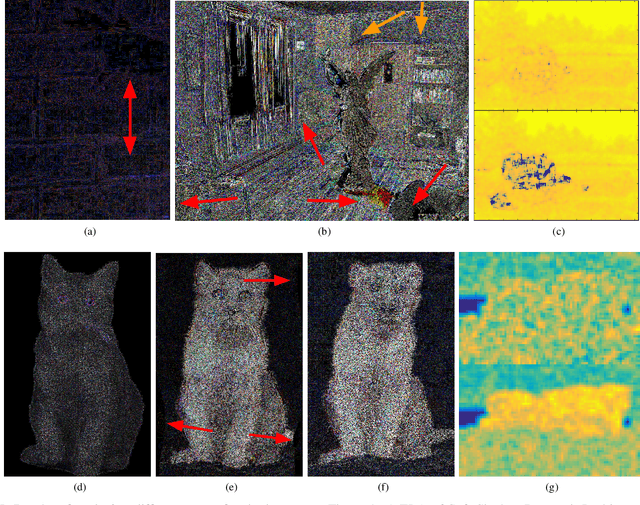

Abstract: The field of image composition is constantly trying to improve the ways in which an image can be altered and enhanced. While this is usually done in the name of aesthetics and practicality, it also provides tools that can be used to maliciously alter images. In this sense, the field of digital image forensics has to be prepared to deal with the influx of new technology, in a constant arms race. In this paper, the current state of this arms race is analyzed, surveying the state of the art and providing means to compare both sides. A novel scale to classify image forensics assessments is proposed, and experiments are performed to test composition techniques with regard to different forensic traces. We show that even though research in forensics seems unaware of the advanced forms of image composition, it possesses the basic tools to detect them.

Humans Are Easily Fooled by Digital Images

Sep 17, 2015

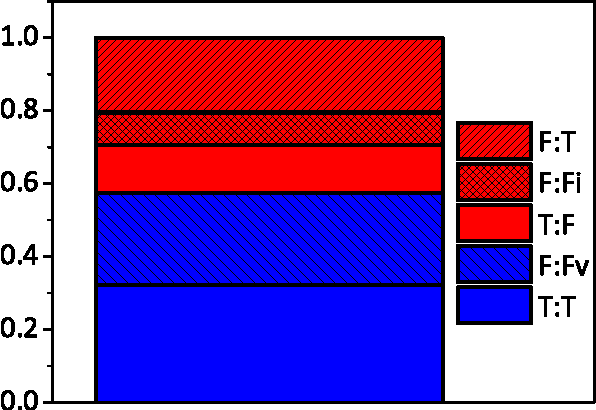

Abstract: Digital images are ubiquitous in our modern lives, with uses ranging from social media to news, and even scientific papers. For this reason, it is crucial to evaluate how accurate people are at identifying doctored images. In this paper, we performed an extensive user study evaluating subjects' capacity to detect fake images. After observing an image, users were asked whether it had been altered. If the user answered that the image had been altered, they had to provide evidence in the form of a click on the image. We collected 17,208 individual answers from 383 users, using 177 images selected from public forensic databases. Unlike previous studies, our method proposes different ways to avoid lucky guesses when evaluating users' answers. Our results indicate that people are inaccurate at differentiating between altered and non-altered images, with an accuracy of 58%, identifying the modified images only 46.5% of the time. We also track user features such as age, answering time, and confidence, providing an in-depth analysis of how such variables influence users' performance.
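The click-based evidence described in the abstract can be illustrated with a minimal sketch. It assumes, purely for illustration, that each altered image comes with a rectangular ground-truth region and that an "altered" answer counts as correct only when the click lands inside that region. The `Answer` class, the bounding-box representation, and the function names are hypothetical and are not taken from the paper, which may use masks or other criteria.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Answer:
    image_is_altered: bool                               # ground truth for the image
    said_altered: bool                                   # what the user answered
    click: Optional[Tuple[int, int]] = None              # (x, y) evidence click, if given
    region: Optional[Tuple[int, int, int, int]] = None   # assumed altered box (x0, y0, x1, y1)


def is_correct(a: Answer) -> bool:
    """Score one answer; an 'altered' answer needs a click inside the region."""
    if not a.image_is_altered:
        return not a.said_altered
    if not a.said_altered or a.click is None or a.region is None:
        return False
    x, y = a.click
    x0, y0, x1, y1 = a.region
    return x0 <= x <= x1 and y0 <= y <= y1


def summarize(answers: List[Answer]) -> Tuple[float, float]:
    """Return (overall accuracy, detection rate on altered images only)."""
    altered = [a for a in answers if a.image_is_altered]
    accuracy = sum(is_correct(a) for a in answers) / len(answers) if answers else 0.0
    detection = sum(is_correct(a) for a in altered) / len(altered) if altered else 0.0
    return accuracy, detection


# Hypothetical sample data, not results from the study.
sample = [
    Answer(image_is_altered=False, said_altered=False),
    Answer(True, True, click=(15, 15), region=(10, 10, 20, 20)),
    Answer(True, True, click=(50, 50), region=(10, 10, 20, 20)),  # lucky guess, wrong spot
    Answer(True, False, region=(10, 10, 20, 20)),
]
accuracy, detection = summarize(sample)
```

Under this scoring, a user who says "altered" but clicks on an untouched part of the image gets no credit, which is one way a lucky guess could be filtered out.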
