Manu Nandan

Fast SVM training using approximate extreme points

Apr 04, 2013

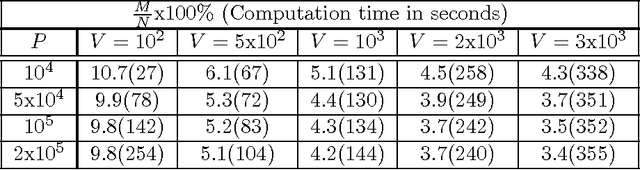

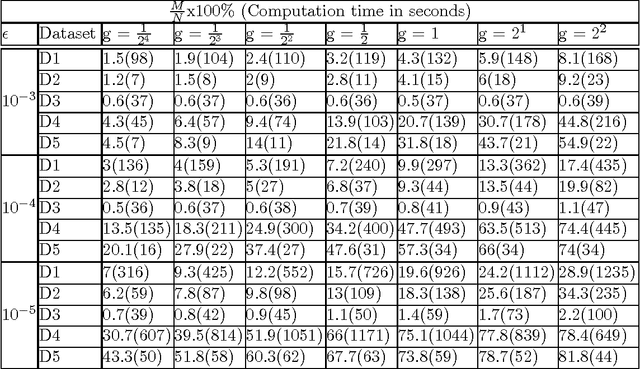

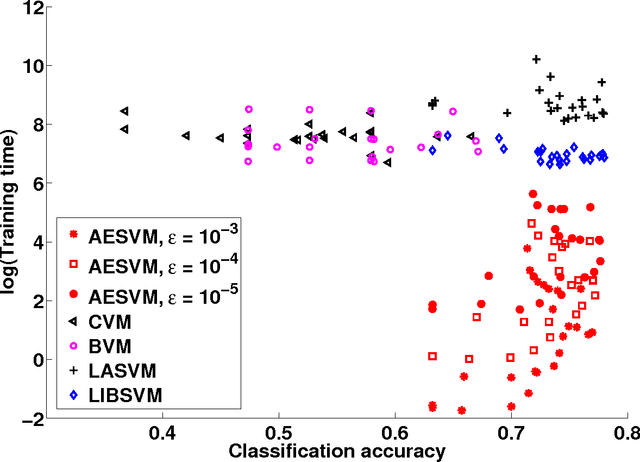

Abstract: The application of non-linear kernel support vector machines (SVMs) to large datasets is seriously hampered by their excessive training time. We propose a modification, called the approximate extreme points support vector machine (AESVM), that is aimed at overcoming this burden. Our approach relies on conducting the SVM optimization over a carefully selected subset of the training dataset, called the representative set. We present analytical results that indicate the similarity of the AESVM and SVM solutions. A linear-time algorithm based on convex hulls and extreme points is used to compute the representative set in kernel space. Extensive computational experiments on nine datasets compared AESVM to LIBSVM \citep{LIBSVM}, CVM \citep{Tsang05}, BVM \citep{Tsang07}, LASVM \citep{Bordes05}, $\text{SVM}^{\text{perf}}$ \citep{Joachims09}, and the random features method \citep{rahimi07}. Our AESVM implementation was found to train much faster than the other methods, while its classification accuracy was similar to that of LIBSVM in all cases. In particular, for a seizure detection dataset, AESVM training was almost $10^3$ times faster than LIBSVM and LASVM and more than forty times faster than CVM and BVM. AESVM also gave competitively fast classification times.
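To make the notion of an extreme point concrete, the following is a minimal sketch that finds the extreme points (convex hull vertices) of a small 2D point set using the standard monotone chain method. It is only an illustration under simplifying assumptions: it works in the input space rather than in kernel space, it runs in O(n log n) rather than linear time, and it computes exact rather than approximate extreme points, so it is not the AESVM procedure itself. The names `cross`, `extreme_points`, and `sample` are hypothetical and do not come from the paper.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def cross(o: Point, a: Point, b: Point) -> float:
    """Z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def extreme_points(points: List[Point]) -> List[Point]:
    """Return the convex hull vertices in counter-clockwise order.

    Illustrative only: exact hull in 2D input space (monotone chain),
    not the approximate kernel-space computation described in the abstract.
    """
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    # Build the lower hull, discarding points that do not make a left turn.
    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)

    # Build the upper hull by scanning the points in reverse order.
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)

    # The last point of each chain is the first point of the other chain.
    return lower[:-1] + upper[:-1]


# A unit-style square with two interior points; only the corners are extreme.
sample = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0), (1.0, 1.0), (1.0, 0.5)]
hull = extreme_points(sample)
print(hull)
```

In this toy example the two interior points are discarded and only the four corners remain, which hints at why restricting attention to extreme points can shrink a training set considerably.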
