Mansoor Zolghadri Jahromi

Local Distance Metric Learning for Nearest Neighbor Algorithm

Mar 15, 2018

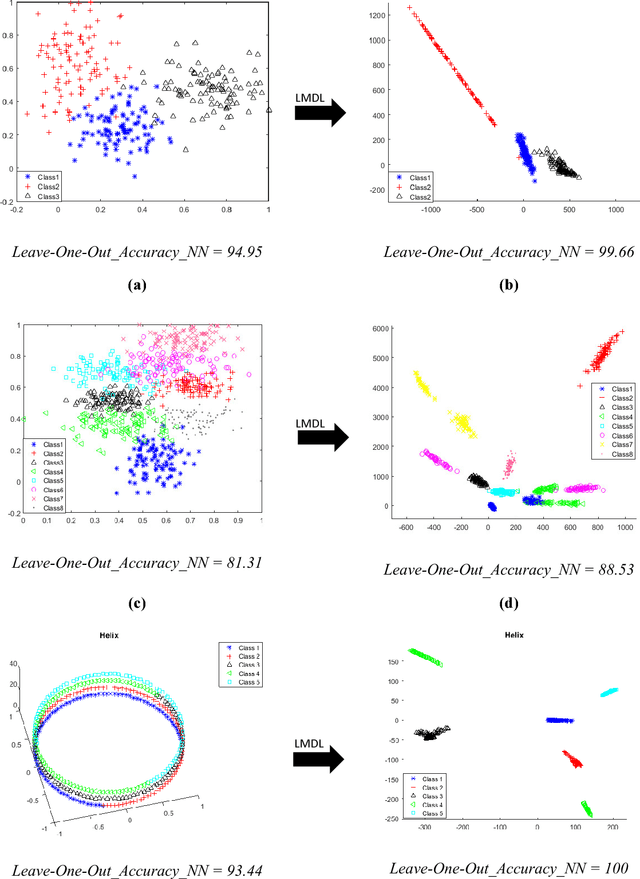

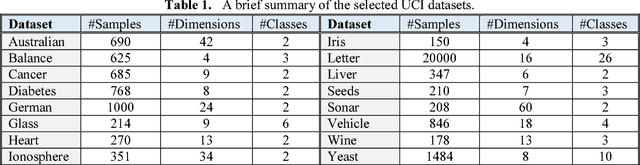

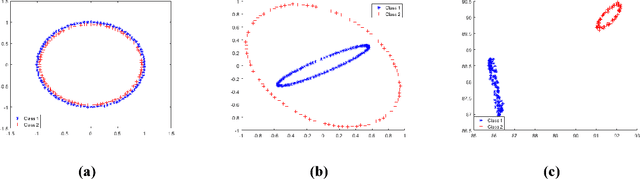

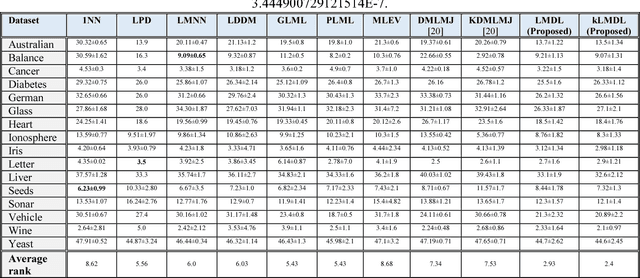

Abstract: Distance metric learning is a successful way to enhance the performance of the nearest neighbor classifier. In most cases, however, the data distribution does not follow a regular form and may vary across different parts of the feature space. To address this, this paper proposes a novel local distance metric learning method, Local Mahalanobis Distance Learning (LMDL), to enhance the performance of the nearest neighbor classifier. LMDL considers the neighborhood influence and learns multiple distance metrics for a reduced set of input samples. The samples in this reduced set are called prototypes, and they try to preserve local discriminative information as much as possible. The proposed LMDL can be kernelized very easily, which is highly desirable for highly nonlinear data. The quality and efficiency of the proposed method are assessed through a set of experiments on various datasets, and the obtained results show that LMDL, as well as its kernelized version, is superior to other related state-of-the-art methods.
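The idea of classifying with one Mahalanobis metric per prototype can be illustrated with a minimal sketch. It covers only the prediction step, not the learning procedure the paper proposes: the prototypes and their metric matrices below are hand-picked toy values, and the names `mahalanobis_sq`, `predict`, and `PROTOTYPES` are hypothetical, introduced here for illustration.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]
Matrix = Sequence[Sequence[float]]
Prototype = Tuple[Vector, Matrix, int]  # (location, local metric, class label)


def mahalanobis_sq(x: Vector, p: Vector, m: Matrix) -> float:
    """Squared Mahalanobis distance (x - p)^T M (x - p), with M assumed PSD."""
    d = [xi - pi for xi, pi in zip(x, p)]
    n = len(d)
    return sum(d[i] * m[i][j] * d[j] for i in range(n) for j in range(n))


def predict(x: Vector, prototypes: List[Prototype]) -> int:
    """Label of the prototype closest to x, each measured with its own metric."""
    best_label, best_dist = -1, float("inf")
    for point, metric, label in prototypes:
        dist = mahalanobis_sq(x, point, metric)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


# Assumed toy data: the metrics are chosen by hand, not learned.
PROTOTYPES: List[Prototype] = [
    ((0.0, 0.0), ((1.0, 0.0), (0.0, 1.0)), 0),  # plain Euclidean metric
    ((4.0, 0.0), ((0.1, 0.0), (0.0, 1.0)), 1),  # first axis down-weighted
]
```

With these toy values, the query point `(1.5, 0.0)` is closer to the first prototype in Euclidean terms, yet `predict((1.5, 0.0), PROTOTYPES)` returns `1`, because the second prototype's local metric shrinks distances along the first axis (0.625 versus 2.25). This is the kind of region-dependent behavior that a single global metric cannot express.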
