Mahmoud Namazi

Compression of Higher Order Ambisonics with Multichannel RVQGAN

Nov 18, 2024

Abstract: A multichannel extension to the RVQGAN neural coding method is proposed and realized for data-driven compression of third-order Ambisonics audio. The input and output layers of the generator and discriminator models are modified to accept multiple (16) channels without increasing the model bitrate. We also propose a loss function that accounts for spatial perception in immersive reproduction, as well as transfer learning from single-channel models. Listening test results with 7.1.4 immersive playback show that the proposed extension is suitable for coding scene-based, 16-channel Ambisonics content with good quality at 16 kbit/s.
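The channel count quoted in the abstract follows from the standard relation between Ambisonics order and number of channels, (N + 1)^2. The short sketch below only illustrates that arithmetic and the average per-channel budget implied by 16 kbit/s. The function names are hypothetical, and the even split is an illustrative average, not a description of how the codec actually allocates bits.

```python
def ambisonics_channels(order: int) -> int:
    """Number of channels in a full-sphere Ambisonics signal of the given order."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


def average_bits_per_channel(total_bitrate_bps: float, order: int) -> float:
    """Average bitrate per channel if the total were split evenly (illustrative only)."""
    return total_bitrate_bps / ambisonics_channels(order)


if __name__ == "__main__":
    print(ambisonics_channels(3))               # third order, as in the abstract
    print(average_bits_per_channel(16_000, 3))  # 16 kbit/s spread over all channels
```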

Improved Lossless Coding for Storage and Transmission of Multichannel Immersive Audio

Oct 27, 2023

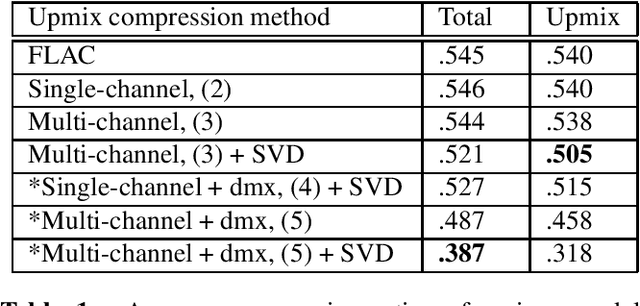

Abstract: In this paper, techniques for improving multichannel lossless coding are examined. A method is proposed for the simultaneous coding of two or more different renderings (mixes) of the same content. The signal model uses both past samples of the upmix and current-time samples of the downmix to predict the upmix. Model parameters are optimized via a general linear solver, and the prediction residual is Rice coded. Additionally, the use of an SVD projection prior to residual coding is proposed. A comparison is made against various baselines, including FLAC. The proposed methods show improved compression ratios for the storage and transmission of immersive audio.
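The signal model described in the abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: one upmix channel is predicted from its own past samples plus the current downmix samples, the coefficients are fitted with NumPy's least-squares solver (standing in for the "general linear solver"), and the integer residual is Rice coded with a fixed parameter `k`. The function names, the predictor order, and the zigzag mapping of signed values are all assumptions, and a real lossless codec would also need to quantize and transmit the coefficients so the decoder can reproduce the same prediction.

```python
import numpy as np


def fit_predictor(upmix, downmix, order=2):
    """Fit a least-squares predictor for one upmix channel.

    upmix:   (T,) integer samples of the channel to predict.
    downmix: (T, C) integer samples of the already-coded downmix channels.
    Regressors are the `order` most recent upmix samples and the current
    downmix samples. Returns (coefficients, integer residual).
    """
    upmix = np.asarray(upmix, dtype=np.int64)
    downmix = np.asarray(downmix, dtype=np.int64)
    rows = []
    for n in range(order, len(upmix)):
        past = upmix[n - order:n][::-1]          # most recent sample first
        rows.append(np.concatenate([past, downmix[n]]))
    A = np.asarray(rows, dtype=float)
    b = upmix[order:].astype(float)
    coeffs, *_ = np.linalg.lstsq(A, b, rcond=None)
    prediction = np.rint(A @ coeffs).astype(np.int64)
    residual = upmix[order:] - prediction
    return coeffs, residual


def rice_encode(value, k):
    """Rice code of a signed integer as a bit string.

    Signed values are mapped to non-negative ones (zigzag), then coded as a
    unary quotient terminated by '0', followed by a k-bit binary remainder.
    """
    u = 2 * value if value >= 0 else -2 * value - 1
    q, r = u >> k, u & ((1 << k) - 1)
    bits = "1" * q + "0"
    if k > 0:
        bits += format(r, f"0{k}b")
    return bits


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    down = rng.integers(-1000, 1000, size=(200, 2))
    up = down.sum(axis=1)                        # toy upmix fully explained by the downmix
    _, res = fit_predictor(up, down)
    total_bits = sum(len(rice_encode(int(v), 0)) for v in res)
    print(total_bits)                            # one bit per sample when the residual is all zero
```

In this toy case the upmix is an exact linear function of the downmix, so the residual vanishes and each sample costs a single bit. With real content the residual is nonzero and `k` would be chosen per block to match its magnitude.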
