Maarten W. A. Wijntjes

What Can Style Transfer and Paintings Do For Model Robustness?

Nov 30, 2020

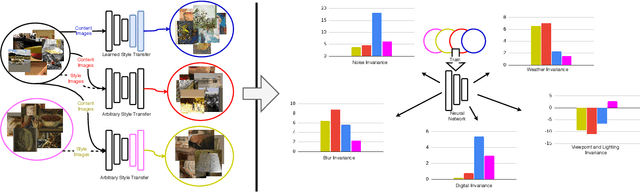

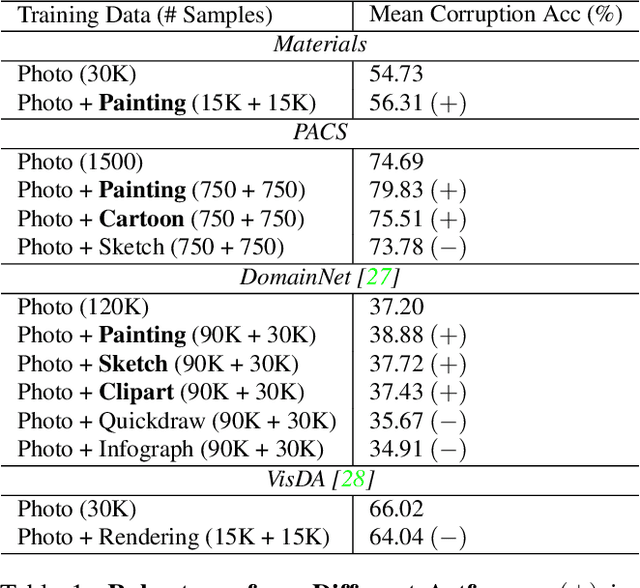

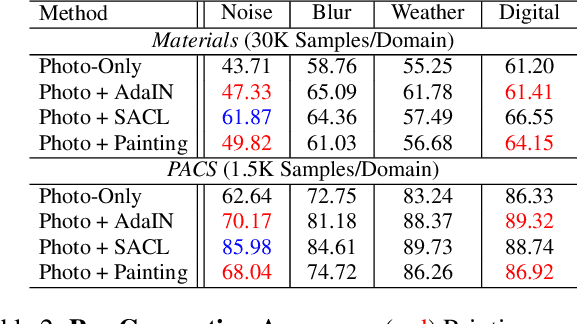

Abstract: A common strategy for improving model robustness is data augmentation. Data augmentations encourage models to learn desired invariances, such as invariance to horizontal flipping or to small changes in color. Recent work has shown that arbitrary style transfer can be used as a form of data augmentation to encourage invariance to textures by creating painting-like images from photographs. However, a stylized photograph is not quite the same as an artist-created painting. Artists depict perceptually meaningful cues in paintings so that humans can recognize salient components in scenes, an emphasis that is not enforced in style transfer. We therefore study how style transfer and paintings differ in their impact on model robustness. First, we investigate the role of paintings as style images for stylization-based data augmentation. We find that style transfer functions well even without paintings as style images. Second, we show that learning from paintings as a form of perceptual data augmentation can improve model robustness. Finally, we investigate the invariances learned from stylization and from paintings, and show that models learn different invariances from these two forms of data. Our results provide insight into how stylization improves model robustness, and provide evidence that artist-created paintings can be a valuable source of data for model robustness.
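The abstract does not name the stylization method. One widely used choice for arbitrary style transfer is adaptive instance normalization (AdaIN), which rescales each channel of the content image's encoder features so that its mean and standard deviation match those of the style image's features. The minimal NumPy sketch below shows only that feature-statistics step, assuming feature maps of shape (C, H, W); the pretrained encoder and decoder that map between pixels and features are omitted, and `adain` and `stylize_features` are hypothetical names, not the paper's code.

```python
import numpy as np

def adain(content, style, eps=1e-5):
    """Adaptive instance normalization on feature maps of shape (C, H, W).

    Each channel of `content` is normalized to zero mean and unit std over its
    spatial positions, then rescaled and shifted to the per-channel std and mean
    of `style`. `content` and `style` may differ in H and W, but not in C.
    """
    c_mean = content.mean(axis=(1, 2), keepdims=True)
    c_std = content.std(axis=(1, 2), keepdims=True) + eps  # eps avoids division by zero
    s_mean = style.mean(axis=(1, 2), keepdims=True)
    s_std = style.std(axis=(1, 2), keepdims=True) + eps
    return s_std * (content - c_mean) / c_std + s_mean

def stylize_features(content, style, alpha=1.0):
    """Blend AdaIN output with the original content features.

    alpha = 1.0 gives full stylization; alpha = 0.0 returns the content unchanged.
    In a full pipeline, a decoder would map the result back to an image.
    """
    return alpha * adain(content, style) + (1.0 - alpha) * content

# Usage with random stand-in features (real use would take encoder activations).
rng = np.random.default_rng(0)
content_feats = rng.normal(size=(3, 8, 8))
style_feats = rng.normal(loc=2.0, scale=3.0, size=(3, 16, 16))
stylized = stylize_features(content_feats, style_feats, alpha=0.5)
```

Because only per-channel statistics of the style features enter the computation, the `alpha` parameter is a simple way to trade stylization strength against preservation of the content features.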

Insights From A Large-Scale Database of Material Depictions In Paintings

Nov 24, 2020

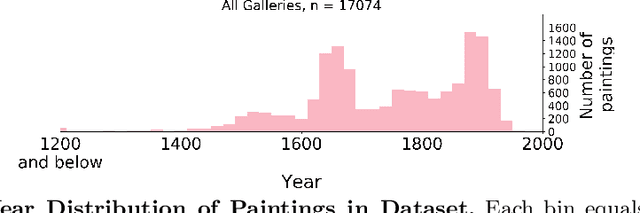

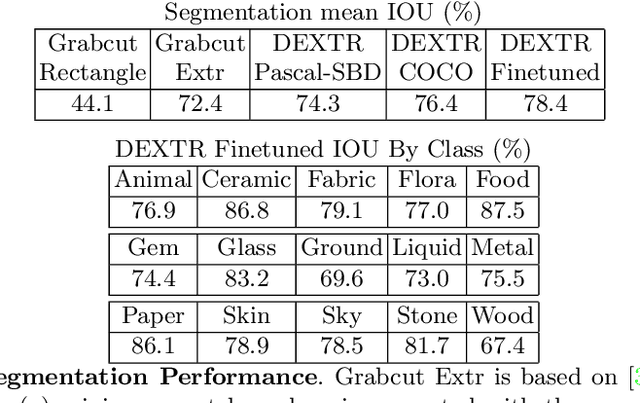

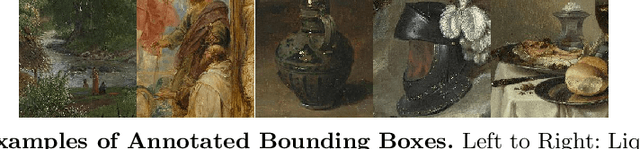

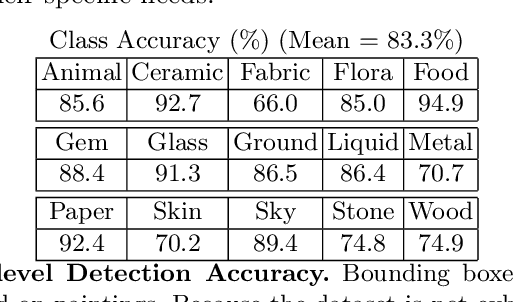

Abstract: Deep learning has paved the way for strong recognition systems that are often both trained on and applied to natural images. In this paper, we examine the give-and-take relationship between such visual recognition systems and the rich information available in the fine arts. First, we find that visual recognition systems designed for natural images can work surprisingly well on paintings. In particular, we find that interactive segmentation tools can be used to cleanly annotate polygonal segments within paintings, a task that is time-consuming to undertake by hand. We also find that Faster R-CNN, a model designed for object recognition in natural scenes, can be quickly repurposed to detect materials in paintings. Second, we show that learning from paintings can be beneficial for neural networks that are intended to be used on natural images. We find that training on paintings instead of natural images can improve the quality of learned features, and we further find that a large collection of paintings can be a valuable source of test data for evaluating domain adaptation algorithms. Our experiments are based on a novel large-scale annotated database of material depictions in paintings, which we detail in a separate manuscript.

Annotating shadows, highlights and faces: the contribution of a 'human in the loop' for digital art history

Sep 10, 2018

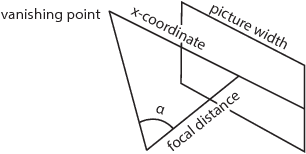

Abstract: While automatic computational techniques appear to reveal novel insights in digital art history, a complementary approach seems to receive less attention: that of human annotation. We argue and demonstrate that a 'human in the loop' can reveal insights that may be difficult to detect automatically. Specifically, we focussed on perceptual aspects of pictorial art. Using rather simple annotation tasks (e.g. delineating the heights of human figures, indicating highlights and classifying gaze direction), we could both replicate earlier findings and reveal novel insights into pictorial conventions. We found that Canaletto depicted human figures in rather accurate perspective, varied the viewpoint elevation between approximately 3 and 9 meters, and strongly preferred light directions parallel to the projection plane. Furthermore, we found that averaging the images of leftward-looking faces yields a woman, whereas averaging rightward-looking faces yields a man, confirming earlier accounts of a lateral gender bias in pictorial art. Lastly, we confirmed and refined the well-known light-from-the-left bias. Together, the annotations, analyses and results exemplify how human annotation can contribute to and complement technical and digital art history.
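The abstract does not say how viewpoint elevation was derived from the delineated figures. One standard single-view geometry approach, sketched here under stated assumptions and not necessarily the authors' procedure: with a pinhole view, a vertical picture plane, and all figures standing on one flat ground plane, a figure's image height h (feet row minus head row) satisfies h = (H / e) * (y_feet - y_horizon), where H is the real figure height and e the viewer's eye height. Fitting a straight line of h against y_feet over several figures therefore recovers both the horizon row and e. The function name and the 1.7 m default for H are hypothetical.

```python
import numpy as np

def estimate_eye_height(feet_y, head_y, figure_height=1.7):
    """Estimate the horizon row and viewer eye height from annotated figures.

    feet_y, head_y: image rows (y increases downward) of each figure's feet and head.
    figure_height: assumed real height of every figure in meters (hypothetical default).

    Assumes a vertical picture plane and figures on one flat ground plane, so that
    image height h = (figure_height / eye_height) * (feet_y - horizon_y).
    Needs at least two figures at different depths (different feet_y).
    """
    feet = np.asarray(feet_y, dtype=float)
    heights = feet - np.asarray(head_y, dtype=float)
    # Least-squares line h = slope * feet + intercept; polyfit returns [slope, intercept].
    slope, intercept = np.polyfit(feet, heights, 1)
    horizon_y = -intercept / slope          # row where the fitted h would shrink to zero
    eye_height = figure_height / slope      # slope = figure_height / eye_height
    return horizon_y, eye_height

# Usage: three annotated figures (rows in pixels).
horizon, eye = estimate_eye_height([200.0, 300.0, 450.0], [171.67, 243.33, 350.83])
```

Since e = H * (y_feet - y_horizon) / h, an eye height above the assumed figure height corresponds to a horizon lying above the figures' heads, which is the situation an elevation of 3 to 9 meters would imply.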
