M. Prannoy

Hyperbolic Embeddings for Learning Options in Hierarchical Reinforcement Learning

Dec 04, 2018

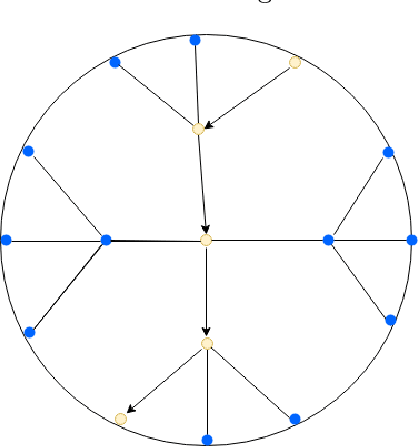

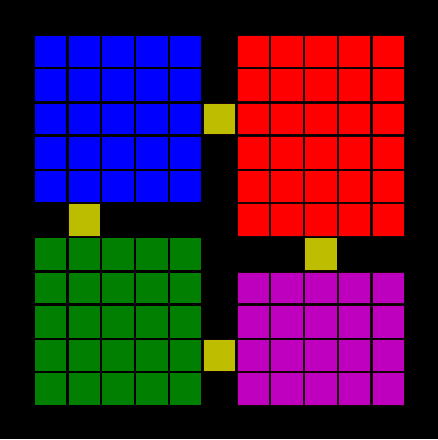

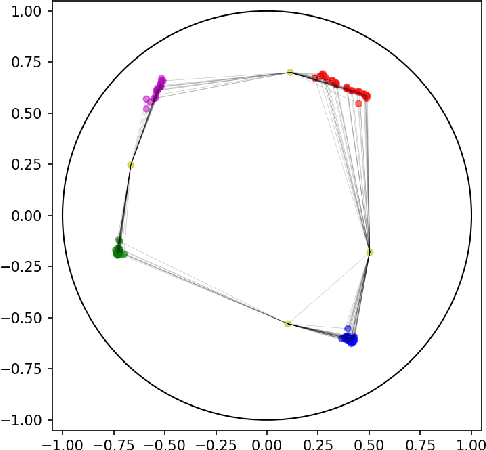

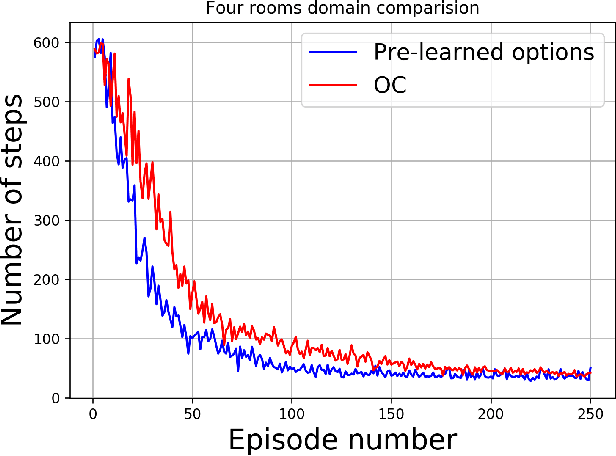

Abstract: Hierarchical reinforcement learning addresses the problem of breaking large tasks down into meaningful sub-tasks. Discovering these sub-tasks autonomously remains a challenging problem. We propose a novel method for learning sub-tasks that combines ideas from routing in computer networks with graph-based skill discovery, within the options framework, to define meaningful sub-goals. We apply recent advances in learning embeddings with Riemannian optimisation to embed the state set into hyperbolic space and thereby build a model of the environment. Doing so enforces a global topology on the states, which we exploit to learn meaningful sub-tasks. We demonstrate empirically, in both discrete and continuous domains, how these embeddings can improve the learning of meaningful sub-tasks.
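To make the idea of a hyperbolic state embedding more concrete, the following is a minimal sketch of the geodesic distance in the Poincaré ball, one common model of hyperbolic space. The abstract does not say which model the paper uses, so the choice of the Poincaré ball, the function name `poincare_distance`, and the example points are all assumptions made for illustration only.

```python
import math


def poincare_distance(u, v):
    """Geodesic distance between two points strictly inside the unit ball.

    Assumes the Poincare ball model (an illustrative choice, not confirmed
    by the abstract):
        d(u, v) = arcosh(1 + 2 * |u - v|^2 / ((1 - |u|^2) * (1 - |v|^2)))
    """
    sq_norm_u = sum(c * c for c in u)
    sq_norm_v = sum(c * c for c in v)
    if sq_norm_u >= 1.0 or sq_norm_v >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    sq_diff = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.acosh(1.0 + 2.0 * sq_diff / ((1.0 - sq_norm_u) * (1.0 - sq_norm_v)))


# Hypothetical embedded states: one at the centre, two near the boundary.
root = (0.0, 0.0)
leaf_a = (0.9, 0.0)
leaf_b = (-0.9, 0.0)

d_root_leaf = poincare_distance(root, leaf_a)
d_leaf_leaf = poincare_distance(leaf_a, leaf_b)
```

In this model, distances grow rapidly towards the boundary of the ball, so points near the edge are far from one another even when their Euclidean separation is small. This is the property that makes hyperbolic space a natural fit for tree-like or hierarchical structure.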
