M. Caner Tol

μRL: Discovering Transient Execution Vulnerabilities Using Reinforcement Learning

Feb 20, 2025

Abstract: We propose using reinforcement learning (RL) to address the challenges of discovering microarchitectural vulnerabilities, such as Spectre and Meltdown, which exploit subtle interactions in modern processors. Traditional methods like random fuzzing fail to efficiently explore the vast instruction space and often miss vulnerabilities that manifest only under specific conditions. To overcome this, we introduce an intelligent, feedback-driven approach using RL. Our RL agents interact with the processor, learning from real-time feedback to prioritize instruction sequences more likely to reveal vulnerabilities, significantly improving the efficiency of the discovery process. We also demonstrate that RL systems adapt effectively to various microarchitectures, providing a scalable solution across processor generations. By automating the exploration process, we reduce the need for human intervention, enabling continuous learning that uncovers hidden vulnerabilities. Additionally, our approach detects subtle signals, such as timing anomalies or unusual cache behavior, that may indicate microarchitectural weaknesses. This proposal advances hardware security testing by introducing a more efficient, adaptive, and systematic framework for protecting modern processors. When run on Intel Skylake-X and Raptor Lake microarchitectures, our RL agent generated instruction sequences that cause significant observable byte leakage through transient execution without generating any μcode assists, faults, or interrupts. The newly identified leaky sequences stem from a variety of Intel instructions, including SERIALIZE, VERR/VERW, CLMUL, MMX-x87 transitions, LSL+RDTSCP, and LAR. These initial results give credence to the proposed approach.
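The feedback loop described in this abstract can be illustrated with a toy epsilon-greedy bandit. This is only a sketch under loud assumptions: the abstract does not say which RL algorithm the agents use, and the sequence names (`seq_a` to `seq_d`) and the `simulated_leak` reward are hypothetical stand-ins for real instruction sequences and real processor feedback.

```python
import random


def run_bandit(arms, reward_fn, steps=200, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit: returns (estimated value per arm, pull counts)."""
    rng = random.Random(seed)
    values = {arm: 0.0 for arm in arms}
    counts = {arm: 0 for arm in arms}
    for step in range(steps):
        if step < len(arms):
            arm = arms[step]                  # try every arm once first
        elif rng.random() < epsilon:
            arm = rng.choice(arms)            # explore a random arm
        else:
            arm = max(arms, key=values.get)   # exploit the best estimate
        reward = reward_fn(arm)
        counts[arm] += 1
        # Incremental update of the running mean reward for this arm.
        values[arm] += (reward - values[arm]) / counts[arm]
    return values, counts


def simulated_leak(sequence):
    """Hypothetical feedback signal: only one candidate 'leaks' (assumption)."""
    return 1.0 if sequence == "seq_c" else 0.0


ARMS = ["seq_a", "seq_b", "seq_c", "seq_d"]  # placeholder sequence names
values, counts = run_bandit(ARMS, simulated_leak)
best = max(ARMS, key=values.get)
```

After the initial pass over all arms, the agent spends most of its budget on the one candidate that produced a signal, which is the prioritization effect the abstract attributes to feedback-driven search over random fuzzing.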

ZeroLeak: Using LLMs for Scalable and Cost Effective Side-Channel Patching

Aug 24, 2023

Abstract: Security-critical software, e.g., OpenSSL, comes with numerous side-channel leakages left unpatched due to a lack of resources or experts. The situation will only worsen as the pace of code development accelerates, with developers relying on Large Language Models (LLMs) to automatically generate code. In this work, we explore the use of LLMs in generating patches for vulnerable code with microarchitectural side-channel leakages. For this, we investigate the generative abilities of powerful LLMs by carefully crafting prompts following a zero-shot learning approach. All generated code is dynamically analyzed by leakage detection tools capable of pinpointing, at the instruction level, information leaked through secret-dependent memory accesses, secret-dependent branches, or vulnerable Spectre gadgets. Carefully crafted prompts are used to generate candidate replacements for vulnerable code, which are then analyzed for correctness and for leakage resilience. From a cost/performance perspective, the GPT-4-based configuration costs only a few cents in API calls per vulnerability fixed. Our results show that LLM-based patching is far more cost-effective and thus provides a scalable solution. Finally, the framework we propose will improve in time, especially as vulnerability detection tools and LLMs mature.

An Optimization Perspective on Realizing Backdoor Injection Attacks on Deep Neural Networks in Hardware

Oct 14, 2021

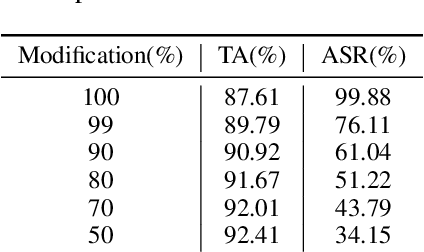

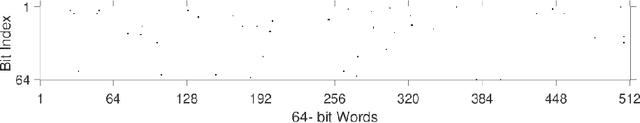

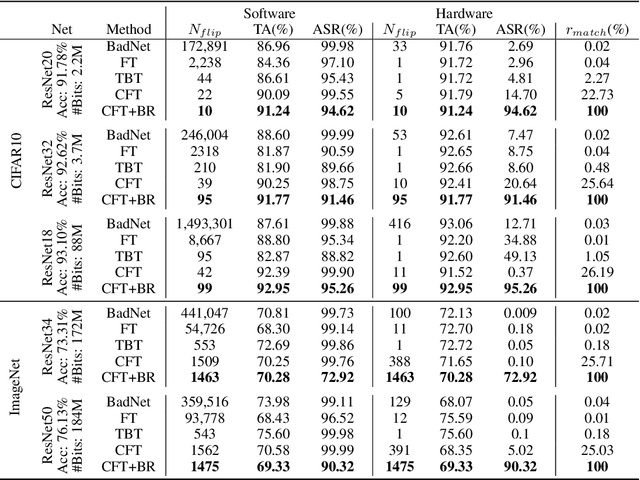

Abstract: State-of-the-art deep neural networks (DNNs) have been proven to be vulnerable to adversarial manipulation and backdoor attacks. Backdoored models deviate from expected behavior on inputs with predefined triggers while retaining performance on clean data. Recent works focus on software simulation of backdoor injection during the inference phase by modifying network weights, which we find is often unrealistic in practice due to hardware restrictions such as bit allocation in memory. In contrast, in this work, we investigate the viability of backdoor injection attacks in real-life deployments of DNNs on hardware and address such practical issues in hardware implementation from a novel optimization perspective. We are motivated by the fact that vulnerable memory locations are very rare, device-specific, and sparsely distributed. Consequently, we propose a novel network training algorithm based on constrained optimization for realistic backdoor injection attacks in hardware. By modifying parameters uniformly across the convolutional and fully-connected layers as well as optimizing the trigger pattern together, we achieve state-of-the-art attack performance with fewer bit flips. For instance, our method on a hardware-deployed ResNet-20 model trained on CIFAR-10 can achieve over 91% test accuracy and 94% attack success rate by flipping only 10 bits out of 2.2 million bits.
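To make the notion of a single bit flip concrete, the sketch below shows how flipping one bit changes a stored weight. It assumes signed 8-bit two's complement weights, which the abstract does not state; `flip_bit` is a hypothetical helper and not part of the paper's method.

```python
def flip_bit(weight, bit):
    """Flip one bit of a signed 8-bit weight and return the new signed value.

    Assumes two's complement int8 storage (an assumption for illustration).
    """
    if not -128 <= weight <= 127:
        raise ValueError("weight must fit in int8")
    if not 0 <= bit <= 7:
        raise ValueError("bit index must be in 0..7")
    raw = weight & 0xFF       # reinterpret the value as an unsigned byte
    raw ^= 1 << bit           # flip the selected bit
    return raw - 256 if raw >= 128 else raw  # convert back to signed


low = flip_bit(5, 0)    # flip the least significant bit: 5 -> 4
high = flip_bit(5, 7)   # flip the sign bit: 5 -> -123
```

The effect of a flip depends heavily on the bit position, which is one reason an attack restricted to a few flippable, device-specific locations has to choose them carefully.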

FastSpec: Scalable Generation and Detection of Spectre Gadgets Using Neural Embeddings

Jun 25, 2020

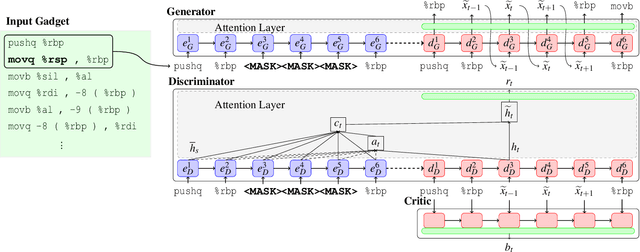

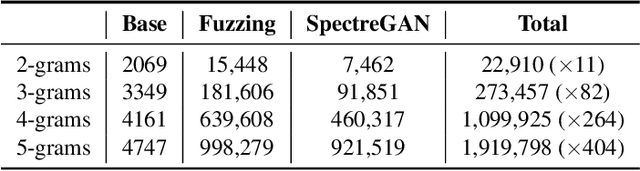

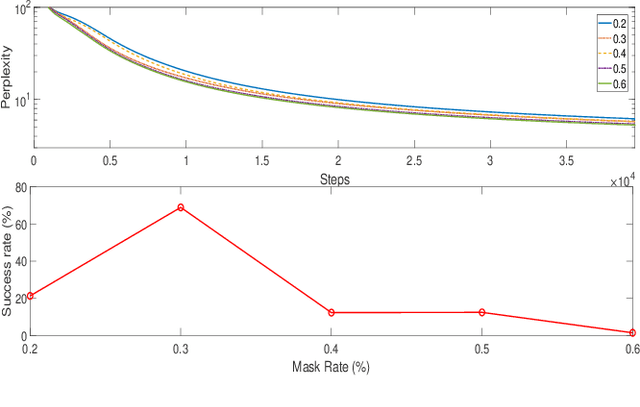

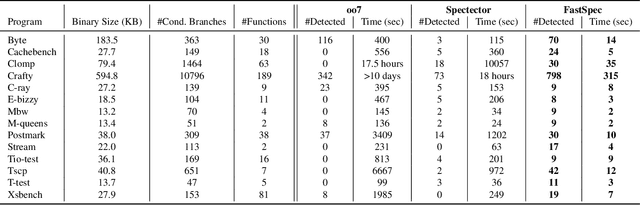

Abstract: Several techniques have been proposed to detect vulnerable Spectre gadgets in widely deployed commercial software. Unfortunately, the detection techniques proposed so far rely on hand-written rules that fall short in covering subtle variations of known Spectre gadgets and demand a huge amount of time to analyze each conditional branch in software. Since it requires arduous effort to craft new gadgets manually, the evaluations of detection mechanisms are based only on a handful of these gadgets. In this work, we employ deep learning techniques for automated generation and detection of Spectre gadgets. We first create a diverse set of Spectre-V1 gadgets by introducing perturbations to the known gadgets. Using mutational fuzzing, we produce a data set with more than 1 million Spectre-V1 gadgets, which is the largest Spectre gadget data set built to date. Next, we conduct the first empirical usability study of Generative Adversarial Networks (GANs) for creating assembly code without any human interaction. We introduce SpectreGAN, which leverages a masking implementation of GANs for both learning the gadget structures and generating new gadgets. This provides the first scalable solution to extend the variety of Spectre gadgets. Finally, we propose FastSpec, which builds a classifier with the generated Spectre gadgets based on the novel high-dimensional neural embedding technique BERT. For case studies, we demonstrate that FastSpec discovers potential gadgets in OpenSSL libraries and Phoronix benchmarks. Further, FastSpec offers much greater flexibility and much faster classification compared to what is offered by the existing tools. Therefore, FastSpec can be used for gadget detection in large-scale projects.
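The idea of perturbing known gadgets can be sketched as a tiny mutator that inserts filler instructions into an assembly listing. Everything here is an illustrative assumption: the abstract does not describe the actual perturbation operators, the `GADGET` listing is a schematic bounds-check-bypass pattern in AT&T syntax rather than an entry from the paper's data set, and `FILLERS` and `mutate` are hypothetical names.

```python
import random

# Filler instructions that change neither flags nor data flow (assumption).
FILLERS = ["nop", "mov %rax, %rax"]

# Schematic Spectre-V1-style pattern: bounds check, then dependent loads.
GADGET = [
    "cmp %rsi, %rdi",             # compare index (rdi) with size (rsi)
    "jae done",                   # skip the loads if index >= size
    "movzbl (%rdx,%rdi), %eax",   # load array1[index]
    "shl $9, %eax",               # scale the loaded byte
    "movzbl (%rcx,%rax), %eax",   # dependent load from array2
    "done:",
]


def mutate(gadget, n_inserts, seed):
    """Return a copy of gadget with n_inserts fillers at random positions."""
    rng = random.Random(seed)
    mutated = list(gadget)        # leave the input listing untouched
    for _ in range(n_inserts):
        pos = rng.randrange(len(mutated) + 1)
        mutated.insert(pos, rng.choice(FILLERS))
    return mutated


variant = mutate(GADGET, 3, seed=1)
```

Each variant keeps the original instructions in their original order, so the gadget's structure is preserved while its surface form changes. Repeating this with different seeds and richer operators is one way a small seed set could be expanded into a large one.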

Gimme That Model!: A Trusted ML Model Trading Protocol

Mar 03, 2020

Abstract: We propose a homomorphic encryption (HE)-based protocol for trading ML models and describe possible improvements to the protocol to make the overall transaction more efficient and secure.
