M. Arthur Munson

Reframing Threat Detection: Inside esINSIDER

Apr 07, 2019

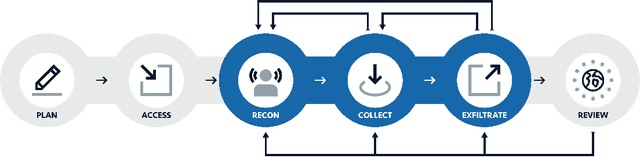

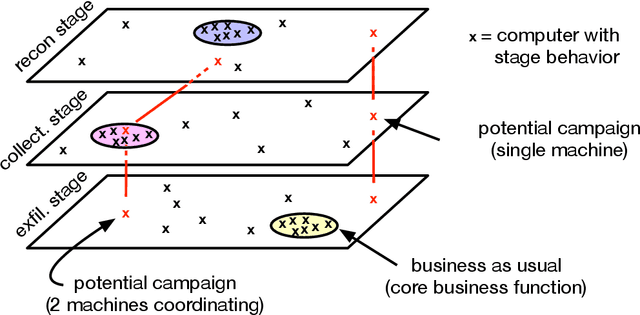

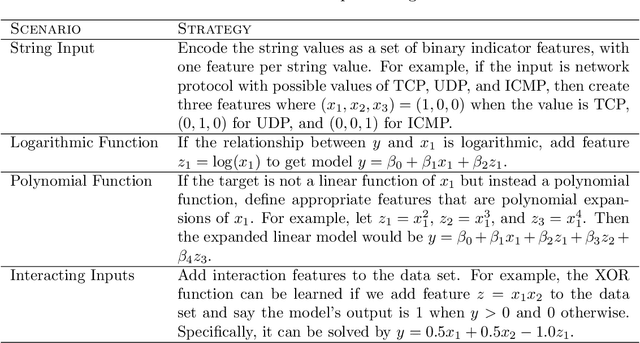

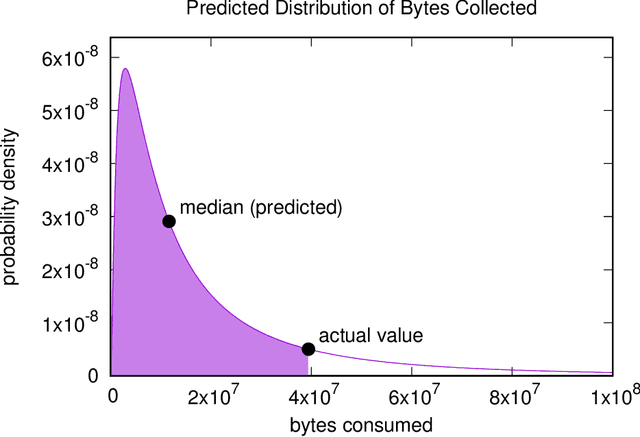

Abstract: We describe the motivation and design for esINSIDER, an automated tool that detects potential persistent and insider threats in a network. esINSIDER aggregates clues from log data, over extended time periods, and proposes a small number of cases for human experts to review. The proposed cases package together related information so the analyst can see a bigger picture of what is happening, and their evidence includes internal network activity resembling reconnaissance and data collection. The core ideas are to 1) detect fundamental campaign behaviors by following data movements over extended time periods, 2) link together behaviors associated with different meta-goals, and 3) use machine learning to understand what activities are expected and consistent for each individual network. We call this approach campaign analytics because it focuses on the threat actor's campaign goals and the intrinsic steps to achieve them. Linking different campaign behaviors (internal reconnaissance, collection, exfiltration) reduces false positives from business-as-usual activities and creates opportunities to detect threats before a large exfiltration occurs. Machine learning makes it practical to deploy this approach by reducing the amount of tuning needed.

COMET: A Recipe for Learning and Using Large Ensembles on Massive Data

Sep 08, 2011

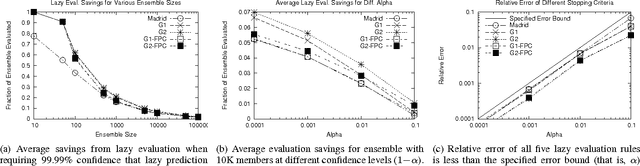

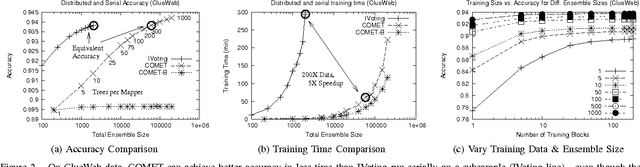

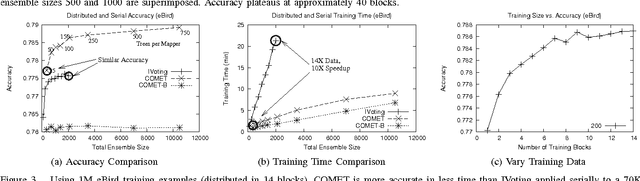

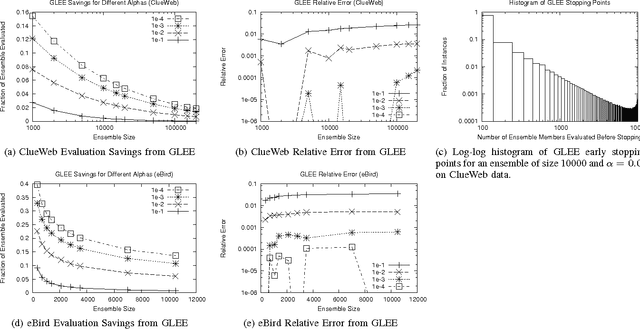

Abstract: COMET is a single-pass MapReduce algorithm for learning on large-scale data. It builds multiple random forest ensembles on distributed blocks of data and merges them into a mega-ensemble. This approach is appropriate when learning from massive-scale data that is too large to fit on a single machine. To get the best accuracy, IVoting should be used instead of bagging to generate the training subset for each decision tree in the random forest. Experiments with two large datasets (5GB and 50GB compressed) show that COMET compares favorably (in both accuracy and training time) to learning on a subsample of data using a serial algorithm. Finally, we propose a new Gaussian approach for lazy ensemble evaluation which dynamically decides how many ensemble members to evaluate per data point; this can reduce evaluation cost by 100X or more.
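
The idea of lazy ensemble evaluation mentioned in the abstract can be illustrated with a minimal Python sketch. This is not the paper's algorithm: the abstract does not give the stopping rule, so the sketch assumes a simple normal-approximation confidence interval around the running mean vote, and the names `lazy_ensemble_predict`, `threshold`, `confidence`, and `min_members` are hypothetical. Each ensemble member is assumed to be a callable returning a vote of 0 or 1.

```python
import math
from statistics import NormalDist


def lazy_ensemble_predict(members, x, threshold=0.5, confidence=0.99, min_members=10):
    """Evaluate members one at a time and stop early when the decision is clear.

    Assumed stopping rule (illustrative, not taken from the paper): treat the
    running mean vote as approximately Gaussian and stop once `threshold` lies
    outside its two-sided confidence interval.
    Returns (predicted_label, members_evaluated).
    """
    # Two-sided z value for the requested confidence level.
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    total = 0.0
    total_sq = 0.0
    n = 0
    for member in members:
        vote = float(member(x))
        n += 1
        total += vote
        total_sq += vote * vote
        if n < min_members:
            continue  # too few votes for the normal approximation
        mean = total / n
        # Population variance of the votes seen so far, clamped against
        # tiny negative values caused by floating-point rounding.
        variance = max(total_sq / n - mean * mean, 0.0)
        std_error = math.sqrt(variance / n)
        if abs(mean - threshold) > z * std_error:
            break  # remaining members are unlikely to flip the decision
    if n == 0:
        raise ValueError("ensemble has no members")
    label = 1 if total / n >= threshold else 0
    return label, n
```

With a unanimous ensemble this sketch stops as soon as `min_members` votes are in, while an evenly split ensemble is evaluated in full. The savings therefore depend on how many data points are "easy" for the ensemble.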
