Lulu Gong

Strong anti-Hebbian plasticity alters the convexity of network attractor landscapes

Dec 22, 2023

Abstract: In this paper, we study recurrent neural networks in the presence of pairwise learning rules. We are specifically interested in how the attractor landscapes of such networks become altered as a function of the strength and nature (Hebbian vs. anti-Hebbian) of learning, which may have a bearing on the ability of such rules to mediate large-scale optimization problems. Through formal analysis, we show that a transition from Hebbian to anti-Hebbian learning brings about a pitchfork bifurcation that destroys convexity in the network attractor landscape. In larger-scale settings, this implies that anti-Hebbian plasticity will bring about multiple stable equilibria, and such effects may be outsized at interconnection or 'choke' points. Furthermore, attractor landscapes are more sensitive to slower learning rates than faster ones. These results provide insight into the types of objective functions that can be encoded via different pairwise plasticity rules.
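As a rough illustration of what a pitchfork bifurcation does to a landscape, the sketch below uses the textbook scalar normal form dx/dt = mu*x - x^3, which is gradient descent on the potential V(x) = -mu*x^2/2 + x^4/4. This is not the network model analyzed in the paper: the parameter `mu` and the function names are assumptions made for illustration, and how `mu` would map onto the strength or sign of a learning rule is left open.

```python
import math


def pitchfork_equilibria(mu):
    """Equilibria of dx/dt = mu*x - x**3, each paired with a linear-stability flag.

    Hypothetical helper for illustration; `mu` is a generic control parameter.
    The linear test is inconclusive exactly at mu == 0.
    """
    points = [0.0]
    if mu > 0:
        r = math.sqrt(mu)
        points = [-r, 0.0, r]
    result = []
    for x in points:
        slope = mu - 3 * x ** 2  # derivative of the right-hand side at x
        result.append((x, slope < 0))  # negative slope means linearly stable
    return result


def min_curvature(mu):
    """Minimum of V''(x) = -mu + 3*x**2 over all x, attained at x = 0."""
    return -mu


def potential_is_convex(mu):
    """V is convex exactly when its curvature is nonnegative everywhere."""
    return min_curvature(mu) >= 0


if __name__ == "__main__":
    for mu in (-1.0, 1.0):
        print(mu, pitchfork_equilibria(mu), potential_is_convex(mu))
```

For `mu < 0` the potential is convex with a single stable equilibrium at the origin; for `mu > 0` the origin becomes unstable, two stable equilibria appear at plus and minus `sqrt(mu)`, and convexity is lost.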

Astrocytes as a mechanism for meta-plasticity and contextually-guided network function

Nov 10, 2023

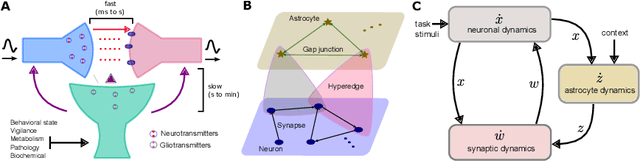

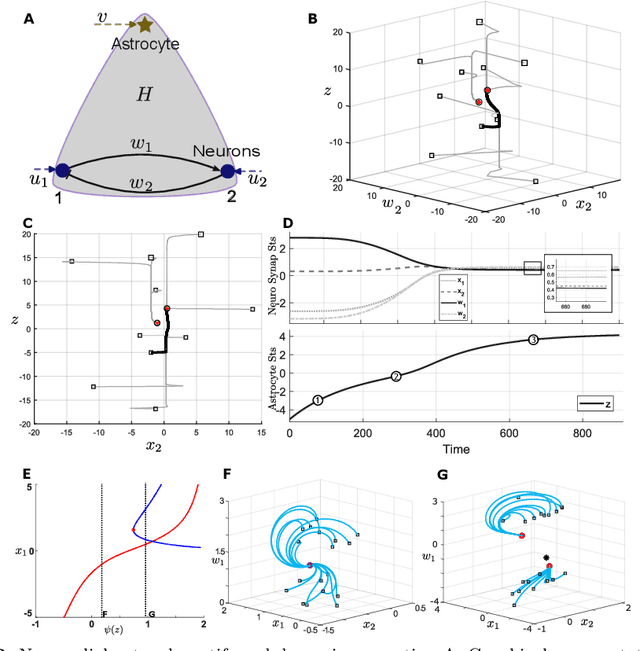

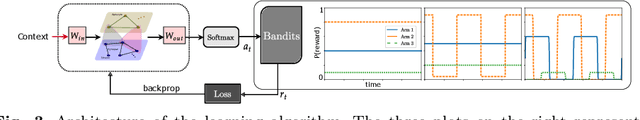

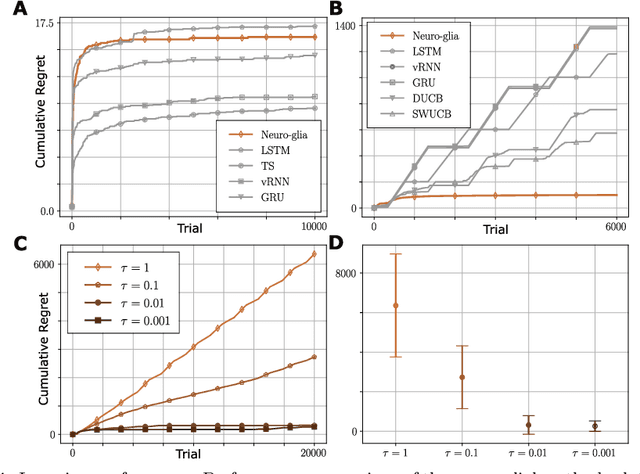

Abstract: Astrocytes are a ubiquitous and enigmatic type of non-neuronal cell and are found in the brains of all vertebrates. While traditionally viewed as being supportive of neurons, it is increasingly recognized that astrocytes may play a more direct and active role in brain function and neural computation. On account of their sensitivity to a host of physiological covariates and ability to modulate neuronal activity and connectivity on slower time scales, astrocytes may be particularly well poised to modulate the dynamics of neural circuits in functionally salient ways. In the current paper, we seek to capture these features via actionable abstractions within computational models of neuron-astrocyte interaction. Specifically, we examine how nested feedback loops of neuron-astrocyte interaction, acting over separated time scales, may endow astrocytes with the capability to enable learning in context-dependent settings, where fluctuations in task parameters may occur much more slowly than within-task requirements. We pose a general model of neuron-synapse-astrocyte interaction and use formal analysis to characterize how astrocytic modulation may constitute a form of meta-plasticity, altering the ways in which synapses and neurons adapt as a function of time. We then embed this model in a bandit-based reinforcement learning task environment, and show how the presence of time-scale separated astrocytic modulation enables learning over multiple fluctuating contexts. Indeed, these networks learn far more reliably than dynamically homogeneous networks and conventional non-network-based bandit algorithms. Our results indicate how the presence of neuron-astrocyte interaction in the brain may benefit learning over different time scales and the conveyance of task-relevant contextual information onto circuit dynamics.
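To make the task setting concrete, here is a minimal sketch of a bandit whose reward probabilities depend on a context that changes much more slowly than individual pulls. It is an assumption about what such an environment could look like, not the paper's actual task: the class name `SwitchingBandit`, the fixed switching period `switch_every`, and the cyclic context order are all hypothetical.

```python
import random


class SwitchingBandit:
    """Bernoulli bandit whose arm probabilities depend on a slowly cycling context.

    Hypothetical illustration: the context advances once every `switch_every`
    pulls, so context changes are slow relative to within-context decisions.
    """

    def __init__(self, arm_probs_by_context, switch_every, seed=0):
        self.arm_probs_by_context = arm_probs_by_context
        self.switch_every = switch_every
        self.rng = random.Random(seed)
        self.t = 0  # number of pulls made so far

    @property
    def context(self):
        # Index of the active context, cycling through the list in order.
        return (self.t // self.switch_every) % len(self.arm_probs_by_context)

    def pull(self, arm):
        # Reward probability is read from the context active before this pull.
        p = self.arm_probs_by_context[self.context][arm]
        self.t += 1
        return 1.0 if self.rng.random() < p else 0.0


if __name__ == "__main__":
    env = SwitchingBandit([[0.9, 0.1], [0.1, 0.9]], switch_every=100)
    total = sum(env.pull(0) for _ in range(200))
    print("reward from always pulling arm 0:", total)
```

A learner that ignores the context and always pulls the same arm does well in one context and poorly in the other, which is the kind of failure a context-sensitive mechanism would need to overcome.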
