Loïc Cordone

Object Detection with Spiking Neural Networks on Automotive Event Data

May 09, 2022

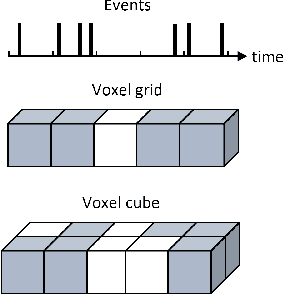

Abstract:Automotive embedded algorithms have very high constraints in terms of latency, accuracy and power consumption. In this work, we propose to train spiking neural networks (SNNs) directly on data coming from event cameras to design fast and efficient automotive embedded applications. Indeed, SNNs are more biologically realistic neural networks where neurons communicate using discrete and asynchronous spikes, a naturally energy-efficient and hardware friendly operating mode. Event data, which are binary and sparse in space and time, are therefore the ideal input for spiking neural networks. But to date, their performance was insufficient for automotive real-world problems, such as detecting complex objects in an uncontrolled environment. To address this issue, we took advantage of the latest advancements in matter of spike backpropagation - surrogate gradient learning, parametric LIF, SpikingJelly framework - and of our new \textit{voxel cube} event encoding to train 4 different SNNs based on popular deep learning networks: SqueezeNet, VGG, MobileNet, and DenseNet. As a result, we managed to increase the size and the complexity of SNNs usually considered in the literature. In this paper, we conducted experiments on two automotive event datasets, establishing new state-of-the-art classification results for spiking neural networks. Based on these results, we combined our SNNs with SSD to propose the first spiking neural networks capable of performing object detection on the complex GEN1 Automotive Detection event dataset.

Learning from Event Cameras with Sparse Spiking Convolutional Neural Networks

Apr 26, 2021

Abstract:Convolutional neural networks (CNNs) are now the de facto solution for computer vision problems thanks to their impressive results and ease of learning. These networks are composed of layers of connected units called artificial neurons, loosely modeling the neurons in a biological brain. However, their implementation on conventional hardware (CPU/GPU) results in high power consumption, making their integration on embedded systems difficult. In a car for example, embedded algorithms have very high constraints in term of energy, latency and accuracy. To design more efficient computer vision algorithms, we propose to follow an end-to-end biologically inspired approach using event cameras and spiking neural networks (SNNs). Event cameras output asynchronous and sparse events, providing an incredibly efficient data source, but processing these events with synchronous and dense algorithms such as CNNs does not yield any significant benefits. To address this limitation, we use spiking neural networks (SNNs), which are more biologically realistic neural networks where units communicate using discrete spikes. Due to the nature of their operations, they are hardware friendly and energy-efficient, but training them still remains a challenge. Our method enables the training of sparse spiking convolutional neural networks directly on event data, using the popular deep learning framework PyTorch. The performances in terms of accuracy, sparsity and training time on the popular DVS128 Gesture Dataset make it possible to use this bio-inspired approach for the future embedding of real-time applications on low-power neuromorphic hardware.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge