Livio Corain

NCIS: Deep Color Gradient Maps Regression and Three-Class Pixel Classification for Enhanced Neuronal Cell Instance Segmentation in Nissl-Stained Histological Images

Jun 27, 2023

Abstract: Deep learning has proven to be more effective than other methods in medical image analysis, including the seemingly simple but challenging task of segmenting individual cells, an essential step for many biological studies. Comparative neuroanatomy studies are an example where the instance segmentation of neuronal cells is crucial for cytoarchitecture characterization. This paper presents an end-to-end framework to automatically segment single neuronal cells in Nissl-stained histological images of the brain, thus aiming to enable solid morphological and structural analyses for the investigation of changes in the brain cytoarchitecture. A U-Net-like architecture with an EfficientNet as the encoder and two decoding branches is exploited to regress four color gradient maps and classify pixels into contours between touching cells, cell bodies, or background. The decoding branches are connected through attention gates to share relevant features, and their outputs are combined to return the instance segmentation of the cells. The method was tested on images of the cerebral cortex and cerebellum, outperforming other recent deep-learning-based approaches for the instance segmentation of cells.
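To illustrate why a three-class pixel map (background, cell body, contour between touching cells) helps separate instances, here is a minimal sketch in plain Python. It is not the paper's post-processing, which also uses the regressed gradient maps; the class codes, the function name `label_instances`, and the 4-connectivity choice are assumptions made for this example only.

```python
from collections import deque

# Hypothetical class codes for the three-class pixel map (assumed, not from the paper).
BACKGROUND, BODY, CONTOUR = 0, 1, 2


def label_instances(class_map):
    """Label 4-connected cell-body regions, then grow labels into contour pixels."""
    h, w = len(class_map), len(class_map[0])
    labels = [[0] * w for _ in range(h)]  # 0 means "no instance"
    neighbours = ((1, 0), (-1, 0), (0, 1), (0, -1))
    next_label = 0

    # Step 1: flood-fill each connected component of cell-body pixels.
    # Contour pixels act as barriers, so touching cells get distinct labels.
    for r in range(h):
        for c in range(w):
            if class_map[r][c] != BODY or labels[r][c]:
                continue
            next_label += 1
            labels[r][c] = next_label
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for dy, dx in neighbours:
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < h and 0 <= nx < w
                            and class_map[ny][nx] == BODY
                            and not labels[ny][nx]):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))

    # Step 2: multi-source breadth-first growth from labelled pixels into
    # contour pixels, so each contour pixel joins a nearby instance.
    frontier = deque((r, c) for r in range(h) for c in range(w) if labels[r][c])
    while frontier:
        y, x = frontier.popleft()
        for dy, dx in neighbours:
            ny, nx = y + dy, x + dx
            if (0 <= ny < h and 0 <= nx < w
                    and class_map[ny][nx] == CONTOUR
                    and not labels[ny][nx]):
                labels[ny][nx] = labels[y][x]
                frontier.append((ny, nx))

    return labels, next_label
```

Called on a small nested list of class codes, the function returns a label image of the same shape together with the number of instances found. Two cell bodies separated only by a column of contour pixels come out as two instances, which would not happen with a plain foreground/background mask.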

MR-NOM: Multi-scale Resolution of Neuronal cells in Nissl-stained histological slices via deliberate Over-segmentation and Merging

Nov 14, 2022

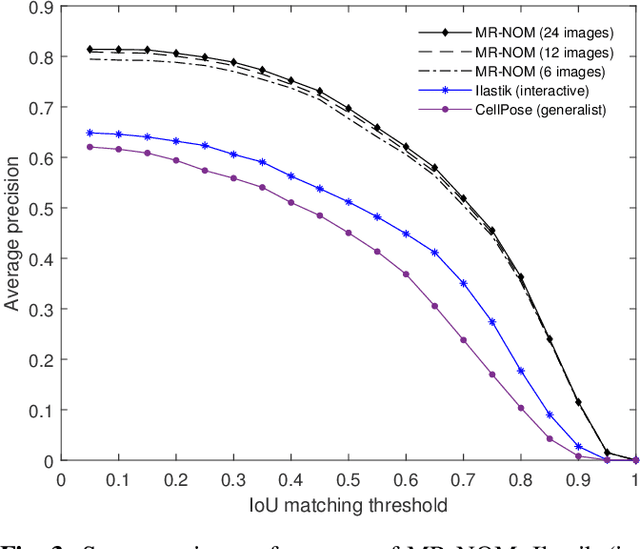

Abstract: In comparative neuroanatomy, the characterization of brain cytoarchitecture is critical to a better understanding of brain structure and function, as it helps to distill information on the development, evolution, and distinctive features of different populations. The automatic segmentation of individual brain cells is a primary prerequisite and yet remains challenging. A new method (MR-NOM) was developed for the instance segmentation of cells in Nissl-stained histological images of the brain. MR-NOM exploits a multi-scale approach to deliberately over-segment the cells into superpixels and subsequently merge them via a classifier based on shape, structure, and intensity features. The method was tested on images of the cerebral cortex, proving successful in dealing with cells of varying characteristics that partially touch or overlap, showing better performance than two state-of-the-art methods.
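The over-segment-then-merge idea can be sketched as follows, in plain Python. This is an illustration under stated assumptions, not MR-NOM itself: the function name `merge_superpixels`, the per-superpixel feature dictionary, the adjacency list, and the `should_merge` callable (standing in for the paper's trained classifier) are all hypothetical, and the multi-scale aspect is omitted.

```python
def merge_superpixels(features, adjacency, should_merge):
    """Group adjacent superpixels whenever a pairwise decision rule accepts them.

    features:     dict mapping superpixel id -> feature value (any type)
    adjacency:    iterable of (id_a, id_b) pairs of neighbouring superpixels
    should_merge: callable(feature_a, feature_b) -> bool; a stand-in for a
                  trained classifier (assumption for this sketch)
    """
    # Union-find forest: every superpixel starts as its own group.
    parent = {sp: sp for sp in features}

    def find(sp):
        # Follow parent links to the group root, halving the path on the way.
        while parent[sp] != sp:
            parent[sp] = parent[parent[sp]]
            sp = parent[sp]
        return sp

    # Merge the groups of every adjacent pair the rule accepts.
    for a, b in adjacency:
        if should_merge(features[a], features[b]):
            root_a, root_b = find(a), find(b)
            if root_a != root_b:
                parent[root_b] = root_a

    # Collect the members of each group; each group is one candidate cell.
    groups = {}
    for sp in features:
        groups.setdefault(find(sp), []).append(sp)
    return sorted(sorted(members) for members in groups.values())
```

For example, with mean intensity as the only feature and a rule such as `lambda a, b: abs(a - b) < 0.1`, neighbouring superpixels of similar intensity end up in one group while a clearly darker or brighter neighbour stays separate. A real classifier would use the shape, structure, and intensity features mentioned in the abstract.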
