Leandro Santiago de Araujo

Time series forecasting for multidimensional telemetry data using GAN and BiLSTM in a Digital Twin

Jan 14, 2025

Abstract: Research on digital twins has been increasing in recent years. Besides mirroring the physical world in the digital one, there is a need to provide services based on the data collected and transferred to the virtual world. One of these services is forecasting the future behavior of the physical part, which could enable applications such as preventing harmful events or designing improvements for better performance. One strategy for predicting a system's operation is to use time series models such as ARIMA or LSTM, and improvements have been implemented using these algorithms. Recently, deep learning techniques based on generative models such as Generative Adversarial Networks (GANs) have been proposed to create time series, and LSTM has gained relevance in time series forecasting, but both have limitations that restrict the forecasting results. Another issue found in the literature is the challenge of handling multivariate environments and applications in time series generation. Therefore, new methods need to be studied to fill these gaps and, consequently, provide better resources for creating useful digital twins. This proposal studies the integration of a BiLSTM layer with a time series obtained by a GAN in order to improve the forecasting accuracy of all the features provided by the dataset and, consequently, improve behavior prediction.

Weightless Neural Networks for Efficient Edge Inference

Mar 03, 2022

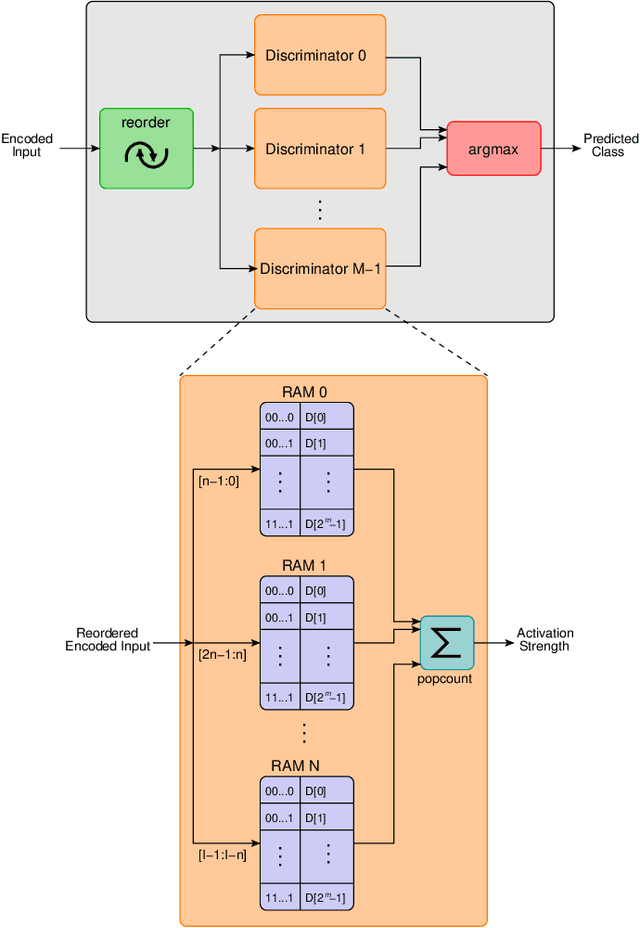

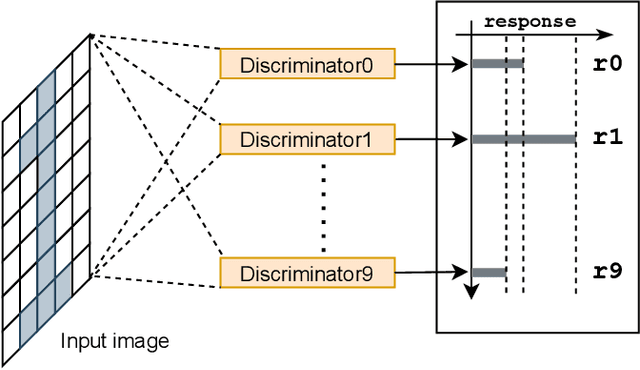

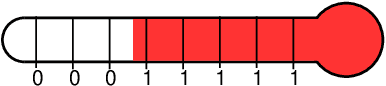

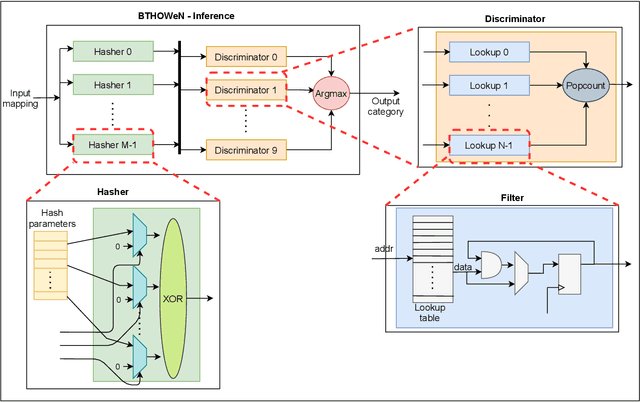

Abstract: Weightless Neural Networks (WNNs) are a class of machine learning models that use table lookups to perform inference. This is in contrast with Deep Neural Networks (DNNs), which use multiply-accumulate operations. State-of-the-art WNN architectures have a fraction of the implementation cost of DNNs, but still lag behind them in accuracy on common image recognition tasks. Additionally, many existing WNN architectures suffer from high memory requirements. In this paper, we propose a novel WNN architecture, BTHOWeN, with key algorithmic and architectural improvements over prior work, namely counting Bloom filters, hardware-friendly hashing, and Gaussian-based nonlinear thermometer encodings, to improve model accuracy and reduce area and energy consumption. BTHOWeN targets the large and growing edge computing sector by providing superior latency and energy efficiency compared with similarly accurate quantized DNNs. Compared to state-of-the-art WNNs across nine classification datasets, BTHOWeN on average reduces error by more than 40% and model size by more than 50%. We then demonstrate the viability of the BTHOWeN architecture by presenting an FPGA-based accelerator, and compare its latency and resource usage against similarly accurate quantized DNN accelerators, including Multi-Layer Perceptron (MLP) and convolutional models. The proposed BTHOWeN models consume almost 80% less energy than the MLP models, with a nearly 85% reduction in latency. In our quest for efficient ML on the edge, WNNs are clearly deserving of additional attention.
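To make the lookup-based idea more concrete, here is a minimal Python sketch of two ingredients the abstract names: a Gaussian-based thermometer encoding and a counting Bloom filter queried through an XOR-based hash. It is an illustration under stated assumptions, not the BTHOWeN implementation: the names `gaussian_thermometer` and `CountingBloomFilter`, the quantile-based thresholds, the "increment the smallest counters" training rule, and the XOR hash are all choices made here for the example.

```python
import random
from statistics import NormalDist


def gaussian_thermometer(x, mean, std, bits):
    """Encode x as `bits` unary bits (assumed scheme, for illustration).

    Thresholds sit at evenly spaced quantiles of a Gaussian with the given
    mean and standard deviation, so they are denser near the mean.
    """
    dist = NormalDist(mean, std)
    thresholds = [dist.inv_cdf(i / (bits + 1)) for i in range(1, bits + 1)]
    return [1 if x > t else 0 for t in thresholds]


class CountingBloomFilter:
    """Hypothetical counting Bloom filter over binary input patterns."""

    def __init__(self, input_bits, table_size, num_hashes, seed=0):
        # A power-of-two table keeps every XOR result inside the table.
        assert table_size > 0 and table_size & (table_size - 1) == 0
        rng = random.Random(seed)
        self.counters = [0] * table_size
        # One random parameter per input bit, per hash function.
        self.params = [
            [rng.randrange(table_size) for _ in range(input_bits)]
            for _ in range(num_hashes)
        ]

    def _indices(self, bits):
        # XOR together the parameters of the input bits that are set.
        indices = []
        for params in self.params:
            h = 0
            for bit, p in zip(bits, params):
                if bit:
                    h ^= p
            indices.append(h)
        return indices

    def train(self, bits):
        # Increment only the smallest counters among the hashed positions.
        idx = set(self._indices(bits))
        lowest = min(self.counters[i] for i in idx)
        for i in idx:
            if self.counters[i] == lowest:
                self.counters[i] += 1

    def query(self, bits, threshold=1):
        # A pattern counts as seen if its smallest counter reaches threshold.
        return min(self.counters[i] for i in self._indices(bits)) >= threshold


if __name__ == "__main__":
    pattern = gaussian_thermometer(0.1, mean=0.0, std=1.0, bits=3)
    bloom = CountingBloomFilter(input_bits=3, table_size=16, num_hashes=2)
    bloom.train(pattern)
    print(pattern, bloom.query(pattern))
```

In this sketch, inference needs only hashing, table reads, and a comparison, with no multiply-accumulate operations. Raising `threshold` at query time makes the filter ignore patterns seen only rarely during training.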
