Laurie R. Margolies

Self-Supervised Deep Learning to Enhance Breast Cancer Detection on Screening Mammography

Mar 16, 2022

Abstract: A major limitation in applying deep learning to artificial intelligence (AI) systems is the scarcity of high-quality curated datasets. We investigate strong-augmentation-based self-supervised learning (SSL) techniques to address this problem. Using breast cancer detection as an example, we first identify a mammogram-specific transformation paradigm and then systematically compare four recent SSL methods representing a diversity of approaches. We develop a method to convert a pretrained model from making predictions on uniformly tiled patches to making predictions on whole images, and an attention-based pooling method that improves classification performance. We found that the best SSL model substantially outperformed the baseline supervised model. The best SSL model also improved the data efficiency of sample labeling by nearly 4-fold and was highly transferable from one dataset to another. SSL represents a major breakthrough in computer vision and may help the field of AI for medical imaging shift away from supervised learning and its dependency on scarce labels.
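The abstract does not describe how the attention-based pooling is implemented. As a rough illustration of the general idea only, the sketch below scores each patch feature vector, turns the scores into softmax weights, and returns the weighted sum as an image-level feature. The function name `attention_pool` and the single scoring vector `scoring_vector` are hypothetical and are not taken from the paper.

```python
import math


def attention_pool(patch_features, scoring_vector):
    """Pool per-patch feature vectors into one image-level vector.

    patch_features: list of equal-length feature vectors, one per patch.
    scoring_vector: hypothetical learned vector used to score each patch
                    (an assumption for illustration, not the paper's design).
    Returns (pooled_vector, attention_weights).
    """
    # Score each patch with a dot product against the scoring vector.
    scores = [
        sum(f * w for f, w in zip(feat, scoring_vector))
        for feat in patch_features
    ]
    # Softmax over patches; subtracting the max keeps exp() stable.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Attention-weighted sum of the patch features.
    dim = len(patch_features[0])
    pooled = [
        sum(w * feat[d] for w, feat in zip(weights, patch_features))
        for d in range(dim)
    ]
    return pooled, weights
```

With a zero scoring vector every patch receives the same weight, so the result reduces to plain average pooling; a trained scoring vector would instead concentrate weight on the patches it scores highest.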

End-to-end Training for Whole Image Breast Cancer Screening using An All Convolutional Design

Sep 22, 2018

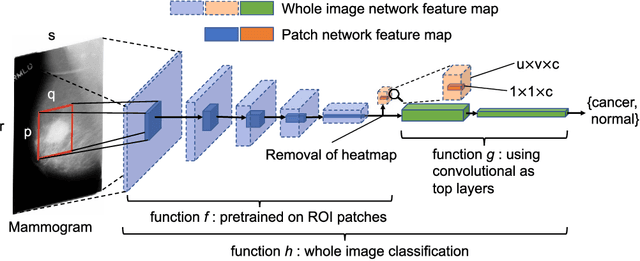

Abstract: An end-to-end training algorithm for the detection and classification of breast cancer on digital mammograms was created. In the initial training stage, lesion annotations were used, but in subsequent stages a whole image classifier was trained using only image-level labels, eliminating the reliance on rarely available lesion annotations. The simple all convolutional design provided superior performance in comparison with previous methods. For example, on the Digital Database for Screening Mammography (DDSM), the best single model achieved a per-image AUC of 0.88 on a holdout test set, and three-model averaging increased the AUC to 0.91. On an independent holdout set of images from the INbreast database, the best single model achieved a per-image AUC of 0.96. We also demonstrated that a whole image model trained on DDSM can be transferred to INbreast without using its lesion annotations and using only a small amount of INbreast data for fine-tuning. Code and model available at: https://github.com/lishen/end2end-all-conv
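To make the per-image AUC and model-averaging figures above more concrete, here is a minimal sketch of how such numbers could be computed, assuming each model outputs one malignancy score per image and that averaging means taking the mean of those scores. The helper names `auc` and `average_scores` and the toy data are illustrative assumptions; this is not the code from the linked repository.

```python
def auc(labels, scores):
    """Per-image AUC via pairwise comparison (Mann-Whitney statistic).

    labels: 0/1 ground truth per image; scores: predicted score per image.
    Ties between a positive and a negative image count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


def average_scores(per_model_scores):
    """Average the per-image scores of several models (simple ensemble)."""
    n_models = len(per_model_scores)
    return [sum(col) / n_models for col in zip(*per_model_scores)]


# Toy data for illustration only; not results from the paper.
labels = [0, 0, 1, 1]
model_a = [0.1, 0.6, 0.5, 0.9]
model_b = [0.4, 0.2, 0.7, 0.3]
ensemble = average_scores([model_a, model_b])
```

Calling `auc(labels, ensemble)` and comparing it with `auc(labels, model_a)` and `auc(labels, model_b)` shows how averaging can cancel out errors that the individual models make on different images.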
