Larry Smarr

Towards a Dynamic Composability Approach for using Heterogeneous Systems in Remote Sensing

Nov 13, 2022

Abstract: Influenced by advances in data and computing, scientific practice increasingly involves methods driven by machine learning and artificial intelligence, which require specialized capabilities at the system, science, and service levels in addition to conventional large-capacity supercomputing approaches. The latest distributed architectures, built around the composability of data-centric applications, have led to the emergence of a new ecosystem for container coordination and integration. However, there is still a divide between the application development pipelines of existing supercomputing environments and these new dynamic environments, which disaggregate fluid resource pools through accessible, portable, and re-programmable interfaces. New approaches for dynamic composability of heterogeneous systems are needed to further advance data-driven scientific practice, with the goals of more efficient computing and usable tools for specific scientific domains. In this paper, we present a novel approach for using composable systems at the intersection of scientific computing, artificial intelligence (AI), and remote sensing. We describe the architecture of a first working example of a composable infrastructure that federates Expanse, an NSF-funded supercomputer, with Nautilus, a Kubernetes-based, geo-distributed GPU cluster. We also summarize a case study in wildfire modeling that demonstrates the application of this new infrastructure in scientific workflows: a composed system that bridges the insights from edge sensing, AI, and computing capabilities with a physics-driven simulation.

Workflow-Driven Distributed Machine Learning in CHASE-CI: A Cognitive Hardware and Software Ecosystem Community Infrastructure

Feb 26, 2019

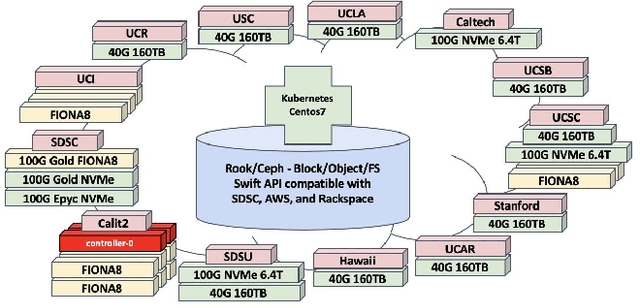

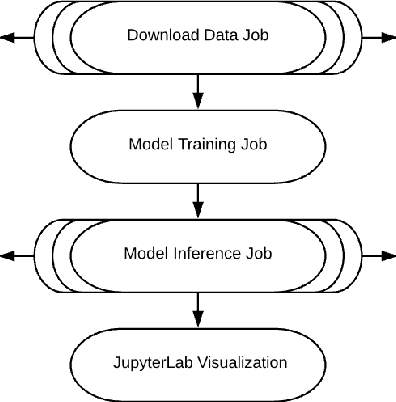

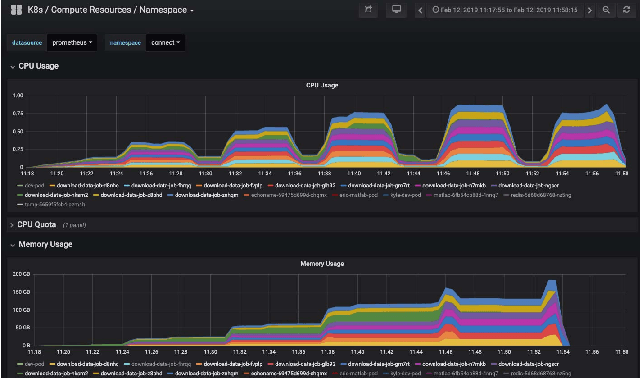

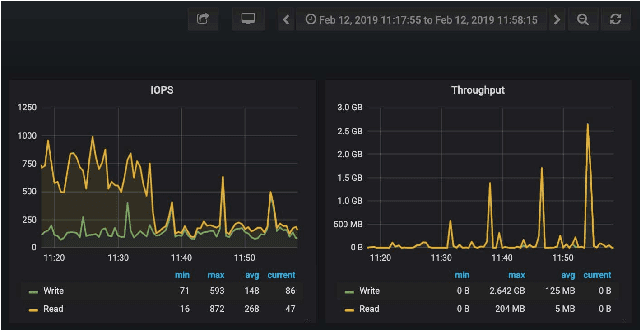

Abstract: Advances in data, computing, and networking over the last two decades have led to a shift in many application domains toward including machine learning on big data as part of the scientific process, requiring new capabilities for integrated and distributed hardware and software infrastructure. This paper contributes a workflow-driven approach for dynamic data-driven application development on top of a new kind of networked cyberinfrastructure called CHASE-CI. In particular, we present: 1) the architecture for CHASE-CI, a network of distributed fast GPU appliances for machine learning and storage, managed through Kubernetes on the high-speed (10-100 Gbps) Pacific Research Platform (PRP); 2) a machine learning software containerization approach and the libraries required for turning such a network into a distributed computer for big data analysis; 3) an atmospheric science case study that can only be made scalable with an infrastructure like CHASE-CI; 4) capabilities for virtual cluster management for data communication and analysis in a dynamically scalable fashion, and for near-real-time visualization across the network in specialized visualization facilities; and 5) a step-by-step workflow and performance measurement approach that enables taking advantage of the dynamic architecture of the CHASE-CI network and container management infrastructure.
