Léopold Crestel

The Piano Inpainting Application

Jul 13, 2021

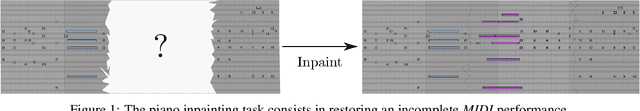

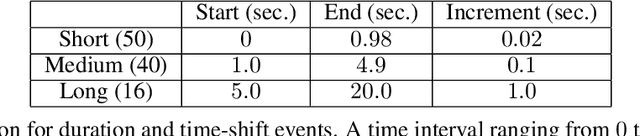

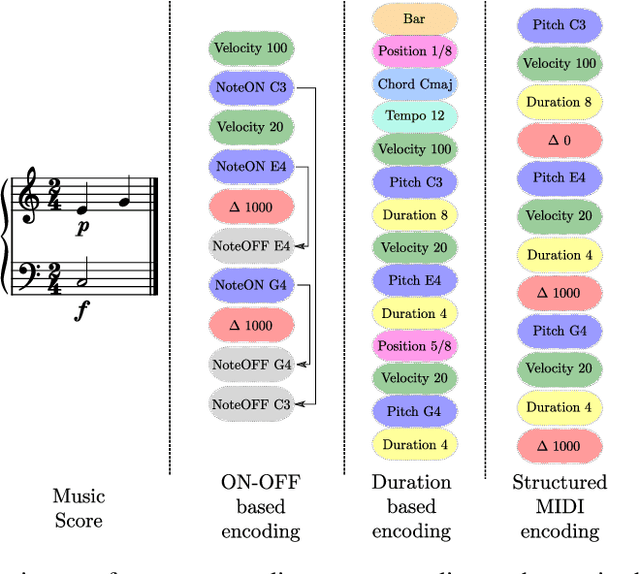

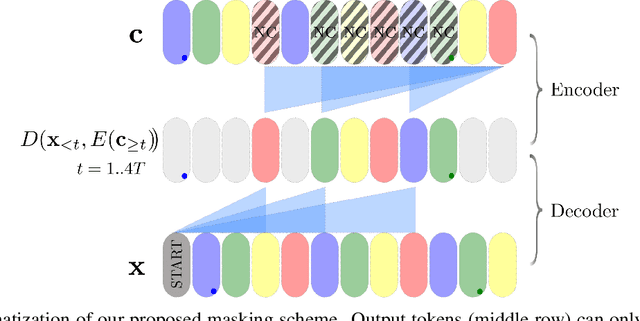

Abstract: Autoregressive models are now capable of generating high-quality minute-long expressive MIDI piano performances. Even though this progress suggests new tools to assist music composition, we observe that generative algorithms are still not widely used by artists due to the limited control they offer, prohibitive inference times or the lack of integration within musicians' workflows. In this work, we present the Piano Inpainting Application (PIA), a generative model focused on inpainting piano performances, as we believe that this elementary operation (restoring missing parts of a piano performance) encourages human-machine interaction and opens up new ways to approach music composition. Our approach relies on an encoder-decoder Linear Transformer architecture trained on a novel representation for MIDI piano performances termed Structured MIDI Encoding. By uncovering an interesting synergy between Linear Transformers and our inpainting task, we are able to efficiently inpaint contiguous regions of a piano performance, which makes our model suitable for interactive and responsive A.I.-assisted composition. Finally, we introduce our freely-available Ableton Live PIA plugin, which allows musicians to smoothly generate or modify any MIDI clip using PIA within a widely-used professional Digital Audio Workstation.

Vector Quantized Contrastive Predictive Coding for Template-based Music Generation

Apr 21, 2020

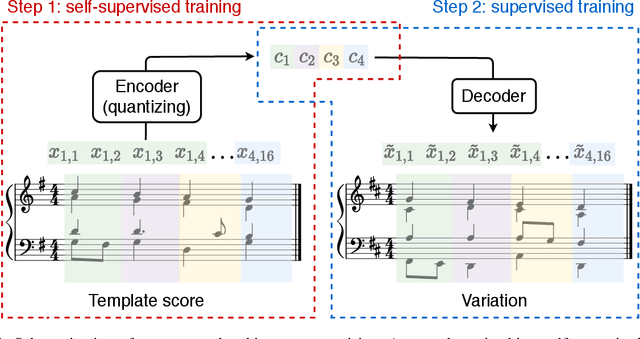

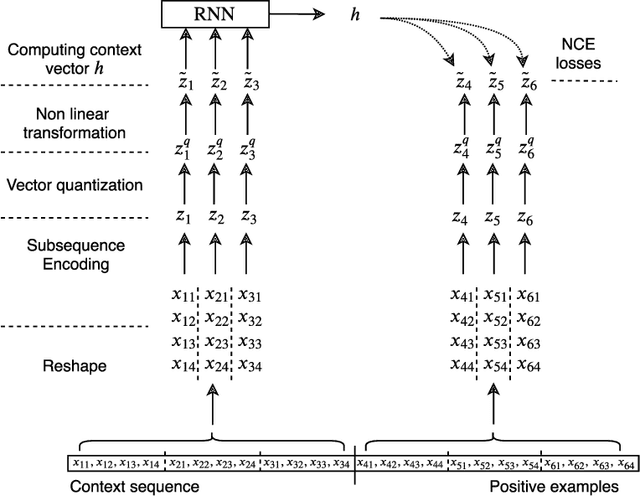

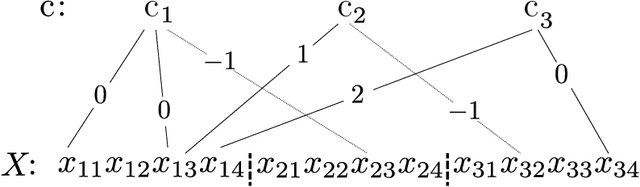

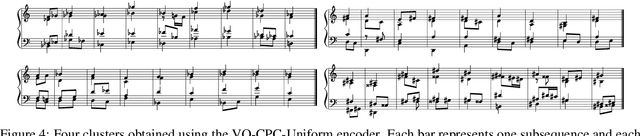

Abstract: In this work, we propose a flexible method for generating variations of discrete sequences in which tokens can be grouped into basic units, like sentences in a text or bars in music. More precisely, given a template sequence, we aim at producing novel sequences sharing perceptible similarities with the original template without relying on any annotation; our problem of generating variations is therefore intimately linked to the problem of learning relevant high-level representations without supervision. Our contribution is two-fold. First, we propose a self-supervised encoding technique, named Vector Quantized Contrastive Predictive Coding, which learns a meaningful assignment of the basic units over a discrete set of codes, together with mechanisms for controlling the information content of these learnt discrete representations. Second, we show how these compressed representations can be used to generate variations of a template sequence by using an appropriate attention pattern in the Transformer architecture. We illustrate our approach on the corpus of J.S. Bach chorales, where we discuss the musical meaning of the learnt discrete codes and show that our proposed method generates coherent and high-quality variations of a given template.
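As a rough illustration of what assigning basic units to "a discrete set of codes" can look like, the sketch below shows only a generic nearest-neighbour vector quantization step. It is not the paper's implementation: the encoder and the contrastive training objective are omitted, and the function name `assign_codes`, the two-dimensional embeddings, and the two-entry codebook are all hypothetical values chosen for the example.

```python
def assign_codes(unit_embeddings, codebook):
    """Return, for each embedding, the index of its nearest codebook vector.

    Generic vector quantization by squared Euclidean distance.
    Both arguments are lists of equal-length lists of floats.
    """
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return [
        min(range(len(codebook)), key=lambda k: sq_dist(e, codebook[k]))
        for e in unit_embeddings
    ]


# Hypothetical embeddings for three bars and a made-up two-entry codebook.
bars = [[0.1, 0.0], [0.9, 1.2], [0.2, -0.1]]
codebook = [[0.0, 0.0], [1.0, 1.0]]
codes = assign_codes(bars, codebook)
print(codes)
```

In this toy setting the first and third bars receive the same code, which is the kind of shared discrete label a variation-generating model could condition on.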

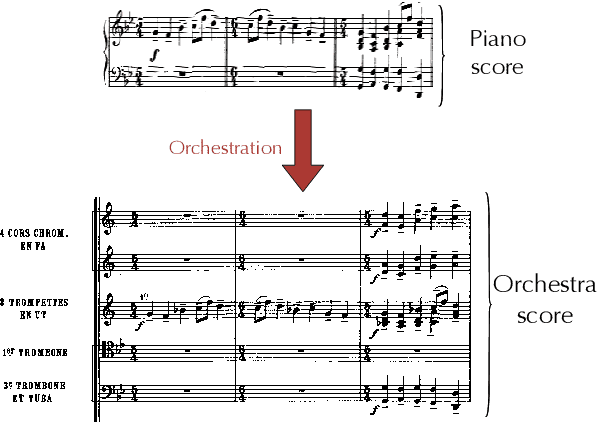

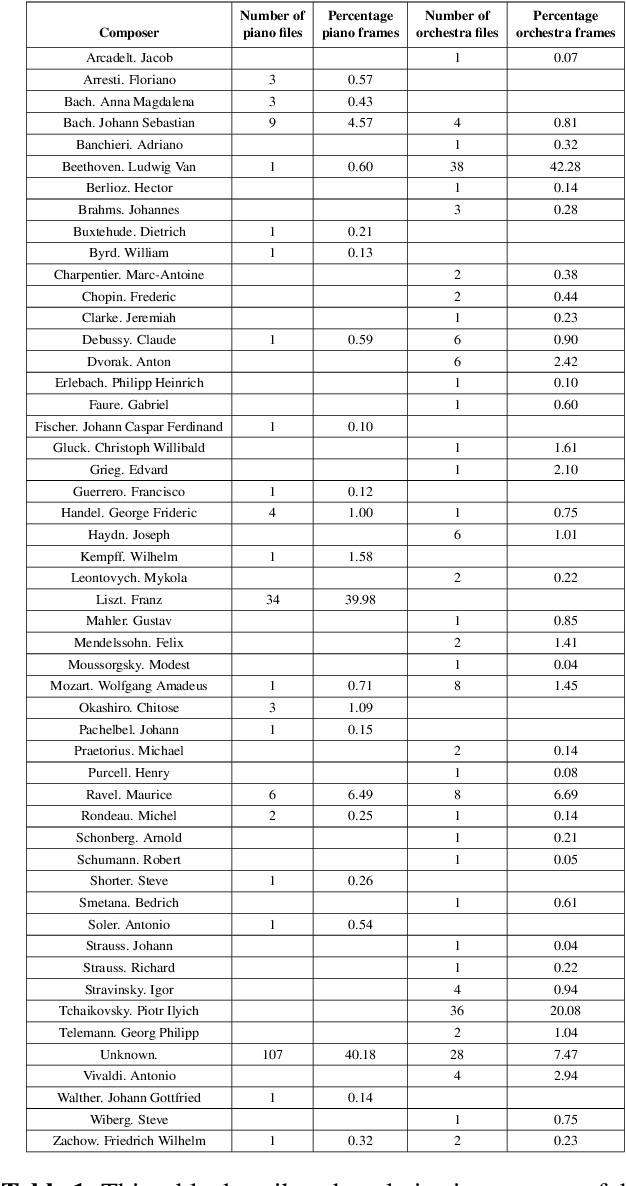

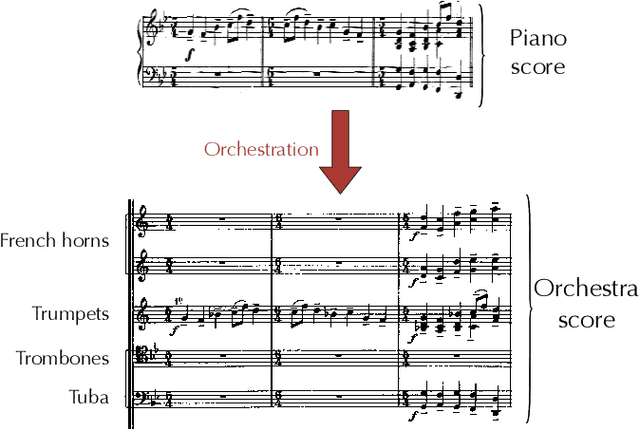

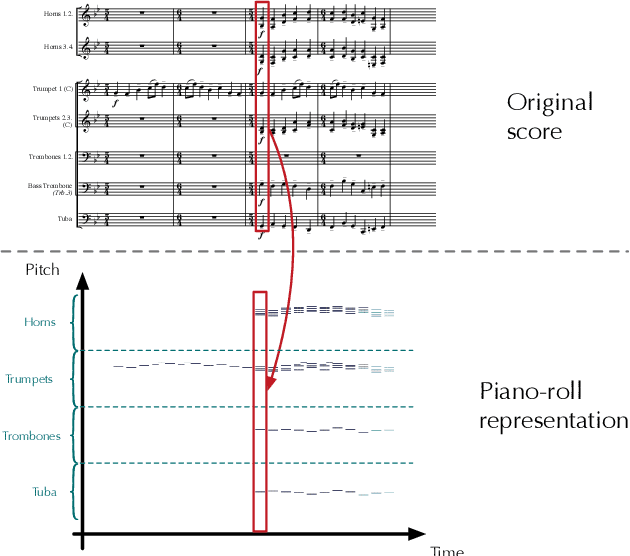

A database linking piano and orchestral MIDI scores with application to automatic projective orchestration

Oct 19, 2018

Abstract: This article introduces the Projective Orchestral Database (POD), a collection of MIDI scores composed of pairs linking piano scores to their corresponding orchestrations. To the best of our knowledge, this is the first database of its kind: it supports piano or orchestral prediction and, more importantly, learning the correlations between piano and orchestral scores. Hence, we also introduce the projective orchestration task, which consists in learning how to perform the automatic orchestration of a piano score. We show how this task can be addressed using learning methods and also provide methodological guidelines in order to properly use this database.

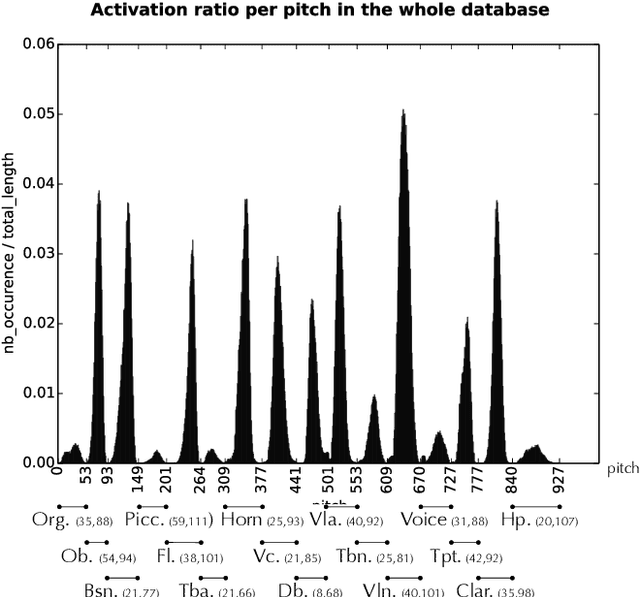

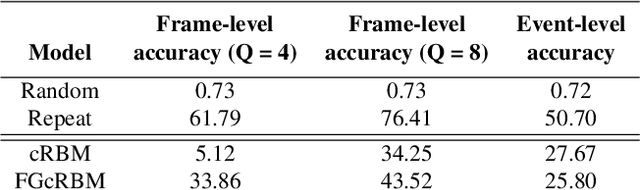

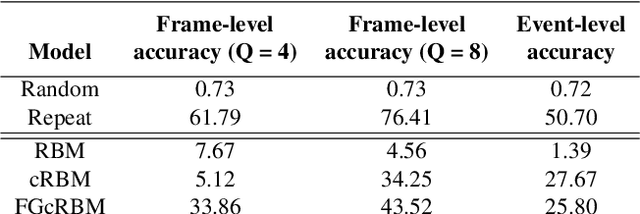

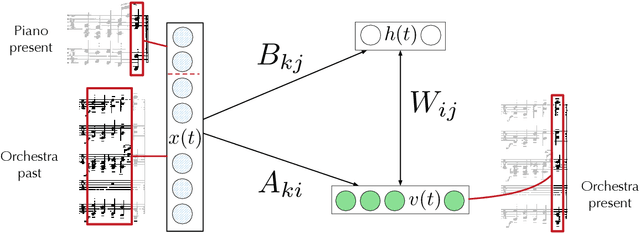

Live Orchestral Piano, a system for real-time orchestral music generation

May 18, 2017

Abstract: This paper introduces the first system for performing automatic orchestration based on a real-time piano input. We believe that it is possible to learn the underlying regularities existing between piano scores and their orchestrations by renowned composers, in order to automatically perform this task on novel piano inputs. To that end, we investigate a class of statistical inference models called conditional Restricted Boltzmann Machines (cRBM). We introduce a specific evaluation framework for orchestral generation based on a prediction task in order to assess the quality of different models. As prediction and creation are two widely different endeavours, we discuss the potential biases in evaluating temporal generative models through prediction tasks and their impact on a creative system. Finally, we introduce an implementation of the proposed model called Live Orchestral Piano (LOP), which performs real-time projective orchestration of a MIDI keyboard input.
