Kyungmi Lee

Rethinking Empirical Evaluation of Adversarial Robustness Using First-Order Attack Methods

Jun 01, 2020

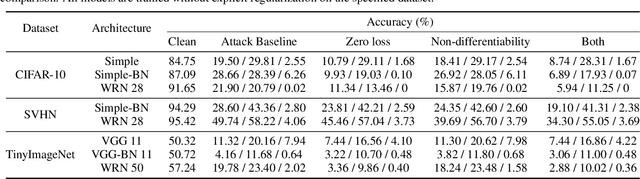

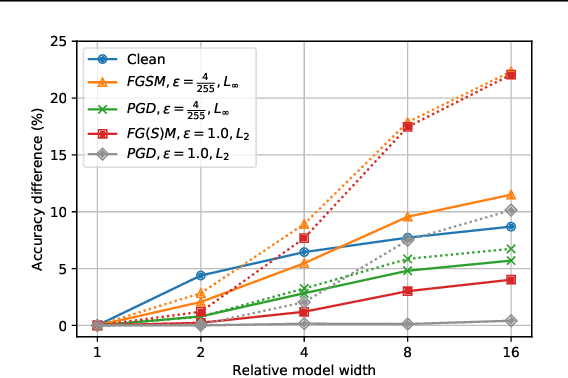

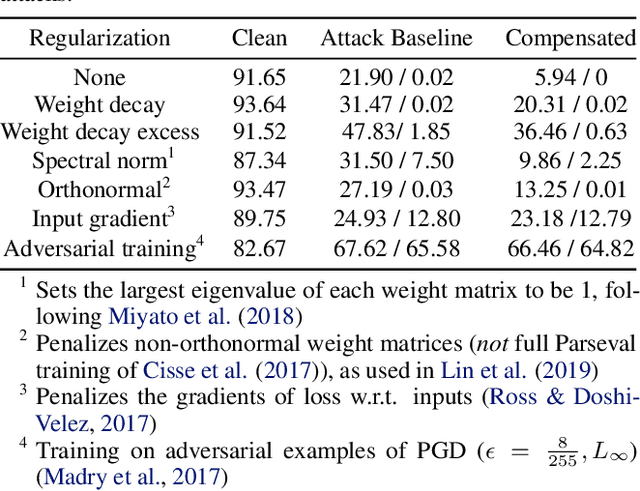

Abstract: We identify three common cases that lead to overestimation of adversarial accuracy against bounded first-order attack methods, a metric widely used as a proxy for adversarial robustness in empirical studies. For each case, we propose compensation methods that either address sources of inaccurate gradient computation, such as numerical instability near zero and non-differentiability, or reduce the total number of back-propagations for iterative attacks by approximating second-order information. These compensation methods can be combined with existing attack methods for a more precise empirical evaluation metric. We illustrate the impact of these three cases with examples of practical interest, such as benchmarking model capacity and regularization techniques for robustness. Overall, our work shows that overestimated adversarial accuracy that is not indicative of robustness is prevalent even for conventionally trained deep neural networks, and highlights the need for caution when using empirical evaluation without guaranteed bounds.
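As a rough illustration of what "adversarial accuracy against a bounded first-order attack" measures, the sketch below runs a single-step sign-gradient attack (FGSM, chosen here for brevity and not taken from the paper) on a toy logistic model. The model weights, data points, and helper names are all hypothetical. The last lines show one plausible way an inaccurate gradient can inflate the metric: when the sigmoid saturates in floating point, the computed gradient is exactly zero and the attack leaves the input unchanged.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def logit(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict(w, b, x):
    return 1 if logit(w, b, x) > 0 else 0

def sign(v):
    # Returns -1, 0, or 1; a zero gradient yields no perturbation.
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    # For a logistic model with cross-entropy loss, d(loss)/dx = (p - y) * w.
    p = sigmoid(logit(w, b, x))
    grad = [(p - y) * wi for wi in w]
    # One step of size eps in the L-infinity ball around x.
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

def accuracy(w, b, xs, ys, eps=0.0):
    # eps = 0 gives clean accuracy; eps > 0 gives adversarial accuracy.
    correct = 0
    for x, y in zip(xs, ys):
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        correct += predict(w, b, x_eval) == y
    return correct / len(xs)

# Hypothetical toy model and data.
w, b = [1.0, -1.0], 0.0
xs = [[0.3, 0.0], [2.0, 0.0], [0.0, 0.2], [0.0, 3.0]]
ys = [1, 1, 0, 0]

clean_acc = accuracy(w, b, xs, ys)
adv_acc = accuracy(w, b, xs, ys, eps=0.25)

# Saturated case: sigmoid(50.0) rounds to 1.0, so (p - y) is exactly 0
# and the attack returns the input unchanged, even with a large budget.
x_saturated = [50.0, 0.0]
x_attacked = fgsm(w, b, x_saturated, 1, eps=30.0)
```

In this toy setting a perturbation of size 30 along `[-1, +1]` would flip the prediction for `x_saturated`, yet the computed attack does nothing, so the point is counted as robust. The compensation methods proposed in the paper are not reproduced here.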

Autoencoder-Based Incremental Class Learning without Retraining on Old Data

Jul 18, 2019

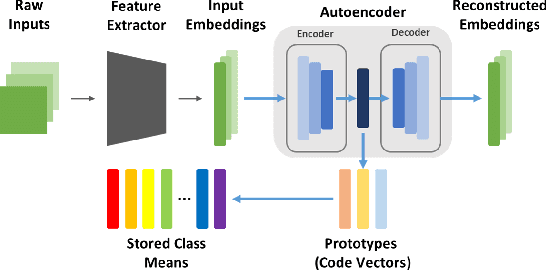

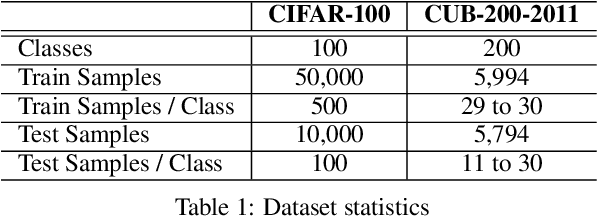

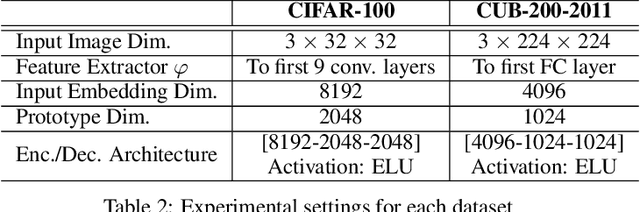

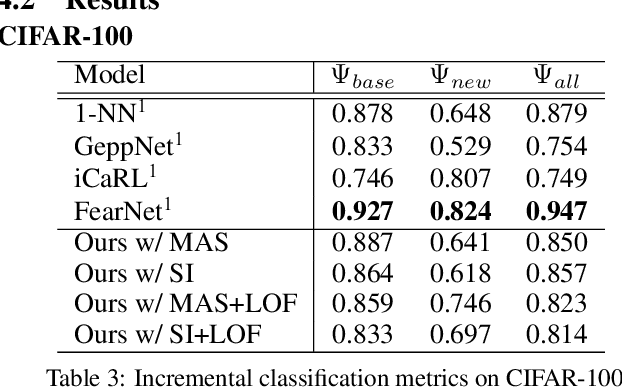

Abstract: Incremental class learning, a continual learning scenario in which classes and their training data are observed sequentially and disjointly, suffers from a problem widely known as catastrophic forgetting. In this work, we propose a novel incremental class learning method that can significantly reduce memory overhead compared to previous approaches. Unlike the conventional classification scheme using softmax, our model is based on an autoencoder that extracts prototypes for given inputs, so that no change in its output unit is required. It stores only the mean of prototypes per class to perform metric-based classification, unlike rehearsal approaches, which rely on a large memory or a generative model. To mitigate catastrophic forgetting, regularization methods are applied to our model when a new task is encountered. We evaluate our method on CIFAR-100 and CUB-200-2011 and show that its performance is comparable to the state-of-the-art method with much lower additional memory cost.
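The metric-based classification step can be sketched as a nearest-class-mean rule over prototypes. This is a minimal illustration only: it assumes prototypes have already been produced by some encoder, uses Euclidean distance as the metric (the abstract does not specify one), and the class name `NearestMeanClassifier` and the sample vectors are hypothetical.

```python
import math

class NearestMeanClassifier:
    """Stores one mean prototype per class; no output layer to resize."""

    def __init__(self):
        self.means = {}  # label -> mean prototype vector

    def add_class(self, label, prototypes):
        # Keep only the per-class mean, not the individual prototypes.
        n = len(prototypes)
        dim = len(prototypes[0])
        self.means[label] = [
            sum(p[i] for p in prototypes) / n for i in range(dim)
        ]

    def predict(self, prototype):
        # Assign the label whose stored mean is closest (Euclidean distance).
        return min(
            self.means,
            key=lambda label: math.dist(prototype, self.means[label]),
        )

clf = NearestMeanClassifier()
clf.add_class("cat", [[0.0, 0.0], [0.0, 2.0]])
clf.add_class("dog", [[4.0, 4.0], [6.0, 4.0]])
before = clf.predict([1.0, 1.0])

# A new class arrives later; existing means are left untouched.
clf.add_class("bird", [[-5.0, -5.0], [-5.0, -7.0]])
after = clf.predict([1.0, 1.0])
new_class = clf.predict([-4.0, -5.0])
```

Adding a class only inserts one more mean vector, which is why the extra memory grows with the number of classes rather than with the number of stored examples. The regularization used to keep the encoder stable across tasks is not shown.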
