Kyeongmo Kim

Clinical Text Generation through Leveraging Medical Concept and Relations

Oct 02, 2019

Figures and Tables:

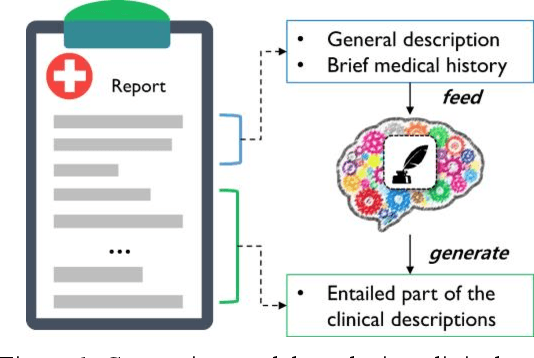

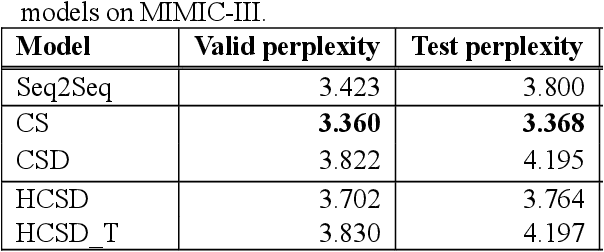

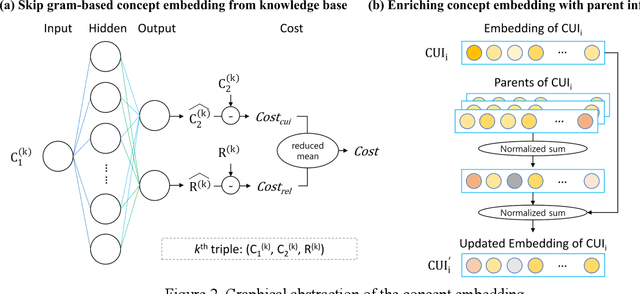

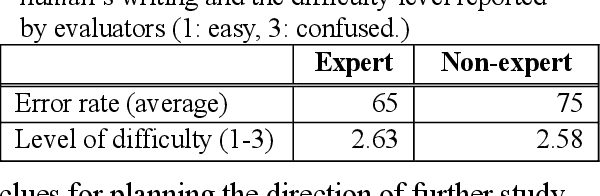

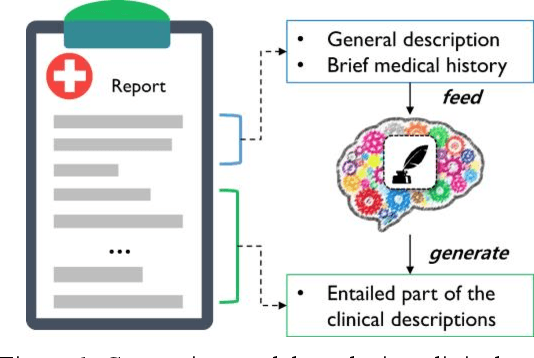

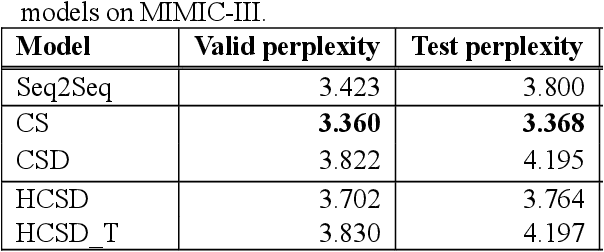

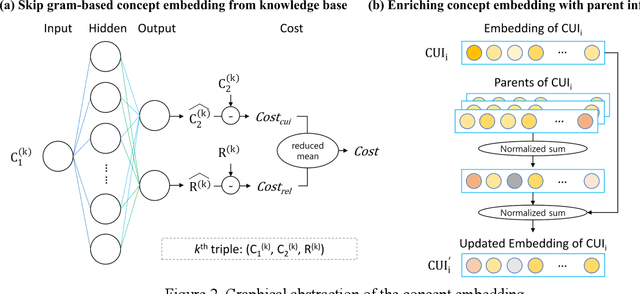

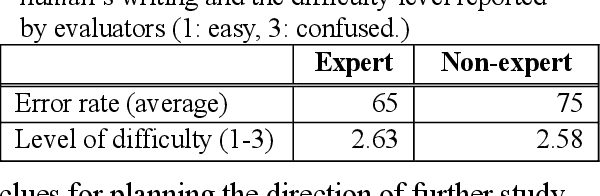

Abstract: Using a neural sequence generation model, this study aims to develop a method for writing patient clinical texts from a brief medical history. As a proof of concept, we demonstrate that medical concept embeddings can be used in clinical text generation. Our model is based on the Sequence-to-Sequence architecture and was trained on a large set of de-identified clinical text data. The quantitative results show that our concept embedding method decreased the perplexity of the baseline architecture. We also discuss the results of a human evaluation performed by medical doctors.

* This is a revised version of the one uploaded to openreview.net

(https://openreview.net/forum?id=Skg6L9ZTpV)
