Kush Dudhia

Generating Knowledge Graphs from Large Language Models: A Comparative Study of GPT-4, LLaMA 2, and BERT

Dec 10, 2024

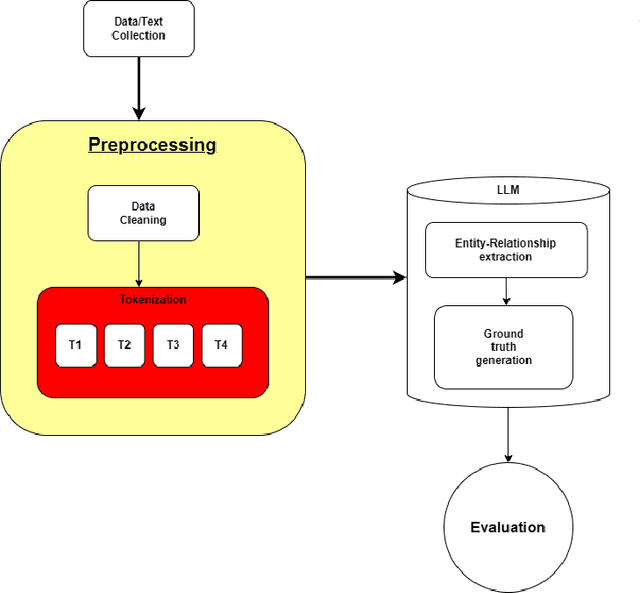

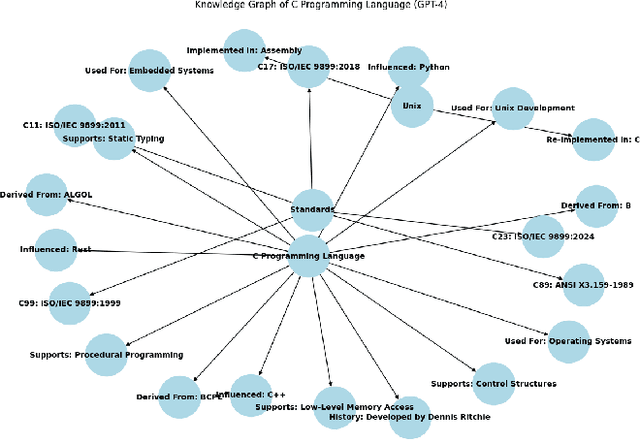

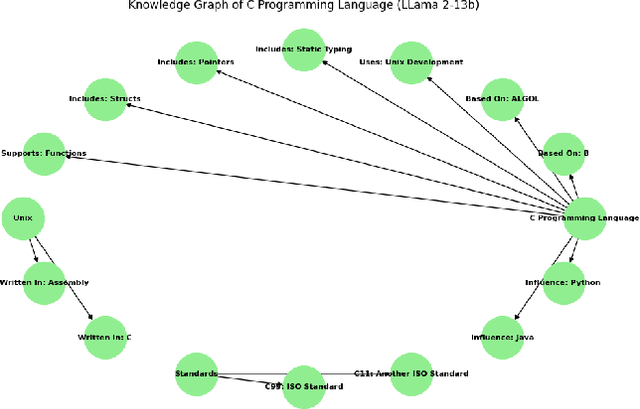

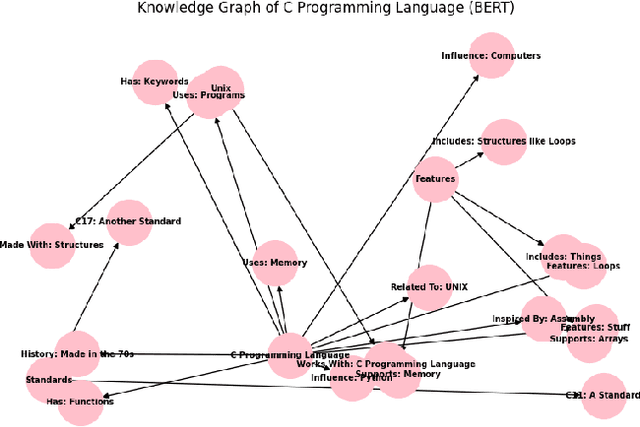

Abstract: Knowledge Graphs (KGs) are essential to GraphRAG, a form of Retrieval-Augmented Generation (RAG) that excels at tasks requiring structured reasoning and semantic understanding. However, building KGs for GraphRAG remains a significant challenge because traditional methods are limited in accuracy and scalability. This paper introduces a novel approach that uses large language models (LLMs), namely GPT-4, LLaMA 2 (13B), and BERT, to generate KGs directly from unstructured data, bypassing traditional pipelines. We evaluate the quality of the generated KGs using metrics such as Precision, Recall, F1-Score, Graph Edit Distance, and Semantic Similarity. Results show that GPT-4 achieves superior semantic fidelity and structural accuracy, LLaMA 2 excels at lightweight, domain-specific graphs, and BERT provides insight into the challenges of entity-relationship modeling. This study underscores the potential of LLMs to streamline KG creation and make GraphRAG more accessible for real-world applications, while laying a foundation for future work.
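To make the triple-level metrics named above concrete, the following is a minimal sketch of how Precision, Recall, and F1-Score could be computed for a generated KG against a reference KG. It assumes that a KG is represented as a set of (subject, relation, object) triples and that a predicted triple counts as correct only on an exact match after lowercasing and trimming whitespace. The abstract does not state the matching rule, so both the representation and the helper names (`normalize`, `triple_prf`) are illustrative assumptions rather than the paper's method.

```python
from typing import Dict, Iterable, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


def normalize(triple: Triple) -> Triple:
    """Lowercase and trim each part so trivial formatting differences do not count as errors."""
    subject, relation, obj = triple
    return (subject.strip().lower(), relation.strip().lower(), obj.strip().lower())


def triple_prf(predicted: Iterable[Triple], gold: Iterable[Triple]) -> Dict[str, float]:
    """Compute exact-match precision, recall, and F1 over sets of triples (assumed matching rule)."""
    pred_set: Set[Triple] = {normalize(t) for t in predicted}
    gold_set: Set[Triple] = {normalize(t) for t in gold}

    true_positives = len(pred_set & gold_set)
    precision = true_positives / len(pred_set) if pred_set else 0.0
    recall = true_positives / len(gold_set) if gold_set else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall) > 0
        else 0.0
    )
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical toy data, not taken from the paper.
gold_triples = [
    ("Marie Curie", "born_in", "Warsaw"),
    ("Marie Curie", "won", "Nobel Prize"),
    ("Warsaw", "capital_of", "Poland"),
]
predicted_triples = [
    ("marie curie", "born_in", "warsaw"),      # matches after normalization
    ("Marie Curie", "won", "Nobel Prize"),     # matches
    ("Marie Curie", "lived_in", "Paris"),      # not in the reference
    ("Poland", "located_in", "Europe"),        # not in the reference
]

scores = triple_prf(predicted_triples, gold_triples)
print(scores)
```

With two of four predicted triples correct and two of three reference triples recovered, precision is 1/2, recall is 2/3, and F1 is 4/7. Exact matching is the strictest choice; a softer rule (for example, embedding-based matching of entity names) would sit closer to the Semantic Similarity metric and would generally yield higher scores.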
