Kshitiz Bansal

CalTag: Robust calibration of mmWave Radar and LiDAR using backscatter tags

Aug 29, 2024

Abstract: The rise of automation in robotics necessitates high-quality perception systems, often built from multiple sensors. A crucial aspect of successfully deploying a multi-sensor system is calibration against a known object, typically called a fiducial. In this work, we propose CalTag, a novel fiducial system for millimeter wave radars. CalTag addresses the limitations of traditional corner-reflector-based calibration methods in extremely cluttered environments. It leverages millimeter wave backscatter technology to achieve more reliable calibration than corner reflectors, enhancing the overall performance of multi-sensor perception systems. We compare performance in several real-world environments and show the improvement achieved by using CalTag as the radar fiducial instead of a corner reflector.
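Once a fiducial has been localized by both sensors, the extrinsic calibration step reduces to fitting a rigid transform between the two sets of observations. The abstract does not describe CalTag's estimation pipeline, so the sketch below is a generic illustration only. It assumes the tag has already been localized in both the radar and the LiDAR frame at several placements, with known correspondences. It also solves only a planar (2D) rotation and translation by closed-form least squares, which is a simplification because real radar-LiDAR extrinsics are 3D. The function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def estimate_planar_extrinsics(radar_pts: List[Point],
                               lidar_pts: List[Point]) -> Tuple[float, float, float]:
    """Least-squares 2D rigid transform (theta, tx, ty) mapping radar points onto LiDAR points.

    Hypothetical input: radar_pts[i] and lidar_pts[i] are the same fiducial
    placement observed by the two sensors (correspondences assumed known).
    """
    if len(radar_pts) != len(lidar_pts) or len(radar_pts) < 2:
        raise ValueError("need at least two matched fiducial observations")
    n = len(radar_pts)
    # Centroids of each point set.
    px = sum(p[0] for p in radar_pts) / n
    py = sum(p[1] for p in radar_pts) / n
    qx = sum(q[0] for q in lidar_pts) / n
    qy = sum(q[1] for q in lidar_pts) / n
    # Sum dot and cross products of the centered pairs; atan2 of them is the optimal rotation.
    dot = cross = 0.0
    for (rx, ry), (lx, ly) in zip(radar_pts, lidar_pts):
        ax, ay = rx - px, ry - py
        bx, by = lx - qx, ly - qy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated radar centroid onto the LiDAR centroid.
    tx = qx - (c * px - s * py)
    ty = qy - (s * px + c * py)
    return theta, tx, ty


def radar_to_lidar(p: Point, theta: float, tx: float, ty: float) -> Point:
    """Apply a planar rotation by theta followed by translation (tx, ty) to a radar-frame point."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)
```

For example, generating LiDAR points with `radar_to_lidar(p, 0.3, 1.5, -0.7)` for a handful of radar points and passing both lists to `estimate_planar_extrinsics` recovers `(0.3, 1.5, -0.7)` up to floating-point error. With real, noisy detections the same closed form gives the least-squares fit. The reliability of the correspondences, which is what a fiducial like CalTag aims to improve in clutter, is what determines the quality of the result.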

R-fiducial: Reliable and Scalable Radar Fiducials for Smart mmwave Sensing

Sep 27, 2022

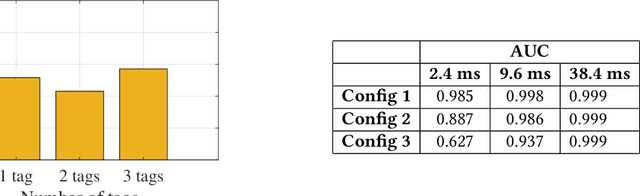

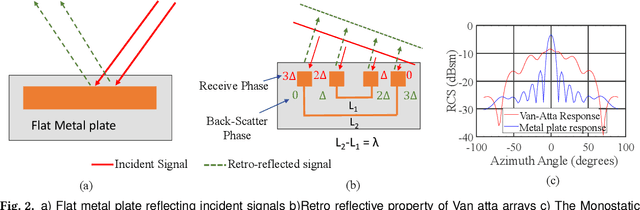

Abstract: Millimeter wave sensing has recently attracted a lot of attention given its robustness to environmental conditions. In situations where visual sensors like cameras fail, mmWave radars can still achieve reliable performance. However, because of the poor scattering properties and lack of texture in millimeter waves, radars cannot be used in several situations that require precise identification of objects. In this paper, we take insight from camera fiducials, which are easily identifiable by a camera, and present R-fiducial tags, which augment existing infrastructure to enable many applications with mmWave radars. R-fiducial acts as a fiducial for mmWave sensing, similar to camera fiducials, and can be reliably identified by a mmWave radar. We identify a precise list of requirements that a millimeter wave fiducial must satisfy and show how R-fiducial meets all of them. R-fiducial uses a novel spread-spectrum modulation technique that provides low latency with high reliability. Our evaluations show that R-fiducial can be reliably detected at ranges up to 25 m and across a field of view of up to 120 degrees, with latency on the order of milliseconds. We also conduct experiments and case studies in adverse and low-visibility conditions to showcase the applicability of R-fiducial in a wide range of applications.
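Spread-spectrum modulation trades bandwidth for robustness. Each data bit is multiplied by a longer chip sequence, and the receiver recovers it by correlating against that sequence. The same correlation also tells the receiver whether, and where, the code is present. The abstract does not specify R-fiducial's spreading code or modulation. The sketch below therefore uses the 13-chip Barker code, a standard direct-sequence spread-spectrum (DSSS) sequence, as a stand-in. It works on idealized real-valued chips rather than radar IF samples, and the function names and the 0.5 detection threshold are hypothetical.

```python
from typing import List, Sequence, Tuple

# 13-chip Barker code: a standard DSSS spreading sequence, used here as a
# stand-in; this is NOT claimed to be R-fiducial's actual code.
BARKER_13 = [1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1]


def spread(bits: Sequence[int], code: Sequence[int] = BARKER_13) -> List[float]:
    """Map each bit to +1/-1 and multiply it by the whole chip sequence."""
    chips: List[float] = []
    for b in bits:
        symbol = 1 if b else -1
        chips.extend(symbol * c for c in code)
    return chips


def despread(chips: Sequence[float], code: Sequence[int] = BARKER_13) -> List[int]:
    """Correlate each code-length block with the code; the sign of the correlation recovers the bit."""
    n = len(code)
    bits = []
    for start in range(0, len(chips) - n + 1, n):
        corr = sum(x * c for x, c in zip(chips[start:start + n], code))
        bits.append(1 if corr > 0 else 0)
    return bits


def detect_code(signal: Sequence[float], code: Sequence[int] = BARKER_13,
                threshold: float = 0.5) -> Tuple[int, float, bool]:
    """Slide the code over the signal and return (best offset, normalized peak, detected?)."""
    n = len(code)
    best_offset, best_score = 0, 0.0
    for offset in range(len(signal) - n + 1):
        corr = sum(x * c for x, c in zip(signal[offset:offset + n], code))
        score = abs(corr) / n  # equals 1.0 for a clean, perfectly aligned code
        if score > best_score:
            best_offset, best_score = offset, score
    return best_offset, best_score, best_score >= threshold
```

The Barker code is chosen because its aperiodic autocorrelation sidelobes never exceed 1 in magnitude against a peak of 13. A correctly aligned correlation therefore stands out clearly even when every chip is perturbed by bounded noise. A longer code would raise this processing gain further at the cost of a longer symbol time, which is the reliability-versus-latency trade-off the abstract alludes to.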

RadSegNet: A Reliable Approach to Radar Camera Fusion

Aug 08, 2022

Abstract: Perception systems for autonomous driving have seen significant performance advances over the last few years. However, these systems struggle to remain robust in extreme weather, because lidars and cameras, the primary sensors in a sensor suite, degrade under these conditions. Camera-radar fusion offers a unique opportunity for reliable, high-quality, all-weather perception: cameras provide rich semantic information, while radars can work through occlusions and in all weather conditions. In this work, we show that state-of-the-art fusion methods perform poorly when the camera input is degraded, which effectively forfeits the all-weather reliability they set out to achieve. In contrast, we propose RadSegNet, a method built on a new design philosophy of independent information extraction that achieves reliability in all conditions, including occlusions and adverse weather. We develop and validate our system on the benchmark Astyx dataset and further verify these results on the RADIATE dataset. Compared to state-of-the-art methods, RadSegNet improves average precision by 27% on Astyx and 41.46% on RADIATE, and maintains significantly better performance in adverse weather conditions.

Pointillism: Accurate 3D bounding box estimation with multi-radars

Mar 08, 2022

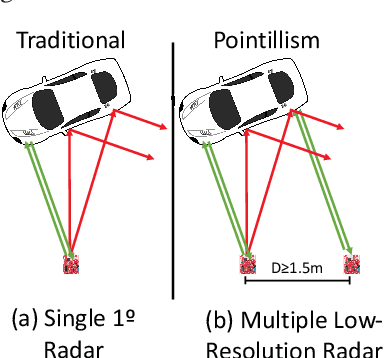

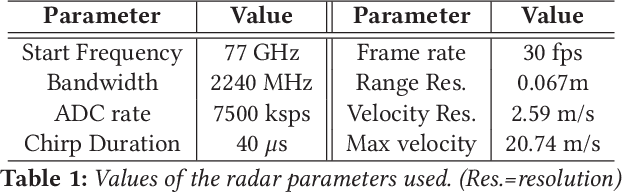

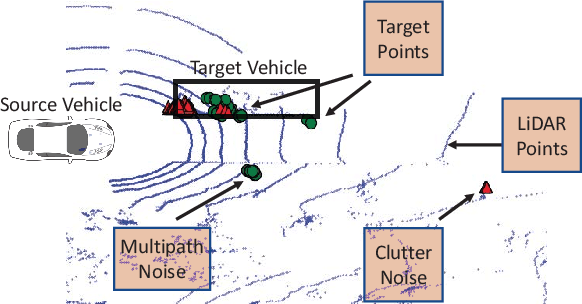

Abstract: Autonomous perception requires high-quality environment sensing in the form of 3D bounding boxes of dynamic objects. The primary sensors used in automotive systems are light-based: cameras and LiDARs. However, they are known to fail in adverse weather conditions. Radars can potentially solve this problem, as they are barely affected by adverse weather. However, specular reflections of wireless signals leave radar point clouds noisy and sparse. We introduce Pointillism, a system that combines data from multiple spatially separated radars, placed with an optimal separation, to mitigate these problems. We introduce a novel concept of Cross Potential Point Clouds, which uses the spatial diversity induced by multiple radars to address noise and sparsity in radar point clouds. Furthermore, we present RP-net, a novel deep learning architecture designed explicitly for radar's sparse data distribution, to enable accurate 3D bounding box estimation. The spatial techniques proposed in this paper address properties fundamental to radar point-cloud distributions and would benefit other radar sensing applications.

* Accepted at SenSys '20. The dataset has been made publicly available.
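Combining spatially separated radars presupposes expressing every radar's detections in one shared frame. Once that is done, a point seen by several radars can be distinguished from one seen by only a single radar. The abstract does not define how Cross Potential Point Clouds are constructed, so the sketch below is explicitly not that formulation. It shows only the generic common-frame step, plus a simple, hypothetical cross-radar support count as one illustration of exploiting spatial diversity. Radar poses are assumed known (for example, from calibration) and planar (x, y, yaw). The function names and the `radius` parameter are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Pose = Tuple[float, float, float]  # assumed (x, y, yaw) of a radar in a shared world frame
Point = Tuple[float, float]


def to_common_frame(clouds: Sequence[Tuple[Pose, Sequence[Point]]]) -> List[Tuple[float, float, int]]:
    """Transform every radar's detections into the world frame, tagging each with its radar index."""
    merged = []
    for idx, ((x0, y0, yaw), points) in enumerate(clouds):
        c, s = math.cos(yaw), math.sin(yaw)
        for px, py in points:
            # Rotate by the radar's yaw, then translate by its position.
            merged.append((c * px - s * py + x0, s * px + c * py + y0, idx))
    return merged


def cross_radar_support(merged: Sequence[Tuple[float, float, int]], radius: float) -> List[int]:
    """For each point, count how many OTHER radars observed a point within `radius` of it.

    Hypothetical heuristic for illustration only: an O(N^2) scan, not the
    paper's Cross Potential Point Clouds.
    """
    counts = []
    for x, y, idx in merged:
        supporters = {j for (u, v, j) in merged
                      if j != idx and math.hypot(u - x, v - y) <= radius}
        counts.append(len(supporters))
    return counts
```

For example, with one radar at the origin facing +x and a second at (10, 0) facing back toward it, a real object at world position (5, 1) appears in both clouds and receives a support count of 1. A spurious detection present in only one radar's cloud receives 0. Specular reflections depend on viewing angle, so agreement across separated viewpoints is a natural signal to exploit. How Pointillism actually weighs it is described in the paper, not here.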
