Kodai Kamiya

Online pre-training with long-form videos

Aug 28, 2024

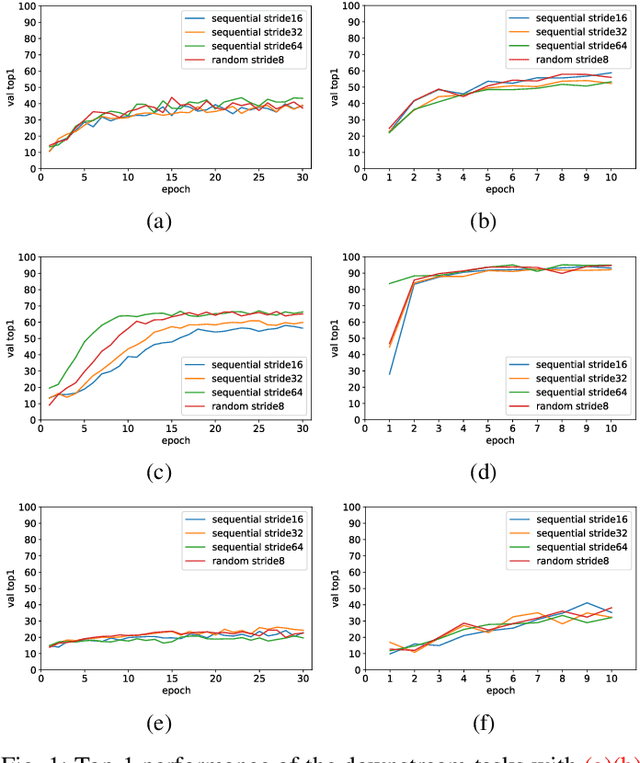

Abstract:In this study, we investigate the impact of online pre-training with continuous video clips. We will examine three methods for pre-training (masked image modeling, contrastive learning, and knowledge distillation), and assess the performance on downstream action recognition tasks. As a result, online pre-training with contrast learning showed the highest performance in downstream tasks. Our findings suggest that learning from long-form videos can be helpful for action recognition with short videos.

Multi-model learning by sequential reading of untrimmed videos for action recognition

Jan 26, 2024Abstract:We propose a new method for learning videos by aggregating multiple models by sequentially extracting video clips from untrimmed video. The proposed method reduces the correlation between clips by feeding clips to multiple models in turn and synchronizes these models through federated learning. Experimental results show that the proposed method improves the performance compared to the no synchronization.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge