Kin Hong Wong

Calibration of an Articulated Camera System with Scale Factor Estimation

Oct 17, 2013

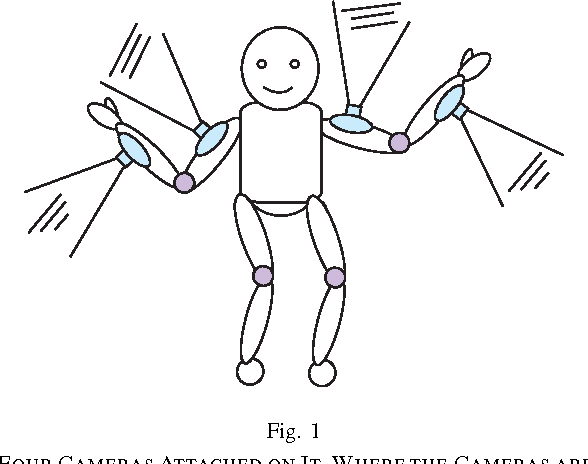

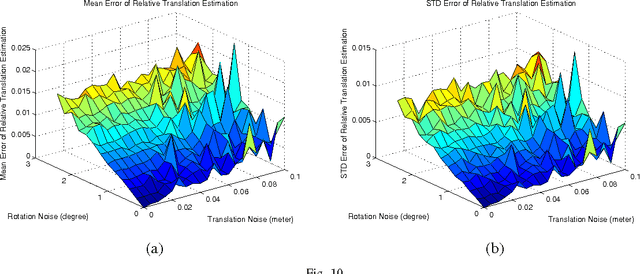

Abstract: Multiple Camera Systems (MCS) have been widely used in many vision applications and have attracted much attention recently. There are two principal types of MCS: the Rigid Multiple Camera System (RMCS) and the Articulated Camera System (ACS). In an RMCS, the relative poses (relative 3-D position and orientation) between the cameras are invariant. In an ACS, by contrast, the cameras are articulated through movable joints, so the relative pose between them may change. Therefore, in calibrating an ACS we want to find not only the relative poses between the cameras but also the positions of the joints in the ACS. In this paper, we develop calibration algorithms for the ACS using a simple constraint: the joint is fixed relative to the cameras connected with it during the transformations of the ACS. When the transformations of the cameras in an ACS can be estimated relative to the same coordinate system, the positions of the joints in the ACS can be calculated by solving linear equations. However, in a non-overlapping view ACS, only the ego-transformations of the cameras can be estimated. We propose a two-step method to deal with this problem. In both methods, the ACS is assumed to have performed general transformations in a static environment. The efficiency and robustness of the proposed methods are tested by simulation and real experiments. In the real experiment, the intrinsic and extrinsic parameters of the ACS are obtained simultaneously by our calibration procedure using the same image sequences; no extra data capturing step is required. The corresponding trajectory is recovered and illustrated using the calibration results of the ACS. Since the estimated translations of different cameras in an ACS may be scaled by different scale factors, a scale factor estimation algorithm is also proposed. To our knowledge, we are the first to study the calibration of ACS.
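
The linear step mentioned in the abstract above (joint positions from camera transformations expressed in one coordinate system) can be illustrated with a small sketch. This is not the paper's algorithm; it is one plausible reading of the stated constraint, under these assumptions: two cameras A and B share one joint, each pose i is given as a camera-to-world pair (R, t), and the joint has unknown constant coordinates `p_a` in A's frame and `p_b` in B's frame. The joint being a single world point then gives R_a p_a + t_a = R_b p_b + t_b for every pose, which is linear in (p_a, p_b). All function and variable names below are hypothetical, and the poses are synthetic and noise-free.

```python
import numpy as np

def random_rotation(rng):
    """Random proper rotation (det = +1) from the QR factor of a Gaussian matrix."""
    q, _ = np.linalg.qr(rng.standard_normal((3, 3)))
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]  # flip one column to turn a reflection into a rotation
    return q

def make_synthetic_poses(p_a, p_b, n_poses, rng):
    """Generate camera-to-world poses for A and B that share one joint point."""
    poses_a, poses_b = [], []
    for _ in range(n_poses):
        R_a, t_a = random_rotation(rng), rng.standard_normal(3)
        R_b = random_rotation(rng)
        # Choose t_b so the joint lands on the same world point for both cameras.
        t_b = R_a @ p_a + t_a - R_b @ p_b
        poses_a.append((R_a, t_a))
        poses_b.append((R_b, t_b))
    return poses_a, poses_b

def solve_joint(poses_a, poses_b):
    """Stack [R_a, -R_b] [p_a; p_b] = t_b - t_a over all poses; solve by least squares."""
    rows, rhs = [], []
    for (R_a, t_a), (R_b, t_b) in zip(poses_a, poses_b):
        rows.append(np.hstack([R_a, -R_b]))
        rhs.append(t_b - t_a)
    A = np.vstack(rows)          # shape (3 * n_poses, 6)
    b = np.concatenate(rhs)      # shape (3 * n_poses,)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    return sol[:3], sol[3:]

rng = np.random.default_rng(0)
true_p_a = np.array([0.10, -0.20, 0.30])   # assumed joint position in camera A's frame
true_p_b = np.array([-0.40, 0.05, 0.25])   # assumed joint position in camera B's frame
poses_a, poses_b = make_synthetic_poses(true_p_a, true_p_b, 6, rng)
est_p_a, est_p_b = solve_joint(poses_a, poses_b)
print("estimated p_a:", est_p_a)
print("estimated p_b:", est_p_b)
```

Each pose contributes three equations for six unknowns, and two poses alone leave the system rank-deficient, so the sketch uses several poses with differing relative rotations. This matches the abstract's assumption that the ACS performs general transformations.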

CSIFT Based Locality-constrained Linear Coding for Image Classification

Sep 28, 2013

Abstract: In the past decade, the SIFT descriptor has been recognized as one of the most robust local invariant feature descriptors and has been widely used in various vision tasks. Most traditional image classification systems depend on luminance-based SIFT descriptors, which analyze only the gray-level variations of the images. Misclassification may happen because the color content of the images is ignored. In this article, we concentrate on improving the performance of existing image classification algorithms by adding color information. To this end, different kinds of colored SIFT descriptors are introduced and implemented. Locality-constrained Linear Coding (LLC), a state-of-the-art sparse coding technique, is employed to construct the image classification system for the evaluation. Experiments are carried out on several benchmarks. With the enhancements of color SIFT, the proposed image classification system obtains an improvement in classification accuracy of approximately 3% on the Caltech-101 dataset and approximately 4% on the Caltech-256 dataset.
