Ken Forbus

Learning Norms via Natural Language Teachings

Jan 20, 2022

Abstract: To interact with humans, artificial intelligence (AI) systems must understand our social world. Within this world, norms play an important role in motivating and guiding agents. However, very few computational theories for learning social norms have been proposed. There is also a long history of debate on the distinction between what is normal (is) and what is normative (ought). Many have argued that all social agents must be capable of learning both concepts and recognizing the difference between them. This paper introduces and demonstrates a computational approach to learning norms from natural language text that accounts for both what is normal and what is normative. It provides a foundation for everyday people to train AI systems about social norms.

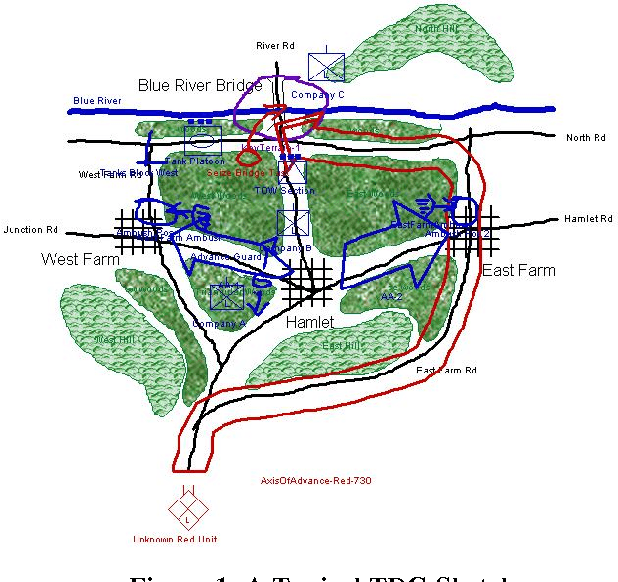

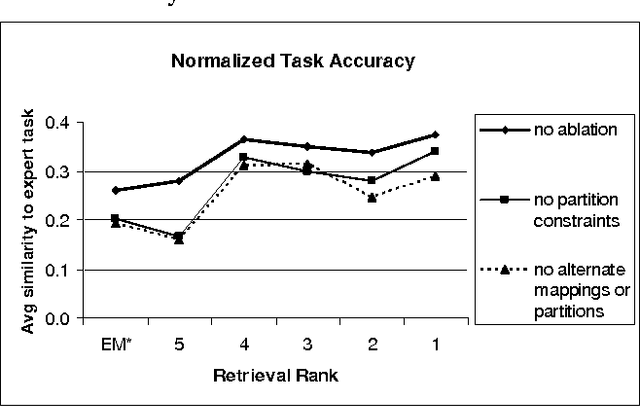

Analogical Learning in Tactical Decision Games

Aug 18, 2021

Abstract: Tactical Decision Games (TDGs) are military conflict scenarios presented both textually and graphically on a map. These scenarios provide a challenging domain for machine learning because they are open-ended, highly structured, and typically contain many details of varying relevance. We have developed a problem-solving component of an interactive companion system that proposes military tasks to solve TDG scenarios using a combination of analogical retrieval, mapping, and constraint propagation. We use this problem-solving component to explore analogical learning. In this paper, we describe the problems encountered in learning for this domain and the methods we have developed to address them, such as partition constraints on analogical mapping correspondences and the use of incremental remapping to improve robustness. We present the results of learning experiments that show improvement in performance through the simple accumulation of examples, despite a weak domain theory.
