Greg Dunham

Analogical Learning in Tactical Decision Games

Aug 18, 2021

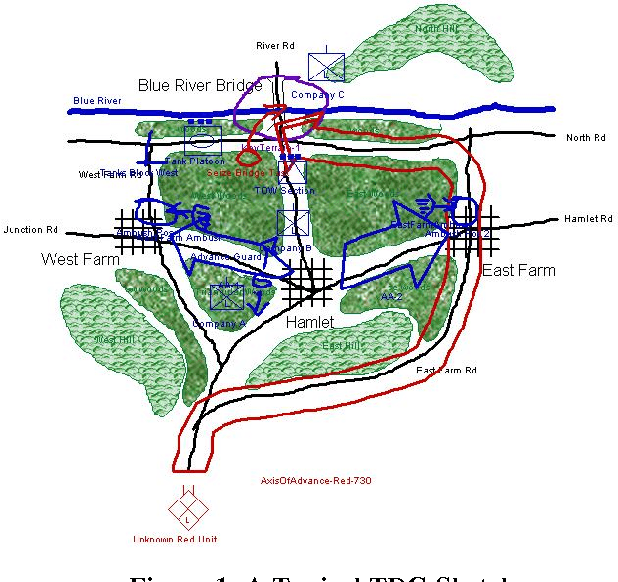

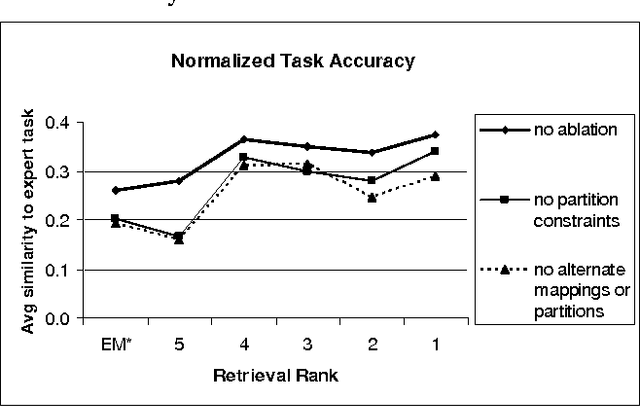

Abstract: Tactical Decision Games (TDGs) are military conflict scenarios presented both textually and graphically on a map. These scenarios provide a challenging domain for machine learning because they are open-ended, highly structured, and typically contain many details of varying relevance. We have developed a problem-solving component of an interactive companion system that proposes military tasks to solve TDG scenarios using a combination of analogical retrieval, mapping, and constraint propagation. We use this problem-solving component to explore analogical learning. In this paper, we describe the problems encountered in learning for this domain and the methods we have developed to address them, such as partition constraints on analogical mapping correspondences and the use of incremental remapping to improve robustness. We present the results of learning experiments that show improvement in performance through the simple accumulation of examples, despite a weak domain theory.
