Katja Logar

Unified Question Answering in Slovene

Nov 16, 2022

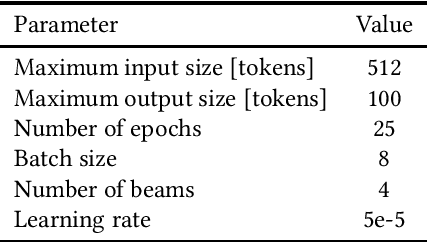

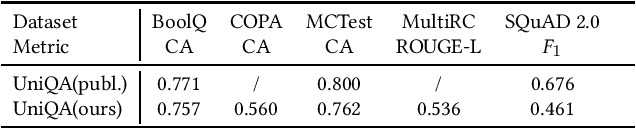

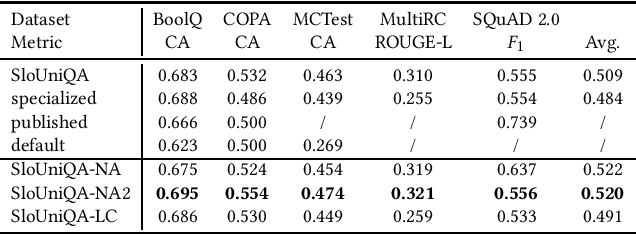

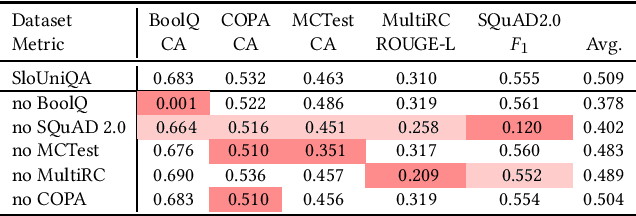

Abstract: Question answering is one of the most challenging tasks in language understanding. Most approaches are developed for English, while less-resourced languages receive far less attention. We adapt a successful English question-answering approach, UnifiedQA, to the less-resourced Slovene language. Our adaptation uses the encoder-decoder transformer models SloT5 and mT5 to handle four question-answering formats: yes/no, multiple-choice, abstractive, and extractive. We use existing Slovene adaptations of four datasets and machine-translate the MCTest dataset. We show that a general model can answer questions in different formats at least as well as specialized models. Cross-lingual transfer from English further improves the results. While we produce state-of-the-art results for Slovene, the performance still lags behind English.
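To illustrate how a single text-to-text model can cover all four formats, the sketch below flattens a question, optional answer choices, and an optional context passage into one input string. This is a minimal sketch and not the authors' code: the function name `to_unified_input`, the `(A)`/`(B)` choice labels, and the literal `\n` separator are assumptions modelled on the general UnifiedQA idea of a shared input encoding.

```python
from typing import Optional, Sequence

# Assumed separator: a literal backslash-n token between fields (hypothetical choice).
SEP = " \\n "


def to_unified_input(
    question: str,
    context: Optional[str] = None,
    choices: Optional[Sequence[str]] = None,
) -> str:
    """Flatten one QA example into a single string for a text-to-text model.

    Yes/no, extractive, and abstractive questions pass only a question and a
    context; multiple-choice questions additionally pass labelled choices.
    """
    parts = [question.strip()]
    if choices:
        # Label the choices (A), (B), (C), ... and join them on one line.
        labelled = " ".join(
            f"({chr(ord('A') + i)}) {choice.strip()}"
            for i, choice in enumerate(choices)
        )
        parts.append(labelled)
    if context:
        parts.append(context.strip())
    return SEP.join(parts)


if __name__ == "__main__":
    # Yes/no question with a context passage.
    print(to_unified_input("Ali je Ljubljana glavno mesto Slovenije?",
                           context="Ljubljana je glavno mesto Slovenije."))
    # Multiple-choice question with choices and a context passage.
    print(to_unified_input("Katero mesto je glavno mesto Slovenije?",
                           context="Ljubljana je glavno mesto Slovenije.",
                           choices=["Maribor", "Ljubljana", "Koper"]))
```

Because every format maps to the same kind of string, one encoder-decoder model can be trained on all of them, with the expected answer always given as plain target text.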
