Kara Breeden

Towards a Standardized Reinforcement Learning Framework for AAM Contingency Management

Nov 17, 2023

Abstract: Advanced Air Mobility (AAM) is the next generation of air transportation that includes new entrants such as electric vertical takeoff and landing (eVTOL) aircraft, increasingly autonomous flight operations, and small UAS package delivery. With these new vehicles and operational concepts comes a desire to increase densities far beyond what occurs today in and around urban areas, to utilize new battery technology, and to move toward more autonomously piloted aircraft. To achieve these goals, it becomes essential to introduce new safety management system capabilities that can rapidly assess risk as it evolves across a span of complex hazards and, if necessary, mitigate risk by executing appropriate contingencies via supervised or automated decision-making during flights. Recently, reinforcement learning has shown promise for real-time decision-making across a wide variety of applications including contingency management. In this work, we formulate the contingency management problem as a Markov Decision Process (MDP) and integrate the contingency management MDP into the AAM-Gym simulation framework. This enables rapid prototyping of reinforcement learning algorithms and evaluation of existing systems, thus providing a community benchmark for future algorithm development. We report baseline statistical information for the environment and provide example performance metrics.

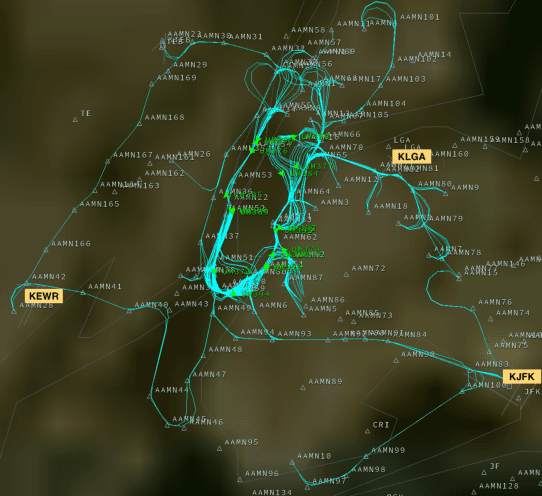

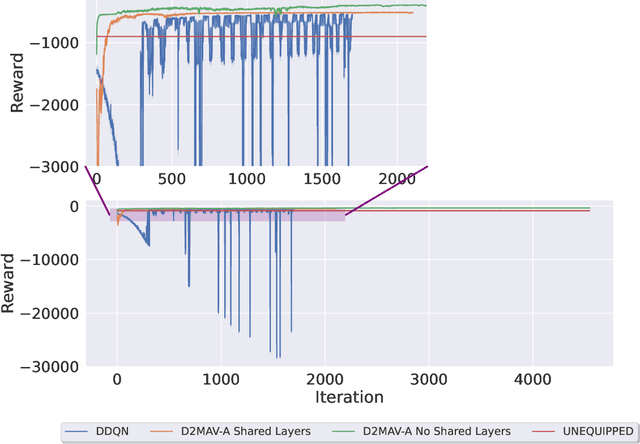

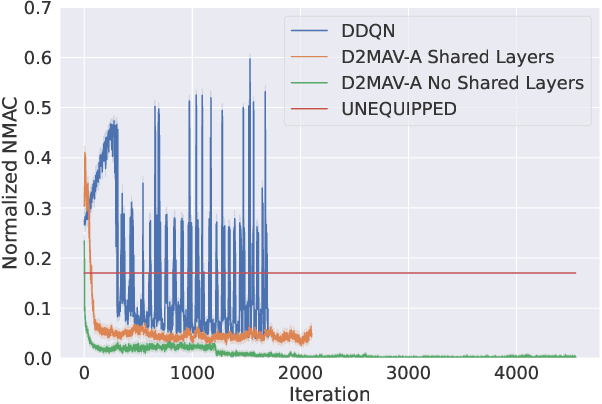

Improving Autonomous Separation Assurance through Distributed Reinforcement Learning with Attention Networks

Aug 09, 2023

Abstract: Advanced Air Mobility (AAM) introduces a new, efficient mode of transportation with the use of vehicle autonomy and electrified aircraft to provide increasingly autonomous transportation between previously underserved markets. Safe and efficient navigation of low-altitude aircraft through highly dense environments requires the integration of a multitude of complex observations, such as surveillance, knowledge of vehicle dynamics, and weather. Processing and reasoning over these observations is challenging because the information carries various sources of uncertainty and because cooperation must be ensured with a variable number of aircraft in the airspace. These challenges, coupled with the requirement to make safety-critical decisions in real time, rule out the use of conventional separation assurance techniques. We present a decentralized reinforcement learning framework to provide autonomous self-separation capabilities within AAM corridors with the use of speed and vertical maneuvers. The problem is formulated as a Markov Decision Process and solved by developing a novel extension to the sample-efficient, off-policy soft actor-critic (SAC) algorithm. We introduce the use of attention networks for variable-length observation processing and a distributed computing architecture to achieve high training sample throughput as compared to existing approaches. A comprehensive numerical study shows that the proposed framework can ensure safe and efficient separation of aircraft in high-density, dynamic environments with various sources of uncertainty.

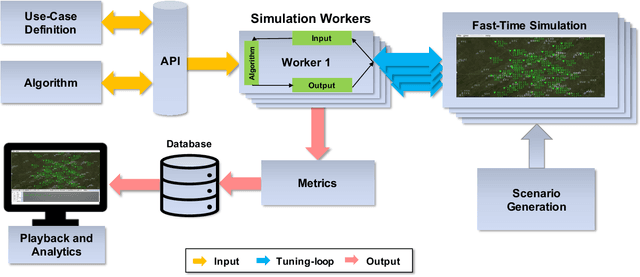

AAM-Gym: Artificial Intelligence Testbed for Advanced Air Mobility

Jun 09, 2022

Abstract: We introduce AAM-Gym, a research and development testbed for Advanced Air Mobility (AAM). AAM has the potential to revolutionize travel by reducing ground traffic and emissions by leveraging new types of aircraft such as electric vertical take-off and landing (eVTOL) aircraft and new advanced artificial intelligence (AI) algorithms. Validation of AI algorithms requires representative AAM scenarios, as well as a fast-time simulation testbed to evaluate their performance. Until now, there has been no such testbed available for AAM to enable a common research platform for individuals in government, industry, or academia. MIT Lincoln Laboratory has developed AAM-Gym to address this gap by providing an ecosystem to develop, train, and validate new and established AI algorithms across a wide variety of AAM use cases. In this paper, we use AAM-Gym to study the performance of two reinforcement learning algorithms on an AAM use case, separation assurance in AAM corridors. The performance of the two algorithms is demonstrated based on a series of metrics provided by AAM-Gym, showing the testbed's utility to AAM research.
