Kami Vinton

Entendre, a Social Bot Detection Tool for Niche, Fringe, and Extreme Social Media

Aug 13, 2024

Abstract: Social bots, automated accounts that generate and spread content on social media, are exploiting vulnerabilities in these platforms to manipulate public perception and disseminate disinformation. This has prompted the development of public bot detection services; however, most of these services focus primarily on Twitter, leaving niche platforms vulnerable. Fringe social media platforms such as Parler, Gab, and Gettr often have minimal moderation, which facilitates the spread of hate speech and misinformation. To address this gap, we introduce Entendre, an open-access, scalable, and platform-agnostic bot detection framework. Entendre can process a labeled dataset from any social platform to produce a tailored bot detection model using a random forest classification approach, ensuring robust social bot detection. We exploit the idea that most social platforms share a generic template, where users can post content, approve content, and provide a bio (common data features). By emphasizing general data features over platform-specific ones, Entendre offers rapid extensibility at the expense of some accuracy. To demonstrate Entendre's effectiveness, we used it to explore the presence of bots among accounts posting racist content on the now-defunct right-wing platform Parler. We examined 233,000 posts from 38,379 unique users and found that 1,916 unique users (4.99%) exhibited bot-like behavior. Visualization techniques further revealed that these bots significantly impacted the network, amplifying influential rhetoric and hashtags (e.g., #qanon, #trump, #antilgbt). These preliminary findings underscore the need for tools like Entendre to monitor and assess bot activity across diverse platforms.
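The "generic template" idea in the abstract (posts, approvals, and a bio shared across platforms) can be illustrated with a minimal sketch. This is not Entendre's actual code: the platform field names in `FIELD_MAPS`, the `GenericAccount` type, and the specific features computed are all assumptions chosen for illustration. The resulting vectors are the kind of input one might pass to a random forest classifier.

```python
from dataclasses import dataclass


@dataclass
class GenericAccount:
    """Platform-agnostic view of an account (hypothetical type)."""
    posts: list[str]   # text of the user's posts
    approvals: int     # likes/upvotes/etc. the user has given
    bio: str           # free-text profile description


# Hypothetical per-platform field names; real exports will differ.
FIELD_MAPS = {
    "parler": {"posts": "parleys", "approvals": "upvotes", "bio": "bio"},
    "gab": {"posts": "statuses", "approvals": "favourites", "bio": "note"},
}


def to_generic(platform: str, raw: dict) -> GenericAccount:
    """Map a raw platform record onto the shared template."""
    fields = FIELD_MAPS[platform]
    return GenericAccount(
        posts=list(raw.get(fields["posts"], [])),
        approvals=int(raw.get(fields["approvals"], 0)),
        bio=str(raw.get(fields["bio"], "")),
    )


def feature_vector(account: GenericAccount) -> list[float]:
    """Compute illustrative, platform-independent features."""
    n_posts = len(account.posts)
    avg_len = sum(len(p) for p in account.posts) / n_posts if n_posts else 0.0
    # Share of distinct posts; low values suggest repeated content.
    distinct_ratio = len(set(account.posts)) / n_posts if n_posts else 0.0
    return [
        float(n_posts),
        avg_len,
        distinct_ratio,
        float(account.approvals),
        float(len(account.bio)),
    ]


raw_record = {"parleys": ["a", "a", "bcd"], "upvotes": 7, "bio": "hi"}
features = feature_vector(to_generic("parler", raw_record))
```

Supporting a new platform in this sketch only requires a new entry in `FIELD_MAPS`, which mirrors the trade-off the abstract describes: fast extensibility, with platform-specific signals left unused.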

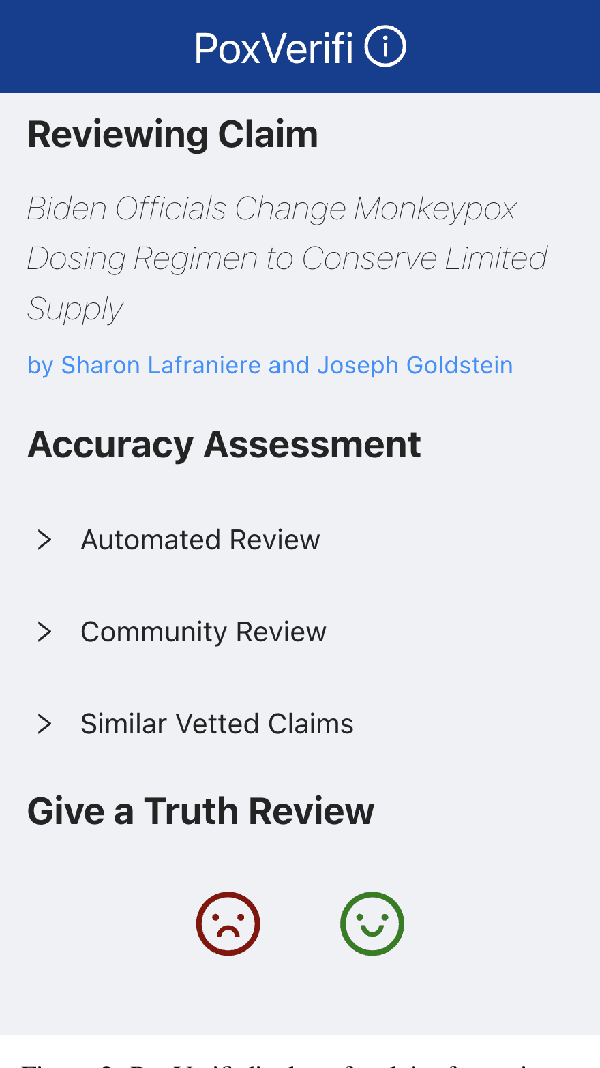

PoxVerifi: An Information Verification System to Combat Monkeypox Misinformation

Sep 09, 2022

Abstract: Following recent outbreaks, monkeypox-related misinformation continues to spread rapidly online. This negatively impacts response strategies and disproportionately harms LGBTQ+ communities in the short term, and ultimately undermines the overall effectiveness of public health responses. In an attempt to combat monkeypox-related misinformation, we present PoxVerifi, an open-source, extensible tool that provides a comprehensive approach to assessing the accuracy of monkeypox-related claims. Leveraging information from existing fact-checking sources and published World Health Organization (WHO) information, we created an open-source corpus of 225 rated monkeypox claims. Additionally, we trained an open-source BERT-based machine learning model specifically for classifying monkeypox information, which achieved 96% cross-validation accuracy. PoxVerifi is a Google Chrome browser extension designed to empower users to navigate through monkeypox-related misinformation. Specifically, PoxVerifi provides users with a comprehensive toolkit to assess the veracity of headlines on any webpage across the Internet without having to visit an external site. Users can view an automated accuracy review from our trained machine learning model, view a user-generated accuracy review based on community-member votes, and see similar, vetted claims. Besides PoxVerifi's comprehensive approach to claim-testing, our platform provides an efficient and accessible method to crowdsource accuracy ratings on monkeypox-related claims, which can be aggregated to create new labeled misinformation datasets.
