Kai Dierkes

Towards disease-aware image editing of chest X-rays

Sep 03, 2021

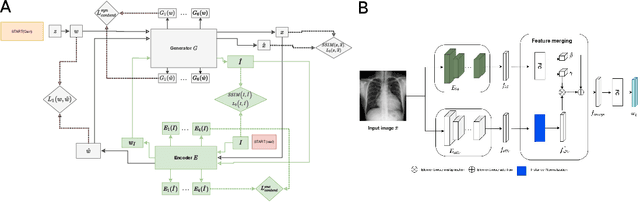

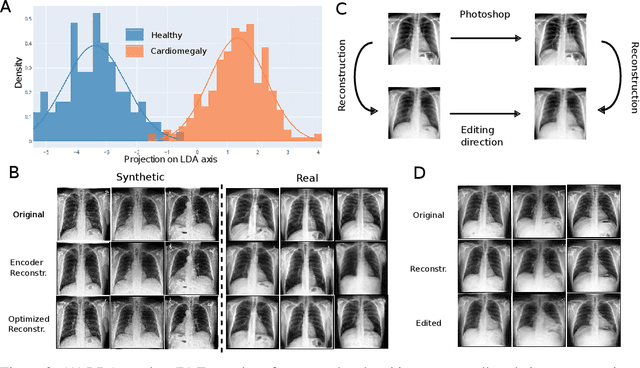

Abstract: Disease-aware image editing by means of generative adversarial networks (GANs) constitutes a promising avenue for advancing the use of AI in the healthcare sector. Here, we present a proof of concept of this idea. While GAN-based techniques have been successful in generating and manipulating natural images, their application to the medical domain is still in its infancy. Working with the CheXpert data set, we show that StyleGAN can be trained to generate realistic chest X-rays. Inspired by the Cyclic Reverse Generator (CRG) framework, we train an encoder that allows for faithfully inverting the generator on synthetic X-rays and provides organ-level reconstructions of real ones. Employing a guided manipulation of latent codes, we confer the medical condition of cardiomegaly (increased heart size) onto real X-rays from healthy patients. This work was presented at the Medical Imaging meets NeurIPS Workshop 2020, which was held as part of the 34th Conference on Neural Information Processing Systems (NeurIPS 2020) in Vancouver, Canada.

A High-Level Description and Performance Evaluation of Pupil Invisible

Sep 01, 2020

Abstract: Head-mounted eye trackers promise convenient access to reliable gaze data in unconstrained environments. Due to several limitations, however, they can often only partially deliver on this promise. Among those are the following: (i) the necessity of performing a device setup and calibration prior to every use of the eye tracker, (ii) a lack of robustness of gaze-estimation results against perturbations, such as outdoor lighting conditions and unavoidable slippage of the eye tracker on the head of the subject, and (iii) behavioral distortion resulting from social awkwardness, due to the unnatural appearance of current head-mounted eye trackers. Recently, Pupil Labs released Pupil Invisible glasses, a head-mounted eye tracker engineered to tackle these limitations. Here, we present an extensive evaluation of its gaze-estimation capabilities. To this end, we designed a data-collection protocol and evaluation scheme geared towards providing a faithful portrayal of the real-world usage of Pupil Invisible glasses. In particular, we develop a geometric framework for gauging gaze-estimation accuracy that goes beyond reporting mean angular accuracy. We demonstrate that Pupil Invisible glasses, without the need for calibration, provide gaze estimates that are robust to perturbations, including outdoor lighting conditions and slippage of the headset.
