K Naveen Kumar

Precision Guided Approach to Mitigate Data Poisoning Attacks in Federated Learning

Apr 05, 2024

Abstract:Federated Learning (FL) is a collaborative learning paradigm enabling participants to collectively train a shared machine learning model while preserving the privacy of their sensitive data. Nevertheless, the inherent decentralized and data-opaque characteristics of FL render its susceptibility to data poisoning attacks. These attacks introduce malformed or malicious inputs during local model training, subsequently influencing the global model and resulting in erroneous predictions. Current FL defense strategies against data poisoning attacks either involve a trade-off between accuracy and robustness or necessitate the presence of a uniformly distributed root dataset at the server. To overcome these limitations, we present FedZZ, which harnesses a zone-based deviating update (ZBDU) mechanism to effectively counter data poisoning attacks in FL. Further, we introduce a precision-guided methodology that actively characterizes these client clusters (zones), which in turn aids in recognizing and discarding malicious updates at the server. Our evaluation of FedZZ across two widely recognized datasets: CIFAR10 and EMNIST, demonstrate its efficacy in mitigating data poisoning attacks, surpassing the performance of prevailing state-of-the-art methodologies in both single and multi-client attack scenarios and varying attack volumes. Notably, FedZZ also functions as a robust client selection strategy, even in highly non-IID and attack-free scenarios. Moreover, in the face of escalating poisoning rates, the model accuracy attained by FedZZ displays superior resilience compared to existing techniques. For instance, when confronted with a 50% presence of malicious clients, FedZZ sustains an accuracy of 67.43%, while the accuracy of the second-best solution, FL-Defender, diminishes to 43.36%.

Black-box Adversarial Attacks in Autonomous Vehicle Technology

Jan 15, 2021

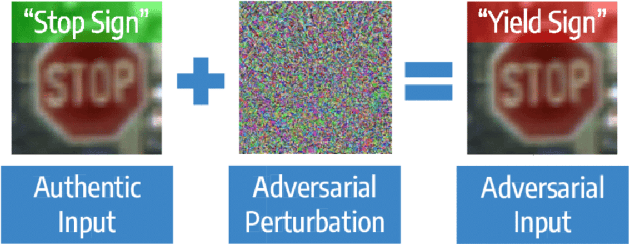

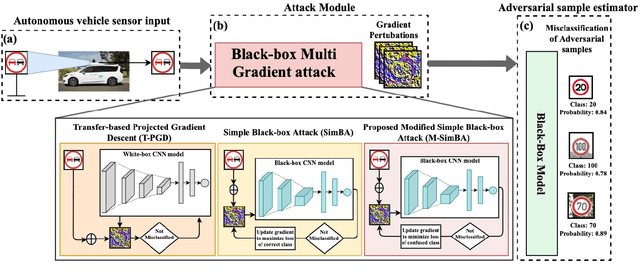

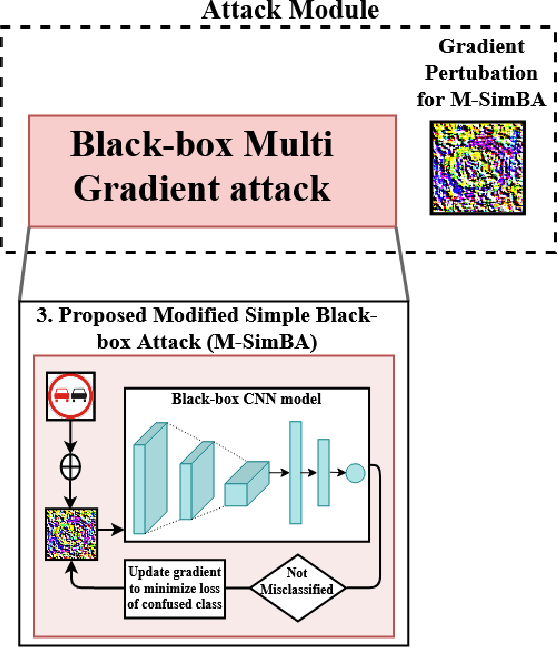

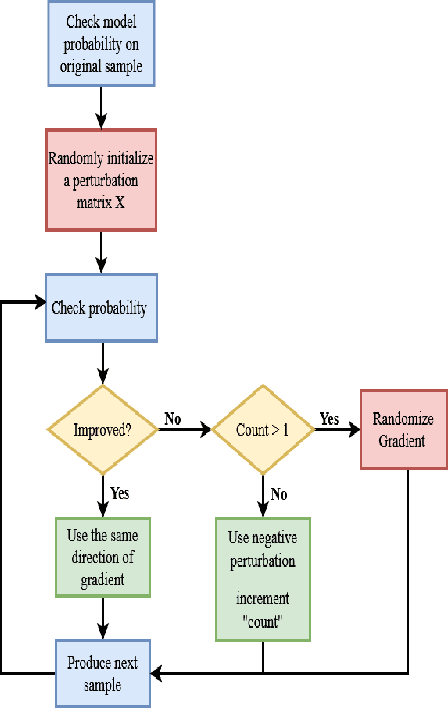

Abstract:Despite the high quality performance of the deep neural network in real-world applications, they are susceptible to minor perturbations of adversarial attacks. This is mostly undetectable to human vision. The impact of such attacks has become extremely detrimental in autonomous vehicles with real-time "safety" concerns. The black-box adversarial attacks cause drastic misclassification in critical scene elements such as road signs and traffic lights leading the autonomous vehicle to crash into other vehicles or pedestrians. In this paper, we propose a novel query-based attack method called Modified Simple black-box attack (M-SimBA) to overcome the use of a white-box source in transfer based attack method. Also, the issue of late convergence in a Simple black-box attack (SimBA) is addressed by minimizing the loss of the most confused class which is the incorrect class predicted by the model with the highest probability, instead of trying to maximize the loss of the correct class. We evaluate the performance of the proposed approach to the German Traffic Sign Recognition Benchmark (GTSRB) dataset. We show that the proposed model outperforms the existing models like Transfer-based projected gradient descent (T-PGD), SimBA in terms of convergence time, flattening the distribution of confused class probability, and producing adversarial samples with least confidence on the true class.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge