Justin Folden

FoveaSPAD: Exploiting Depth Priors for Adaptive and Efficient Single-Photon 3D Imaging

Dec 03, 2024

Abstract: Fast, efficient, and accurate depth sensing is important for safety-critical applications such as autonomous vehicles. Direct time-of-flight LiDAR has the potential to fulfill these demands, thanks to its ability to provide high-precision depth measurements at long standoff distances. While conventional LiDAR relies on avalanche photodiodes (APDs), single-photon avalanche diodes (SPADs) are an emerging image-sensing technology that offers many advantages, such as extreme sensitivity and time resolution. In this paper, we address the key challenges to widespread adoption of SPAD-based LiDARs: their susceptibility to ambient light and the large amount of raw photon data that must be processed to obtain in-pixel depth estimates. We propose new algorithms and sensing policies that improve signal-to-noise ratio (SNR) and increase computing and memory efficiency for SPAD-based LiDARs. During capture, we use external signals to foveate, i.e., guide how the SPAD system estimates scene depths. This foveated approach allows our method to "zoom into" the signal of interest, reducing the amount of raw photon data that needs to be stored and transferred from the SPAD sensor, while also improving resilience to ambient light. We show results both in simulation and with real hardware emulation, with specific implementations achieving a 1548-fold reduction in memory usage. Our algorithms can be applied to newly available and future SPAD arrays.
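To illustrate the foveation idea described in this abstract, here is a minimal sketch. It is not the authors' implementation. It assumes a per-pixel depth prior is available from some external source, and it keeps a photon histogram only over a narrow window of time bins around the round-trip time implied by that prior. The function names, the bin width, and the window size are hypothetical choices made for this example.

```python
C = 299_792_458.0  # speed of light in m/s


def depth_to_bin(depth_m, bin_width_s):
    """Convert a depth to the index of the time bin holding its round-trip time."""
    return int(round(2.0 * depth_m / C / bin_width_s))


def foveated_histogram(timestamps_s, prior_depth_m, window_bins, bin_width_s):
    """Count photons only inside a window of bins centred on the prior depth.

    Photons that arrive outside the window (mostly ambient light, if the
    prior is reasonable) are discarded instead of being stored.
    """
    centre = depth_to_bin(prior_depth_m, bin_width_s)
    start = max(0, centre - window_bins // 2)
    hist = [0] * window_bins
    for t in timestamps_s:
        b = int(t / bin_width_s) - start
        if 0 <= b < window_bins:
            hist[b] += 1
    return start, hist


def estimate_depth(start, hist, bin_width_s):
    """Take the peak bin of the windowed histogram and convert it back to depth."""
    peak = max(range(len(hist)), key=hist.__getitem__)
    tof_s = (start + peak + 0.5) * bin_width_s  # centre of the peak bin
    return 0.5 * C * tof_s
```

In this sketch the stored histogram has `window_bins` entries rather than one entry per bin over the full unambiguous range, which is where the memory saving would come from. The price is that a prior that is off by more than half the window misses the signal peak entirely.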

A MEMS-based Foveating LIDAR to enable Real-time Adaptive Depth Sensing

Mar 21, 2020

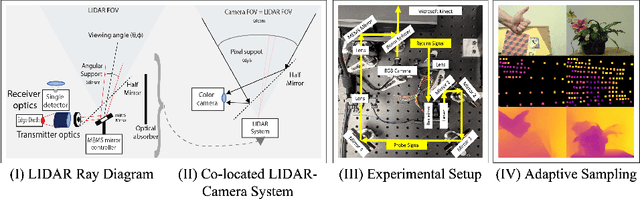

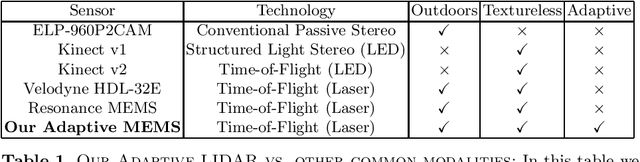

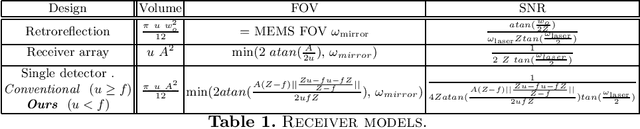

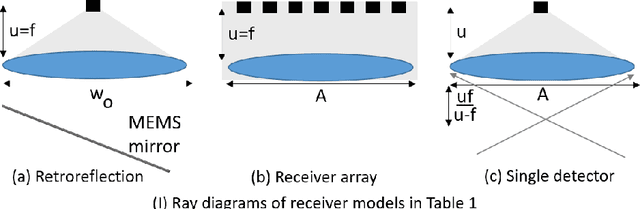

Abstract: Most active depth sensors sample their visual field using a fixed pattern, decided by accuracy, speed and cost trade-offs, rather than scene content. However, a number of recent works have demonstrated that adapting measurement patterns to scene content can offer significantly better trade-offs. We propose a hardware LIDAR design that allows flexible real-time measurements according to dynamically specified measurement patterns. Our flexible depth sensor design consists of a controllable scanning LIDAR that can foveate, or increase resolution in regions of interest, and that can fully leverage the power of adaptive depth sensing. We describe our optical setup and calibration, which enable fast sparse depth measurements using a scanning MEMS (micro-electro-mechanical systems) mirror. We validate the efficacy of our prototype LIDAR design by testing on over 75 static and dynamic scenes spanning a range of environments. We also show CNN-based depth-map completion of sparse measurements obtained by our sensor. Our experiments show that our sensor can realize adaptive depth sensing systems.
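As a rough illustration of what a dynamically specified, foveated measurement pattern could look like, the sketch below combines a coarse sampling grid over the whole field of view with a finer grid inside one region of interest. This is an assumption-laden toy, not the paper's scanning scheme: the function name, the rectangular region of interest, and the grid steps are all hypothetical, and real mirror kinematics are ignored.

```python
def foveated_pattern(width, height, roi, coarse_step, fine_step):
    """Return (x, y) sample points: coarse grid everywhere, fine grid inside roi.

    roi is (x0, y0, x1, y1) with x1 and y1 exclusive. Points shared by both
    grids are kept once. The result is ordered row by row.
    """
    x0, y0, x1, y1 = roi
    points = {
        (x, y)
        for y in range(0, height, coarse_step)
        for x in range(0, width, coarse_step)
    }
    points |= {
        (x, y)
        for y in range(y0, y1, fine_step)
        for x in range(x0, x1, fine_step)
    }
    return sorted(points, key=lambda p: (p[1], p[0]))
```

For a 64 x 64 field with a 16 x 16 region of interest, a coarse step of 8 and a fine step of 2 concentrate about half of the samples in one sixteenth of the area, which is the kind of trade-off adaptive depth sensing aims to exploit.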
