Justin Beaver

An Assessment of the Usability of Machine Learning Based Tools for the Security Operations Center

Dec 16, 2020

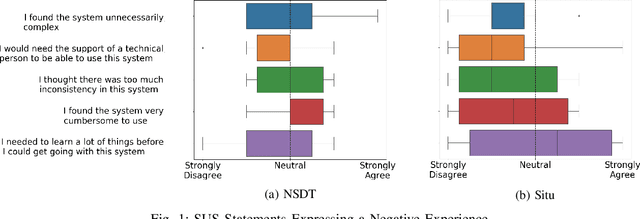

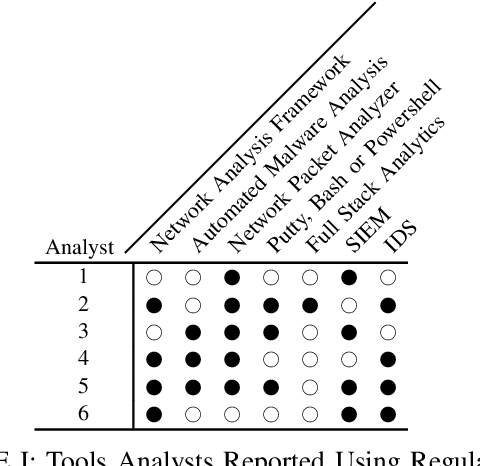

Abstract: Gartner, a large research and advisory company, anticipates that by 2024, 80% of security operations centers (SOCs) will use machine learning (ML) based solutions to enhance their operations. In light of such widespread adoption, it is vital for the research community to identify and address usability concerns. This work presents the results of the first in situ usability assessment of ML-based tools. With the support of the US Navy, we leveraged the National Cyber Range, a large, air-gapped cyber testbed equipped with state-of-the-art network and user emulation capabilities, to study six US Naval SOC analysts' usage of two tools. Our analysis identified several serious usability issues, including multiple violations of established usability heuristics from user interface design. We also discovered that analysts lacked a clear mental model of how these tools generate scores, resulting in mistrust and/or misuse of the tools themselves. Surprisingly, we found no correlation between analysts' level of education or years of experience and their performance with either tool, suggesting that other factors, such as prior background knowledge or personality, play a significant role in ML-based tool usage. Our findings demonstrate that ML-based security tool vendors must put a renewed focus on working with analysts, both experienced and inexperienced, to ensure that their systems are usable and useful in real-world security operations settings.
