Julius Surya Sumantri

360 Panorama Synthesis from a Sparse Set of Images with Unknown FOV

Apr 06, 2019

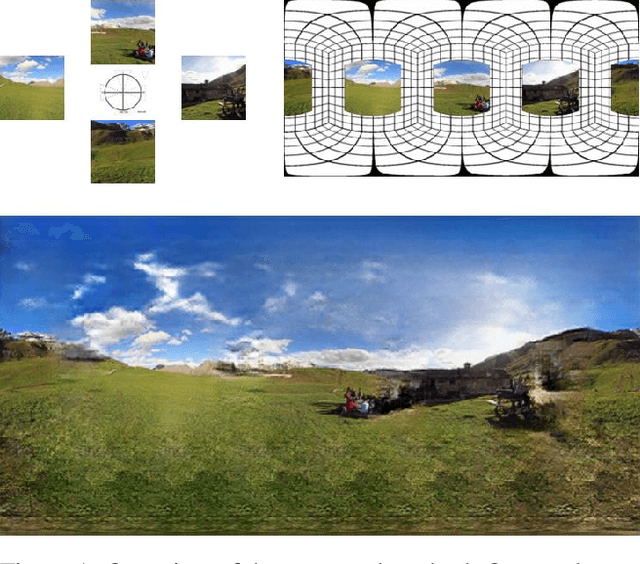

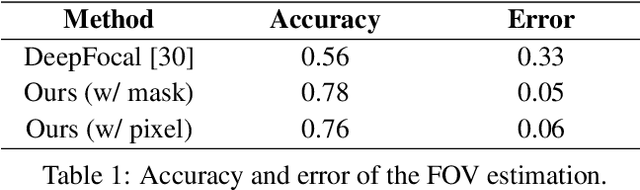

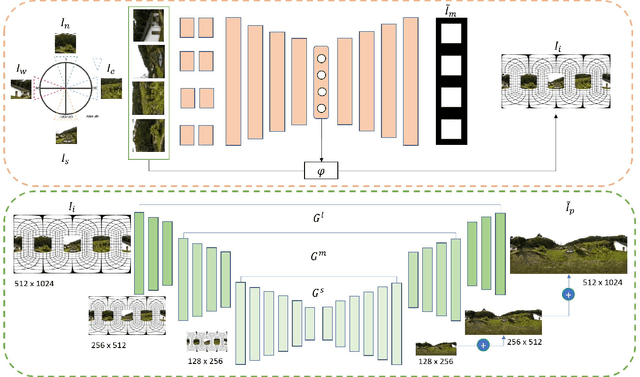

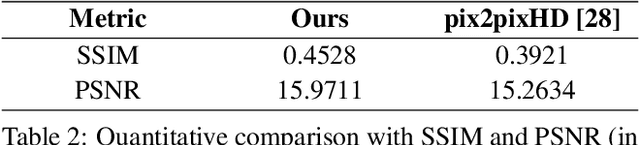

Abstract: 360° images represent scenes captured in all possible viewing directions. They enable viewers to navigate freely around the scene and thus provide an immersive experience. In contrast, conventional images represent scenes in a single viewing direction. These images are captured with a small or limited field of view. As a result, only some parts of the scene are observed, and valuable information about the surroundings is lost. We propose a learning-based approach that reconstructs the scene in 360° × 180° from conventional images. This approach first estimates the field of view of the input images relative to the panorama. The estimated field of view is then used as the prior for synthesizing a high-resolution 360° panoramic output. Experimental results demonstrate that our approach outperforms alternative methods and is robust enough to synthesize real-world data (e.g., scenes captured using smartphones).
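To make "field of view relative to the panorama" concrete, the following is a minimal geometric sketch, not the learned estimator described in the abstract. It assumes an equirectangular panorama (width spans 360°, height spans 180°) and a pinhole input image centered on the horizon. The function names `vertical_fov_deg` and `panorama_footprint` are hypothetical and introduced only for illustration.

```python
import math


def vertical_fov_deg(horizontal_fov_deg, width_px, height_px):
    """Vertical FOV of a pinhole image, given its horizontal FOV (< 180 degrees)."""
    half_h = math.radians(horizontal_fov_deg) / 2.0
    # For a pinhole camera, tan(half FOV) scales with the sensor extent.
    half_v = math.atan(math.tan(half_h) * height_px / width_px)
    return math.degrees(2.0 * half_v)


def panorama_footprint(horizontal_fov_deg, width_px, height_px, pano_width_px):
    """Approximate (columns, rows) an image spans in an equirectangular panorama.

    Assumption: the extent is measured along the image's central row and
    column; the true projected footprint is not an exact rectangle.
    """
    pano_height_px = pano_width_px // 2  # 360 x 180 degrees gives a 2:1 layout
    v_fov = vertical_fov_deg(horizontal_fov_deg, width_px, height_px)
    cols = round(pano_width_px * horizontal_fov_deg / 360.0)
    rows = round(pano_height_px * v_fov / 180.0)
    return cols, rows
```

Under these assumptions, a square image with a 90° horizontal FOV occupies about a quarter of the panorama's width and half of its height, which illustrates how much of the scene remains to be synthesized.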
