Jue Lin

Texture Representation via Analysis and Synthesis with Generative Adversarial Networks

Dec 20, 2022

Abstract: We investigate data-driven texture modeling via analysis and synthesis with generative adversarial networks. For network training and testing, we have compiled a diverse set of spatially homogeneous textures, ranging from stochastic to regular. We adopt StyleGAN3 for synthesis and demonstrate that it produces diverse textures beyond those represented in the training data. For texture analysis, we propose GAN inversion using a novel latent domain reconstruction consistency criterion for synthesized textures, and iterative refinement with Gramian loss for real textures. We propose perceptual procedures for evaluating network capabilities, exploring the global and local behavior of latent space trajectories, and comparing with existing texture analysis-synthesis techniques.

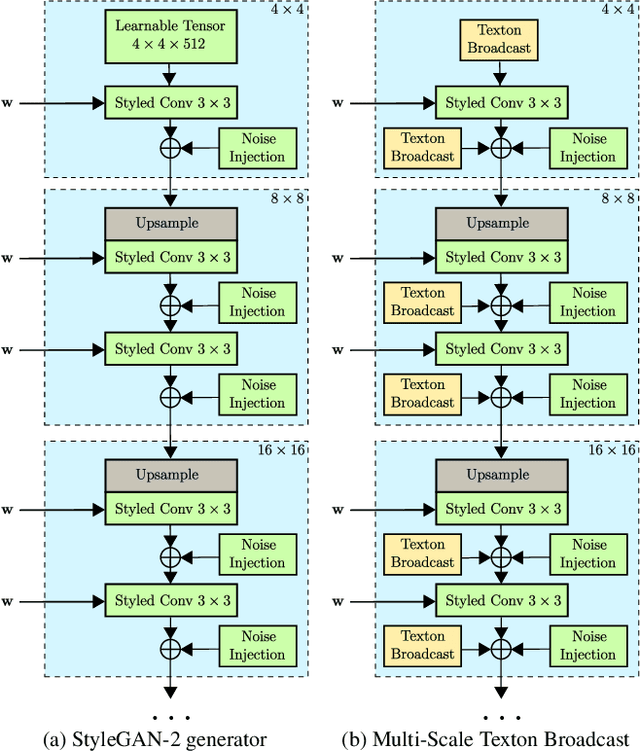

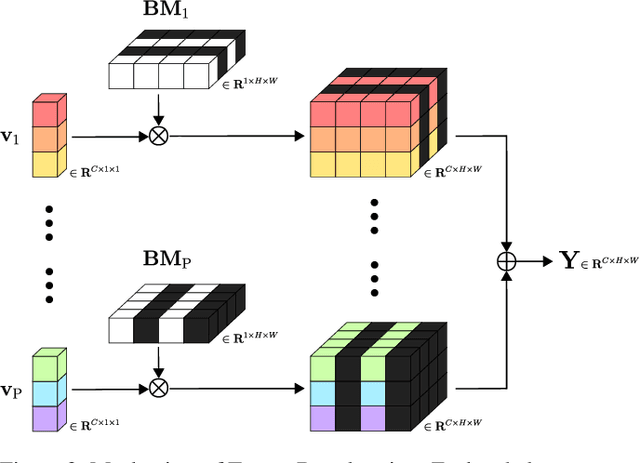

Towards Universal Texture Synthesis by Combining Texton Broadcasting with Noise Injection in StyleGAN-2

Mar 08, 2022

Abstract: We present a new approach for universal texture synthesis by incorporating a multi-scale texton broadcasting module in the StyleGAN-2 framework. The texton broadcasting module introduces an inductive bias, enabling generation of a broader range of textures, from those with regular structures to completely stochastic ones. To train and evaluate the proposed approach, we construct a comprehensive high-resolution dataset that captures the diversity of natural textures as well as stochastic variations within each perceptually uniform texture. Experimental results demonstrate that the proposed approach yields textures of significantly better quality than the state of the art. The ultimate goal of this work is a comprehensive understanding of texture space.
