Joshua W. Shaevitz

Automatically tracking neurons in a moving and deforming brain

Oct 14, 2016

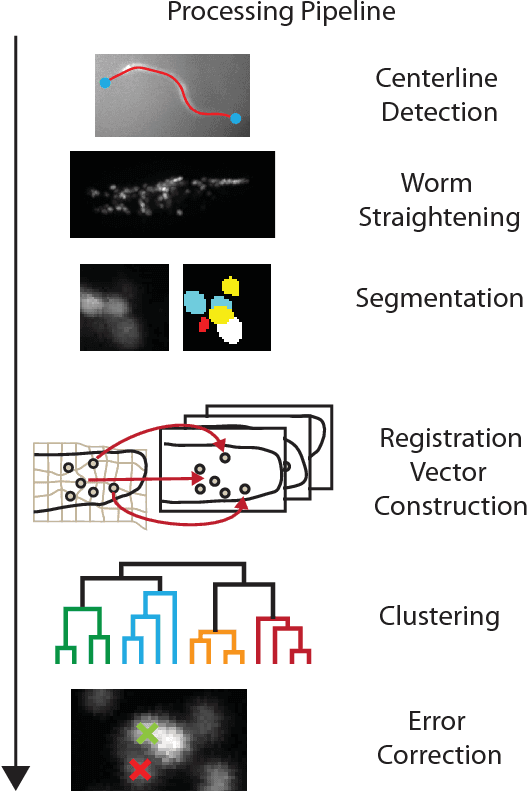

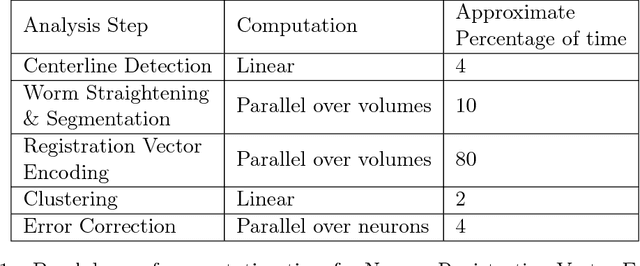

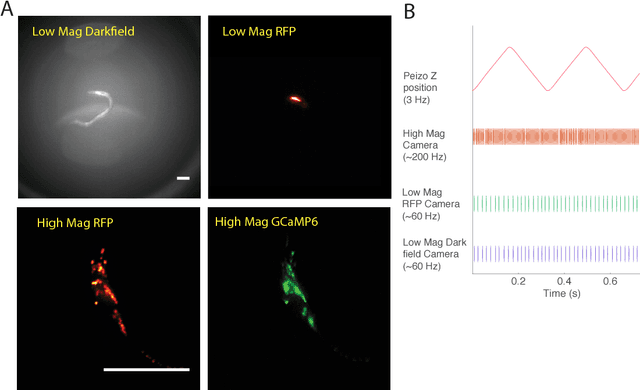

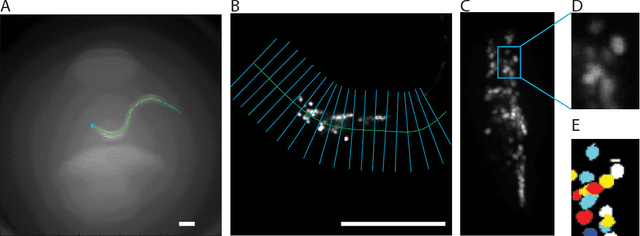

Abstract: Advances in optical neuroimaging techniques now allow neural activity to be recorded with cellular resolution in awake and behaving animals. Brain motion in these recordings poses a unique challenge. The location of individual neurons must be tracked in 3D over time to accurately extract single-neuron activity traces. Recordings from small invertebrates like C. elegans are especially challenging because they undergo very large brain motion and deformation during animal movement. Here we present an automated computer vision pipeline to reliably track populations of neurons with single-neuron resolution in the brain of a freely moving C. elegans undergoing large motion and deformation. 3D volumetric fluorescent images of the animal's brain are straightened, aligned and registered, and the locations of neurons in the images are found via segmentation. Each neuron is then assigned an identity using a new time-independent machine-learning approach we call Neuron Registration Vector Encoding. In this approach, non-rigid point-set registration is used to match each segmented neuron in each volume with a set of reference volumes taken from throughout the recording. The way each neuron matches with the references defines a feature vector, which is clustered to assign an identity to each neuron in each volume. Finally, thin-plate spline interpolation is used to correct errors in segmentation and check the consistency of assigned identities. The Neuron Registration Vector Encoding approach proposed here is uniquely well suited for tracking neurons in brains undergoing large deformations. When applied to whole-brain calcium imaging recordings in freely moving C. elegans, this analysis pipeline located 150 neurons for the duration of an 8-minute recording and consistently found more neurons more quickly than manual or semi-automated approaches.

* 33 pages, 7 figures, code available
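
The encoding step described in the abstract above (match each neuron to several reference volumes, concatenate the matches into a feature vector, then cluster) can be illustrated with a small toy sketch. This is not the authors' code: nearest-neighbour matching stands in for non-rigid point-set registration, a greedy grouping stands in for their clustering step, and all function names, the synthetic points, and the `min_shared` threshold are assumptions made for illustration.

```python
import numpy as np

def match_to_reference(points, reference):
    """Stand-in for non-rigid registration: nearest reference point per neuron."""
    dist = np.linalg.norm(points[:, None, :] - reference[None, :, :], axis=2)
    return dist.argmin(axis=1)

def encode(points, references):
    """Concatenate one-hot match codes over all references (one row per neuron)."""
    blocks = []
    for reference in references:
        idx = match_to_reference(points, reference)
        onehot = np.zeros((len(points), len(reference)))
        onehot[np.arange(len(points)), idx] = 1.0
        blocks.append(onehot)
    return np.hstack(blocks)

def greedy_cluster(features, min_shared):
    """Assumed clustering rule: join the first cluster whose exemplar shares
    at least `min_shared` reference matches, otherwise start a new cluster."""
    exemplars = []
    labels = np.empty(len(features), dtype=int)
    for i, feature in enumerate(features):
        for k, exemplar in enumerate(exemplars):
            if feature @ exemplar >= min_shared:
                labels[i] = k
                break
        else:
            exemplars.append(feature)
            labels[i] = len(exemplars) - 1
    return labels

# Synthetic data: five well-separated "neurons", observed with jitter and in
# shuffled order, so identity cannot be read off from row position.
rng = np.random.default_rng(0)
base = np.array([[0, 0, 0], [10, 0, 0], [0, 10, 0], [0, 0, 10], [10, 10, 10]],
                dtype=float)

def jittered_copy():
    order = rng.permutation(len(base))
    noisy = base[order] + rng.uniform(-1.0, 1.0, size=base.shape)
    return noisy, order  # order[k] is the true identity of row k

references = [jittered_copy()[0] for _ in range(3)]
volumes = [jittered_copy() for _ in range(4)]

features = np.vstack([encode(points, references) for points, _ in volumes])
true_ids = np.concatenate([order for _, order in volumes])
labels = greedy_cluster(features, min_shared=2)
```

Because each neuron's identity is inferred only from how it matches the references, no volume depends on the tracking result of the previous one, which is the sense in which the abstract calls the approach time-independent.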

Mapping the stereotyped behaviour of freely-moving fruit flies

Aug 12, 2014

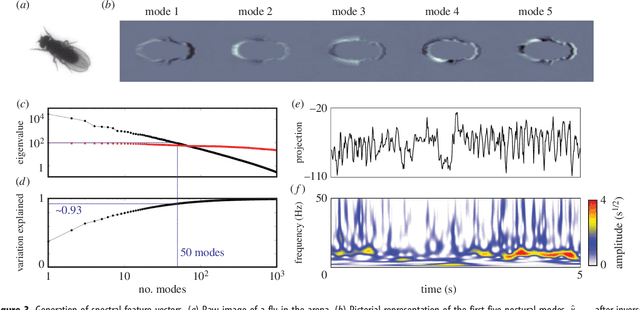

Abstract: Most animals possess the ability to actuate a vast diversity of movements, ostensibly constrained only by morphology and physics. In practice, however, a frequent assumption in behavioral science is that most of an animal's activities can be described in terms of a small set of stereotyped motifs. Here we introduce a method for mapping the behavioral space of organisms, relying only upon the underlying structure of postural movement data to organize and classify behaviors. We find that six different drosophilid species each perform a mix of non-stereotyped actions and over one hundred hierarchically organized, stereotyped behaviors. Moreover, we use this approach to compare these species' behavioral spaces, systematically identifying subtle behavioral differences between closely related species.

Efficient Multiple Object Tracking Using Mutually Repulsive Active Membranes

Dec 26, 2012

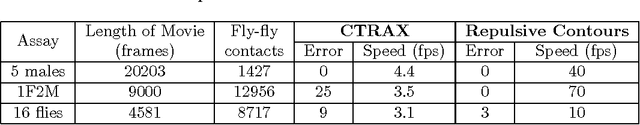

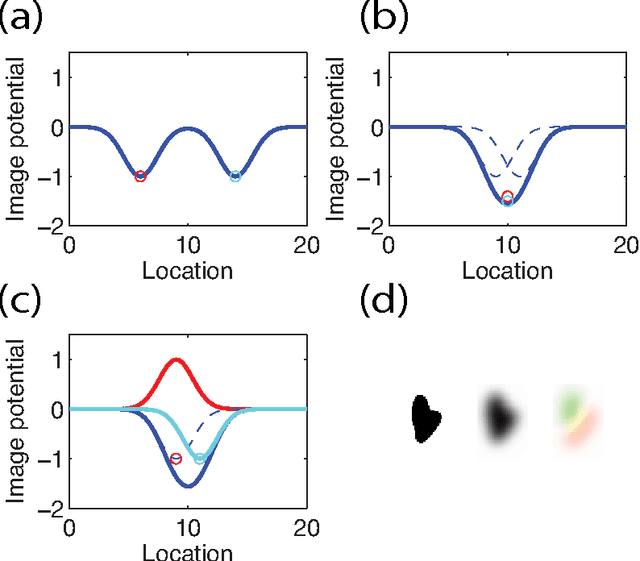

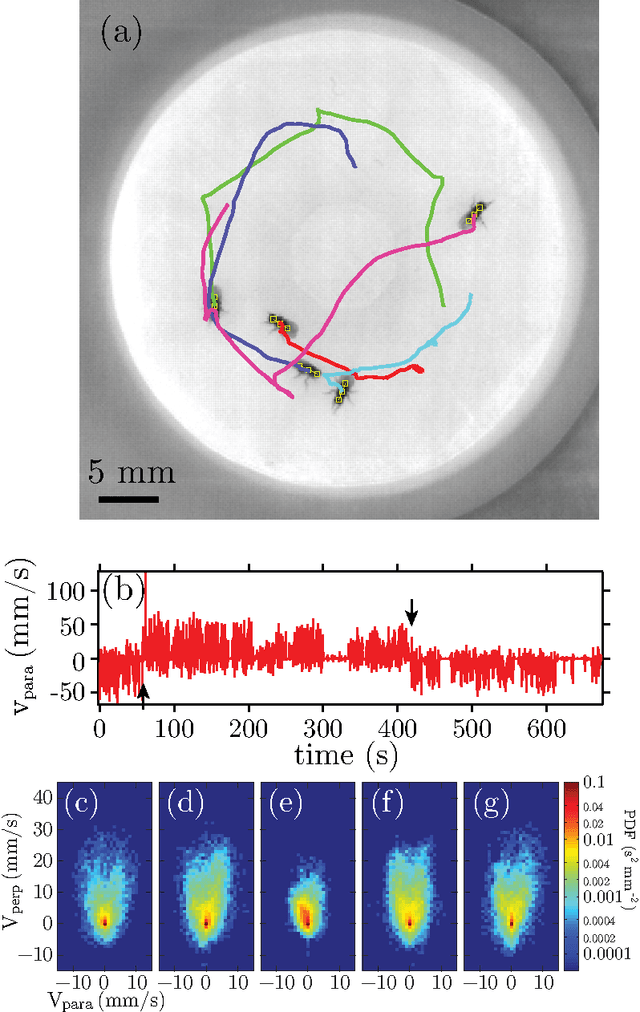

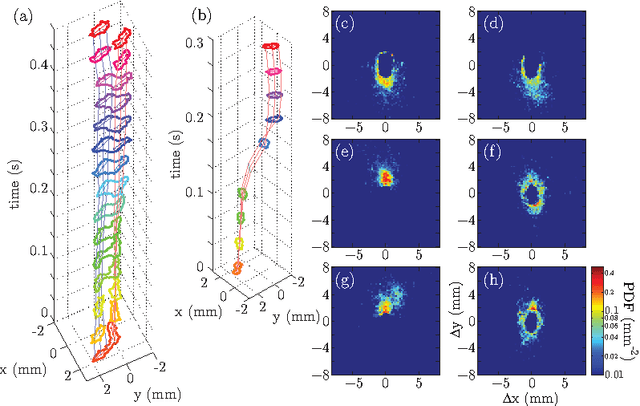

Abstract: Studies of social and group behavior in interacting organisms require high-throughput analysis of the motion of a large number of individual subjects. Computer vision techniques offer solutions to specific tracking problems, and allow automated and efficient tracking with minimal human intervention. In this work, we adopt the open active contour model to track the trajectories of moving objects at high density. We add repulsive interactions between open contours to the original model, treat the trajectories as an extrusion in the temporal dimension, and show applications to two tracking problems. The walking behavior of Drosophila is studied at different population densities and gender compositions. We demonstrate that individual male flies have distinct walking signatures, and that the social interaction between flies in a mixed-gender arena is gender specific. We also apply our model to studies of trajectories of gliding Myxococcus xanthus bacteria at high density. We examine the individual gliding behavioral statistics in terms of the gliding speed distribution. Using these two examples at very different spatial scales, we illustrate the use of our algorithm for tracking both short rigid bodies (Drosophila) and long flexible objects (Myxococcus xanthus). Our repulsive active membrane model reaches error rates below $5\times 10^{-6}$ per fly per second for Drosophila tracking and comparable results for Myxococcus xanthus.
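
The idea of adding repulsion between open contours can be sketched in a few lines. The example below is a toy under stated assumptions, not the paper's model: it keeps only an internal elastic term and a short-range pairwise repulsion, omits image forces and the temporal extrusion entirely, and the linear force law, parameter values, and function names are all hypothetical.

```python
import numpy as np

def elastic_force(contour, alpha):
    """Internal tension of an open contour (discrete Laplacian, free ends)."""
    force = np.zeros_like(contour)
    force[1:-1] = contour[:-2] - 2.0 * contour[1:-1] + contour[2:]
    force[0] = contour[1] - contour[0]
    force[-1] = contour[-2] - contour[-1]
    return alpha * force

def repulsive_force(contour, other, strength, cutoff):
    """Assumed force law: linear repulsion that vanishes beyond `cutoff`."""
    diff = contour[:, None, :] - other[None, :, :]      # shape (n, m, 2)
    dist = np.linalg.norm(diff, axis=2)
    magnitude = strength * np.clip(1.0 - dist / cutoff, 0.0, None)
    unit = diff / np.maximum(dist, 1e-9)[:, :, None]    # avoid division by zero
    return (magnitude[:, :, None] * unit).sum(axis=1)

def min_gap(a, b):
    """Smallest point-to-point distance between two contours."""
    return np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2).min()

def relax(contours, steps=20, dt=0.1, alpha=0.1, strength=1.0, cutoff=2.0):
    """Explicit gradient-descent-style updates on all contours simultaneously."""
    contours = [c.astype(float).copy() for c in contours]
    for _ in range(steps):
        forces = []
        for i, contour in enumerate(contours):
            force = elastic_force(contour, alpha)
            for j, other in enumerate(contours):
                if j != i:
                    force = force + repulsive_force(contour, other, strength, cutoff)
            forces.append(force)
        contours = [c + dt * f for c, f in zip(contours, forces)]
    return contours

# Two nearly overlapping parallel contours, 0.5 units apart.
x = np.arange(10.0)
a0 = np.stack([x, np.zeros_like(x)], axis=1)
b0 = np.stack([x, np.full_like(x, 0.5)], axis=1)

a1, b1 = relax([a0, b0])
gap_before = min_gap(a0, b0)
gap_after = min_gap(a1, b1)
```

In this toy setting the repulsion term pushes the two contours apart, so they do not collapse onto the same object; in a real tracker an image-derived force would hold each contour on its own target while the repulsion resolves close encounters.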
