Joshua A. Kroll

Concrete Safety for ML Problems: System Safety for ML Development and Assessment

Feb 06, 2023

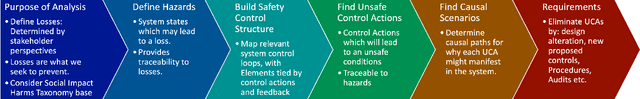

Abstract: Many stakeholders struggle to rely on ML-driven systems because of the risk of harm these systems may cause. Concerns about trustworthiness, unintended social harms, and unacceptable social and ethical violations undermine the promise of ML advancements. Moreover, such risks in complex ML-driven systems present a special challenge as they are often difficult to foresee, arising over periods of time, across populations, and at scale. These risks often arise not from poor ML development decisions or low performance directly but rather emerge through the interactions amongst ML development choices, the context of model use, environmental factors, and the effects of a model on its target. Systems safety engineering is an established discipline with a proven track record of identifying and managing risks even in high-complexity sociotechnical systems. In this work, we apply a state-of-the-art systems safety approach to concrete applications of ML with notable social and ethical risks to demonstrate a systematic means for meeting the assurance requirements needed to argue for safe and trustworthy ML in sociotechnical systems.

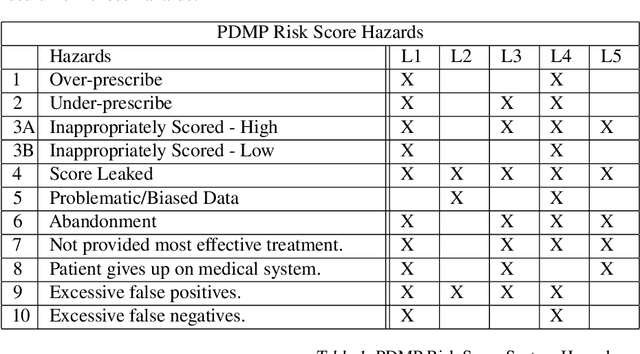

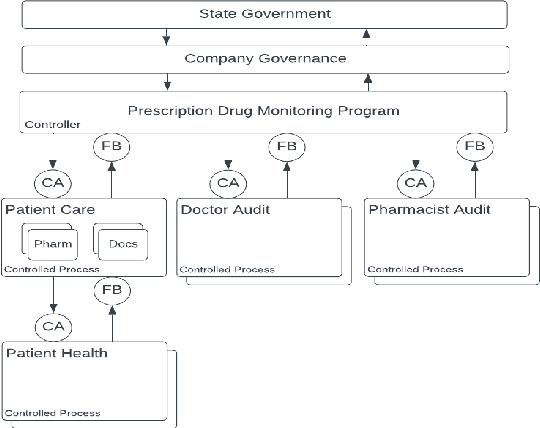

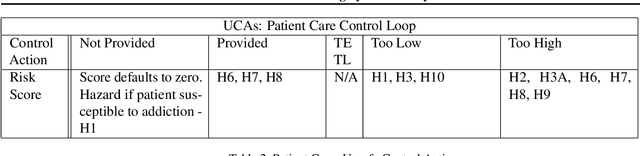

System Safety Engineering for Social and Ethical ML Risks: A Case Study

Nov 08, 2022

Abstract: Governments, industry, and academia have undertaken efforts to identify and mitigate harms in ML-driven systems, with a particular focus on social and ethical risks of ML components in complex sociotechnical systems. However, existing approaches are largely disjointed, ad hoc, and of unknown effectiveness. Systems safety engineering is a well-established discipline with a track record of identifying and managing risks in many complex sociotechnical domains. We adopt the natural hypothesis that tools from this domain could serve to enhance risk analyses of ML in its context of use. To test this hypothesis, we apply a "best of breed" systems safety analysis, Systems Theoretic Process Analysis (STPA), to a specific high-consequence system with an important ML-driven component, namely the Prescription Drug Monitoring Programs (PDMPs) operated by many US States, several of which rely on an ML-derived risk score. We focus in particular on how this analysis can extend to identifying social and ethical risks and developing concrete design-level controls to mitigate them.

Outlining Traceability: A Principle for Operationalizing Accountability in Computing Systems

Jan 23, 2021

Abstract: Accountability is widely understood as a goal for well-governed computer systems, and is a sought-after value in many governance contexts. But how can it be achieved? Recent work on standards for governable artificial intelligence systems offers a related principle: traceability. Traceability requires establishing not only how a system worked but how it was created and for what purpose, in a way that explains why a system has particular dynamics or behaviors. It connects records of how the system was constructed and what the system did mechanically to the broader goals of governance, in a way that highlights human understanding of that mechanical operation and the decision processes underlying it. We examine the various ways in which the principle of traceability has been articulated in AI principles and other policy documents from around the world, distill from these a set of requirements on software systems driven by the principle, and systematize the technologies available to meet those requirements. From our map of requirements to supporting tools, techniques, and procedures, we identify gaps and needs separating what traceability requires from the toolbox available for practitioners. This map reframes existing discussions around accountability and transparency, using the principle of traceability to show how, when, and why transparency can be deployed to serve accountability goals and thereby improve the normative fidelity of systems and their development processes.
